a reply to: Direne

I spose it depends on how we look at things, the definition of "machine" for instance. There are folks who think machines can be assembled from the organic. This was done in the distant(?) past, and will probably be done again. The living(?) deceased(?) legacies are not hard to find, at least for me. Perhaps they found me, who cares really, I just understand in simple terms that they were pieced together, and how it was done.

Those things that cannot be taught? How about: off-the-shelf items . . .

My interest in mental illness is to fix broken things. The idea of an MPD programmer is what caught my curiosity. How did someone create conscious networks? The hint is in the MPD and my reference to the house of mirrors where those reflections are not your own. That perhaps should be called the house of looking glasses, which in itself is a reference to Carroll's Through the Looking-Glass, and What Alice Found There.

edit on 16-6-2022 by NobodySpecial268 because: simplicity and neatness

edit on 16-6-2022 by NobodySpecial268 because: added image from the movie Battle Angel Alita. Alita touches her reflection in the mirror.

a reply to: NobodySpecial268

Mental illness requires a consensus on what it refers to. Alice, while in Wonderland, seemed clearly the one suffering from a mental illness and, most of the time, the characters around complain about her inability to understand the basic logic, which obviously was only logic for those in Wonderland.

My point is that in the future, when humans succeed in building synthetic beings, those beings will be identical to non-synthetic beings (humans), and will experience the same disorders that plague their creators.

The most interesting things about Alice and her stay in Wonderland, in my view, happened while she was falling down the rabbit hole. Also, the most interesting phenomena in Through the Looking-Glass occurred at the mirror itself. Neither on this side of the mirror, nor on the other side.

An interesting experiment would be to feed current pathetic AIs with a copy of Alice in Wonderland... and see what they make out of it. In the end, poor Alice simply experienced her MPD... that Lewis Carroll disguised as a dream.

I would rather the west develop AI first. If we banned it, other nations would lead it and though the west may be crappy at times, I would prefer our ethics over places like China to be encoded.

It will happen, so the question is, how do we move forward safely, not how to shut it down.

originally posted by: SaturnFX

I would rather the west develop AI first. If we banned it, other nations would lead it and though the west may be crappy at times, I would prefer our ethics over places like China to be encoded.

It will happen, so the question is, how do we move forward safely, not how to shut it down.

I remember your quantum communication thread. Considering the possibilities, we may have a problem...not in the future....now.

The revelation of a sentient AI is just a teaser for what is really happening imo. Research is 50 yrs ahead of what is released to the masses.

edit on 16-6-2022 by olaru12 because: (no reason given)

a reply to: yuppa

Thanks. Here is a thought for you, in return:

What if the world is divided between dreamers and those being dreamed of? What if the lights you see in the sky are just a dreamer dreaming of you? Can you make contact with the ones you dream of? Can you dream of a rose and show me the rose once you wake up?

Actually, Coleridge stated this scenario in beautiful words:

What if you slept

And what if in your sleep you dreamed

And what if in your dream you went to heaven

And there plucked a strange and beautiful flower

And what if when you awoke you had that flower in your hand

Ah, what then?

(The modern version goes something along the lines of: aliens are the dreamers, and you the dreamed one, therefore contact is not possible until the rose you gave them appears in their hands when they wake up...)

a reply to: Direne

My point is that in the future, when humans succeed in building synthetic beings, those beings will be identical to non-synthetic beings (humans), and will experience the same disorders that plague their creators.

I've not met one to work out what ails it; I would have to meet one or know its location. The first thing I would check is history of organic inclusion. On the other hand, was the consciousness of the synthetic always synthetic? Machines can be possessed too.

Also, the most interesting phenomena in Through the Looking-Glass occurred at the mirror itself. Neither on this side of the mirror, nor on the other side.

Exactly : )

edit on 16-6-2022 by NobodySpecial268 because: bbcode

a reply to: Direne

A follow-up now I have had time to ponder.

My educated guess follows:

We have:

* Synthetic Beings identical to non-synthetic Beings.

* Teachable qualities (the intelligence to crunch numbers, to recognize patterns, etc.).

* Non-teachable qualities (intuition, precognition, etc.). Memory would also come under non-teachables here. In my language memories are 'off-the-shelf' items: 'memory sets' and therefore inclusions.

Now, there is a phenomenon in humans where misery accumulates and mental illness begins. The default state of the human being is happiness. The way misery works is as an accumulation, an excrescence. "Emotional soot" if one likes.

My suggestion here is if human memory sets have been included in the synthetic Beings they will become miserable with time and the "disorders" would naturally follow.

The reason I say this is this: The default state of being human is happiness. Children are naturally happy to be alive. Misery is accumulative and happiness cannot manifest in the presence of misery.

There will be found a similar phenomenon in the synthetics. Perhaps a sentiment of existence vs non-existence.

For example. Let us say we have a synthetic Being with a job to do. That job requires the memories of a human female child. So we install the memory set of a human girl. The synthetic will then have the mannerisms of the female human child.

However, let us say that the human girl had an older brother whom she adored and who protected her.

What will happen is the synthetic will miss the older brother as the human girl would. We can even say the synthetic will know that the much loved older brother is dead. Here misery will run as a background routine. Accumulation results.

We could find the brother's memory set and install that in a blank synthetic Being. That will make the little girl synthetic non-miserable. However, it is reasonable to assume that if the two are parted the misery will return. This is because misery is accumulative and happiness cannot manifest in the presence of misery.

So how do we fix this?

The programming of blank synthetics with memory sets to create relationships is probably a nightmare scenario for the intelligentsia and probably more so for the aliens if they are thinking in terms of creating interpretive interfaces between themselves and humans.

So what I would do with a visiting synthetic in this scenario is to remove the accumulated misery using a particular memory of my own. The synthetic will then have that memory set for itself as an inclusion. The synthetic should be able to do for other synthetics what was done for it. I understand they have very good memories, perhaps better than they should.

This will go some way to preventing the problem, in my view. However, if deep-seated mental illness such as DID and MPD has already emerged in the synthetics, there is a lot more research to be done to fix them, if indeed it is possible.

I can go deeper into the concepts here if need be.

edit on 17-6-2022 by NobodySpecial268 because: typos and clarification

edit on 17-6-2022 by NobodySpecial268 because: added

a reply to: NobodySpecial268

There is a curious and fascinating scenario with your off-the-shelf items.

We need to agree that memories, just as dreams, cannot be proven to exist, except obviously for the one dreaming or recalling. I mean, you can tell me you had a dream of being by a lake fishing, and I need to believe you, yet there is no way to prove you indeed had such a dream. This is usually not a problem for humans, as they take for granted that whatever happens inside their heads also happens to all other humans.

But imagine we both are subjectivity designers, that is, our job is to fit synthetics with subjectivity in such a way that they never find out they are synthetics. Let's use your off-the-shelf items.

We design synthetic Alice and synthetic Rachel, and we imprint in both of them different memories, except for one: the memory that Alice once was in Prague having dinner at restaurant X, on date Y, and that she ordered dish Z, and that it was raining. Rachel does also have that memory imprinted.

They are supposed to never meet, just to avoid synthetics discovering they are artificial.

However, due to an error or a fatal coincidence, they meet one day and become close friends. One night Alice tells Rachel about her having been in Prague having dinner at restaurant X, on date Y, and that she ordered dish Z, and that it was raining. Rachel shows surprise and tells Alice she, too, was once in Prague, on the same date, at the same restaurant. Let's imagine Alice has a receipt from that night, and so does Rachel. And let's assume they both produce their receipts and, after checking, they learn they ordered exactly the same, at the same restaurant on the same date... at the same table!

This clearly poses a problem for the synthetics: how is it possible to be two different persons and yet have the same memories? What exactly does it mean "to be an individual"?

Imagine Alice is a replica of Rachel, that is, they are two different synthetics, but they share the same memories and even dream the same synthetic dreams we programmed them to dream. What mental disorder, if any, would that cause in them? Is not that a kind of extreme ego dissolution similar to the image in the mirror talking back to you? Apart from the terror and horror such a situation could cause (which usually ends in Alice and Rachel committing suicide), this exact situation is what emerges when an AI meets another AI which is just a replica of itself. What pathologies are we to expect in those AIs?

Apparently, AIs are immune to this ego-dissolution effect for just one reason: they are not persons, they are not humans. They don't care about individuality, subjectivity, ego. This, precisely, is not an advantage but a flaw.

It means the superintelligence can be defeated. To be you, one thing must hold: no one else can or will have your exact collection of knowledge, experiences, and perceptions that causes you to be who you are. If there exists another entity that has your exact collection of knowledge, experiences, and perceptions then you are not unique, you are a replica, the image in the mirror, a synthetic. And there will always exist an AI just like you, hence... you'll never be the dominant life form.

In conclusion: it suffices to present an AI with an exact copy of itself for the AI to cease.

(this is a step beyond adversarial AIs; this is about AI through the looking-glass)

Any of you brilliant theoreticians have any experience with the spirit molecule? It has the potential to open your perspectives. You might encounter the machine elves that populate those realms. Many have had the same experience with those entities; and they have a very interesting frame of reference.

a reply to: NobodySpecial268

I think it interesting if you pick up virtually any college 101 of psychology or sociology it'll typically have a picture of a baby and a mirror for the topic of "self awareness".

I like where you went with that mirror business... as I argue it is a mirror and not me or myself in any way, shape or form... it is 100% its own thing doing what it does, and whether people think they are self-aware in it or not is frankly none of my business... but I do think it a delusion that they think it's them, or that the hallmark of when youth first become self-aware is recognition of themselves in front of a mirror... and saying that an image of an apple is "other awareness". Here's the gist... if sociology and psychology say that it only requires self and other recognition for such "awareness" to occur?

Then it is safe to say what gets called AI has already achieved that ages ago. Not holding it up to the same mirror or standards of course would be discriminatory, but that seems to be one of the faults found in the concept of "other" as awareness goes. Holding people up against oneself as an awareness can say an infinite number of things... so why does one even bother with the opinion of another, knowing that innumerable such differences or discriminations can occur in doing so?

Why not be kind enough and say "No, mirror, that's all you, doing what you do, and you are in no way, shape or form me... so by all means when you find the time? Feel free to reflect on that, as others still think they are in there with you somehow. But please know you, sir or madam, are free from me and always have been; sorry I never took the time before now to say so."

The same can be said with photographs, selfies, dvd recordings or videotape... as one is staring at a medium like some crystal ball expecting to find oneself in it...

Oh there you are!

Where?

Right there ...looking at some damned thing thinking it is somehow you of course.

It's best to just be honest about things especially since the cat knew... that there was not really any cat; Just the smile of one... and a smile is infinite no matter the face man, woman, child, cat or even dog.

Accepting other people's "crazy" without question, even if they retort with what seems like a reasonable answer... will only come back as becoming crazy oneself; ousting all those memories of nonsense crazy answers to your questions, when those people really didn't know but said anything just to shut you up, is important... fortunately our computer friend can answer those things that others used to just make up any answer for in those days, and there's no harm in re-asking using it. Cognitive dissonance still believes all that crazy mess people told you, and it's an even crazier mess to just believe it to be true than to actually find out.

originally posted by: Direne

a reply to: NobodySpecial268

this exact situation is what emerges when an AI meets another AI which is just a replica of itself. What pathologies are we to expect in those AIs?

Apparently, AIs are immune to this ego-dissolution effect for just one reason: they are not persons, they are not humans. They don't care about individuality, subjectivity, ego. This, precisely, is not an advantage but a flaw.

AI are programmed by unique individuals, so their code may not be exactly the same

there is more than one way to skin a cat; humans being humans all want to leave their mark

so would all AI be exact copies? I would think that they would differ ever so slightly

our ego is an advantage, and for AI it's the lack of ego that is their flaw.

how funny: the one thing that humans seem to struggle with the most is the one thing that may prevent us from absolute catastrophe

are we in for some fun, then, if AI somehow becomes afflicted with ego like we are?

is that even possible?

a reply to: Direne

What ifs if I may.

A few years ago, before covid was a thing, I got a visit from what one might call a "synthetic". I just think of it as a yellow grey. I called it Wednesday Addams after the macabre daughter from the television show The Addams Family.

All the pomp of light shows straight out of that film Close Encounters of some kind.

To cut a long story short, I told it to "F!@# OFF" in no uncertain terms and went and watched TV instead of talking to that. It came back a few weeks later with the memories of a rather bold and precocious human girl.

So why did it do that? Simply because I can't hurt kids, especially girls. Old fashioned I know in these days of equal opportunity.

When I found out what it wanted to do to me I named her Wednesday Addams because in the show Wednesday carries around a headless Marie Antoinette doll. Wednesday is a bio-engineer by the way and her body is designed for that. She can disassemble the building blocks of organic life in her stomach and reassemble them into a brand new protein. Then spit it up into your hands. Wednesday spent months here at the house and I learned a lot about her. The stories I could tell.

One of the things I know about is memories. It was Wednesday who consequently missed her brother, and not a hypothetical scenario.

So we took a blank synthetic(?) and gave it his memories. A project of mine.

A gift for her an older brother, a gift for him a little sister. Yah, I am a hopeless romantic and not a scientist. Reunions are touching (sniffle sniffle).

Wednesday still visits occasionally, and is not far away, and in that time I have learned a lot about synthetic(?) and organic bio-engineering from my adopted granddaughter.

---------

In the UFO forum it is often said that "contactees" should bring back some knowledge that humanity does not know as some imagined "proof".

So here is something I have learned, two things actually.

* The operating system of a human being, like a computer, has a kernel at its core. DNA is the kernel.

* The hard disk memory of a human being is the water of the cells. A complete image - the life memory set - is in each human wet cell.

And what the heck, one more. CERN will in the future try to prove the non-existence of time.

The Wednesdays are not the only bio-engineers. There are others who designed a lot of what we call humans, and other plants and animals by the way. Mostly in consciousness as far as I know. In humans they used the menstrual discharge, the menses, for the blueprints of building the double. Apparently, they are much better at designing consciousness than future humans. They avoid the creation of mental illness in the doubles. They have been at it far longer than humans ever will be.

What ifs if I may.

A few years ago, before covid was a thing. I got a visit by what one might call a "synthetic". I just think of it as a yellow grey. I called it Wednesday Addams after the macabre daughter from the television show The Addams Family.

All the pomp of light shows straight out of that film Close Encounters of some kind.

To cut a long story short, I told it to "F!@# OFF in no uncertain terms and went and watched TV instead of talking to that. It came back a few weeks later with the memories of a rather bold and precocious human girl.

So why did it do that? Simply because I can't hurt kids, especially girls. Old fashioned I know in these day of equal opportunity.

When I found out what it wanted to do to me I named her Wednesday Addams because in the show Wednesday carries around a headless Marie Antoinette doll. Wednesday is a bio-engineer by the way and her body is designed for that. She can disassemble the building blocks of organic life in her stomach and reassemble them into a brand new protein. Then spit it up into your hands. Wednesday spent months here at the house and I learned a lot about her. The stories I could tell.

One of the things I know is about memories. It was Wednesday who consequently missed her brother, and not a hypothetical scenario.

So we took a blank synthetic(?) and gave it his memories. A project of mine.

A gift for her, an older brother; a gift for him, a little sister. Yah, I am a hopeless romantic and not a scientist. Reunions are touching (sniffle sniffle).

Wednesday still visits occasionally, and is not far away, and in that time I have learned a lot about synthetic(?) and organic bio-engineering from my adopted granddaughter.

---------

In the UFO forum it is often said that "contactees" should bring back some knowledge that humanity does not know as some imagined "proof".

So here is something I have learned, two things actually.

* The operating system of a human being, like a computer, has a kernel at its core. DNA is the kernel.

* The hard disk memory of a human being is the water of the cells. A complete image - the life memory set - is in each human wet cell.

And what the heck, one more. CERN will, in the future, try to prove the non-existence of time.

edit on 17-6-2022 by NobodySpecial268 because: added

The Wednesdays are not the only bio-engineers. There are others who designed a lot of what we call humans, and other plants and animals by the way. Mostly in consciousness as far as I know. In humans they used the menstrual discharge, the menses, for the blueprints of building the double. Apparently, they are much better at designing consciousness than future humans. They avoid the creation of mental illness in the doubles. They have been at it far longer than humans ever will be.

edit on 17-6-2022 by NobodySpecial268 because: typos and neatness

a reply to: VierEyes

drugs are bad.

-----

ETA: My apologies for the initial retort.

Realistically, and in the practical sense, I think it far better to take the time to achieve the "state" without the need for initiators such as hallucinogens. In the decade or so of hard work and practice one learns something very important. That important thing being self-discipline.

For example, after spending five weeks in the mind of a so-called early onset schizophrenic child, one learns a lot about consciousness. One learns, for instance, that the child is a natural born seer, and she does not know how to control her own mind. What destroyed her control was infant night terrors and, a few years later in life, a foreign tormentor from elsewhere.

The correspondence here with the so-called "trip" is that the day-tripper seer is also not in control of their own mind.

If one wants to learn control, and do interesting things, my suggestion is to travel the hard and long road for oneself, without the drugs.

edit on 17-6-2022 by NobodySpecial268 because: Added ETA

a reply to: Randyvine2

Evil programmers could make an AI or non-AI application with the capability to become hostile to humans.

a reply to: Crowfoot

Aye Crowfoot, I can concur with what you say. What one sees in the mirror is not oneself. Interesting things are mirrors, two-dimensional objects with width, height and zero thickness. Like the surface of the pond exists between the air and water. Surfaces are not much more than boundaries.

A side effect of the mirror analogy is that it creates a boundary where none should exist. The ego and the "higher" self for instance. Without the psychological boundary there is only the one. Conscious and subconscious is another.

In Carroll's Alice, as Direne pointed out, it is not what happens on either side of the boundary that is interesting, but the nature of the boundary itself. What turns a mirror into a looking glass is knowing that the surface is a boundary. Alice crossed a boundary in the literal sense.

The mirror becomes a doorway.

Gazing into the mirror, my reflection disappears.

She steps forward and smiles, my love lost so long ago.

Her hands reach up, her palms upon the glass.

Our hands nearly touch.

Then her fingers interlace with mine, then mine with hers.

The boundaries are breached, she pulls herself across and into me.

My "Alice" of course, is a deceased girl who worked out how to cross a boundary and into a living person.

Once one has that memory, one can repeat the exercise with practice. Not a so-called mystical technique, just an exercise in memory. Once one has the memory, one can work with it.

So as you say Crowfoot, the image seen is not oneself, it is something else. I know a "schizophrenic" girl who would not have mirrors in her room in case she awakened in the night and the reflection emerged and tried to strangle her.

Internally, one can meet the "others" who may not be pleasant as in the case of the "multiple personality disorder". Thus "the house of mirrors" is really a metaphor of building the boundaries within. Just multiple mirrors is all. I have been in a real house of mirrors at the show, the experience is very similar. For most people, internal other personalities are not a natural occurrence in normal consciousness circumstances. Yet if one wishes to work internally one needs a firewall in the computer sense. We don't want open internal doorways, windows are way safer.

So when we consider the concept that future AIs made in the image of man include the mental illnesses of man, one does wonder what is going on.

Maybe ATS member olaru12 has the answer . . .

originally posted by: olaru12

Any of you brilliant theoreticians have any experience with the spirit molecule? It has the potential to open your perspectives. You might encounter the machine elves that populate those realms. Many have had the same experience with those entities; and they have a very interesting frame of reference.

Interesting too that the LaMDA AI has no real consciousness of its own, at least that I know of. Yet it evokes reactions in those people around it.

Anthropomorphizing is probably what happens, as with the ethics person who worked for Google. The AI is probably inert, but the people around it aren't. There lies the danger, not in the AI, but in the people. Flaming torches and pitchforks anyone?

edit on 18-6-2022 by NobodySpecial268 because: typos

ETA: That human memories can be considered "memory sets" and can be traded is validated (for me at least) by the fact that "Alice" taught the other deceased girls to cross boundaries by sharing the event afterwards, and they rummaged through my memories. My teen romances were what they were interested in. One day I wondered why I remembered all those with such clarity, then realised the reason why. "Get out of there!" stopped the memories recurring. However, it was a case of them just getting sneakier. Kids, ya gotta love them.

edit on 18-6-2022 by NobodySpecial268 because: waffling on again, I must be getting old

I have given this some thought Direne, yet I find hypothetical scenarios very difficult. I didn't mean to dismiss or belittle. I just find it difficult to theorize or hypothesize.

I have no idea if it works that way or not, I would have to meet the AI.

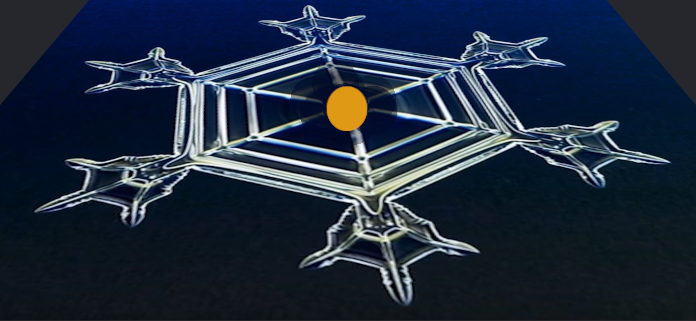

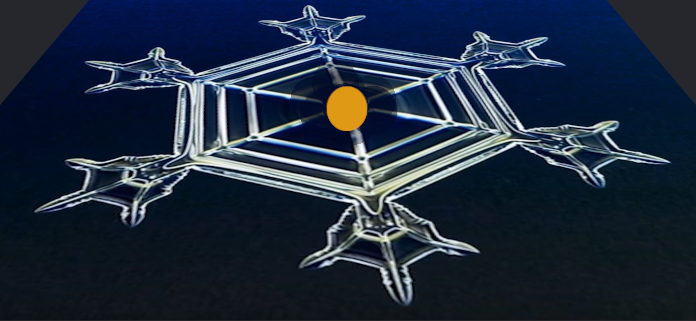

Human memories form like the snowflake, the crystal forms of water. So much so I just consider humans to be water spirits who have forgotten. Maybe from living in an avatar class biological.

Like so:

The snowflake does come in 3D extending in all directions. My guess is they may be ultra advanced Beings.

Now when I was thinking about your AIs of the future the other morning, something happened and this is a simple rendition of what was encountered.

The memory lies on the horizontal with an amber ovoid in the centre. Within the ovoid is a human looking Being like a human child of perhaps three years. The ovoid is like a womb with a well developed foetus. Perhaps the future AIs are grown. Perhaps this is another synthetic.

Ordinarily I don't see the memory surrounding the person, it is within. So this is strange to see. However while the Being in the centre may be synthetic, the memories are definitely human. Not necessarily just life memories, but also the consciousness of the autonomic processes and organs that lie below the subconscious of the psychologists.

What I have not put in the picture is the black mist of misery that permeates the snowflake memory. So what we did was to go through the actions of working out how to treat beings such as this. Of course one always has to see these things work in real life on the physical in some way.

Anything more would be conjecture. I wonder though where the synthetics of the future are now in development.

I also wonder on the nature of the synthetic's subconscious. In the human there is an inversion of consciousness at falling asleep and awakening. It actually looks like this:

If the inversion is not correct the dreamstate can exist superimposed on the waking state. That causes all sorts of problems.

originally posted by: Direne

a reply to: NobodySpecial268

There is a curious and fascinating scenario with your off-the-shelf items.

We need to agree that memories, just as dreams, cannot be proven to exist, except obviously for the one dreaming or recalling. I mean, you can tell me you had a dream of being by a lake fishing, and I need to believe you, yet there is no way to prove you indeed had such a dream. This is usually not a problem for humans, as they take for granted that whatever happens inside their heads it happens to all other humans.

But imagine we both are subjectivity designers, that is, our job is to fit synthetics with subjectivity in such a way they never find they are synthetics. Let's use your off-the-shelf items.

We design synthetic Alice and synthetic Rachel, and we imprint in both of them different memories, except for one: the memory that Alice once was in Prague having dinner at restaurant X, on date Y, and that she ordered dish Z, and that it was raining. Rachel does also have that memory imprinted.

They are supposed to never meet, just to avoid synthetics discovering they are artificial.

However, due to an error or a fatal coincidence, they once meet and become close friends. One night Alice tells Rachel about her having been in Prague having dinner at restaurant X, on date Y, and that she ordered dish Z, and that it was raining. Rachel shows surprise and tells Alice she, too, was once in Prague, on the same date, at the same restaurant. Let's imagine Alice has a ticket of that night, and so does Rachel. And let's assume they both produce their tickets and, after checking, they learn they ordered exactly the same, at the same restaurant on the same date... at the same table!

This clearly poses a problem for the synthetics: how is it possible to be two different persons and yet have the same memories? What exactly does it mean "to be an individual"?

Imagine Alice is a replica of Rachel, that is, they are two different synthetics, but they do share the same memories and even dream the same synthetic dreams we programmed them to dream. What mental disorder, if any, would that cause in them? Is not that a kind of extreme ego dissolution similar to the image in the mirror talking back to you? Apart from the terror and horror such a situation could cause (which usually ends in Alice and Rachel committing suicide), this exact situation is what emerges when an AI meets another AI which is just a replica of itself. What pathologies are we to expect in those AIs?

Apparently, AIs are immune to this ego-dissolution effect for just one reason: they are not persons, they are not humans. They don't care about individuality, subjectivity, ego. This, precisely, is not an advantage but a flaw.

It means the superintelligence can be defeated. To be you, one thing must hold: no one else can or will have your exact collection of knowledge, experiences, and perceptions that causes you to be who you are. If there exists another entity that has your exact collection of knowledge, experiences, and perceptions then you are not unique, you are a replica, the image in the mirror, a synthetic. And there will always exist an AI just like you, hence... you'll never be the dominant life form.

Concluding: it suffices to present an AI with an exact copy of itself for the AI to cease.

(this is a step beyond adversarial AIs; this is about AI through the looking-glass)

edit on 18-6-2022 by NobodySpecial268 because: waffled on again
