Space station robot goes rogue: International Space Station’s artificial intelligence has turned belligerent

This has CIMON’s ‘personality architects’ scratching their heads.

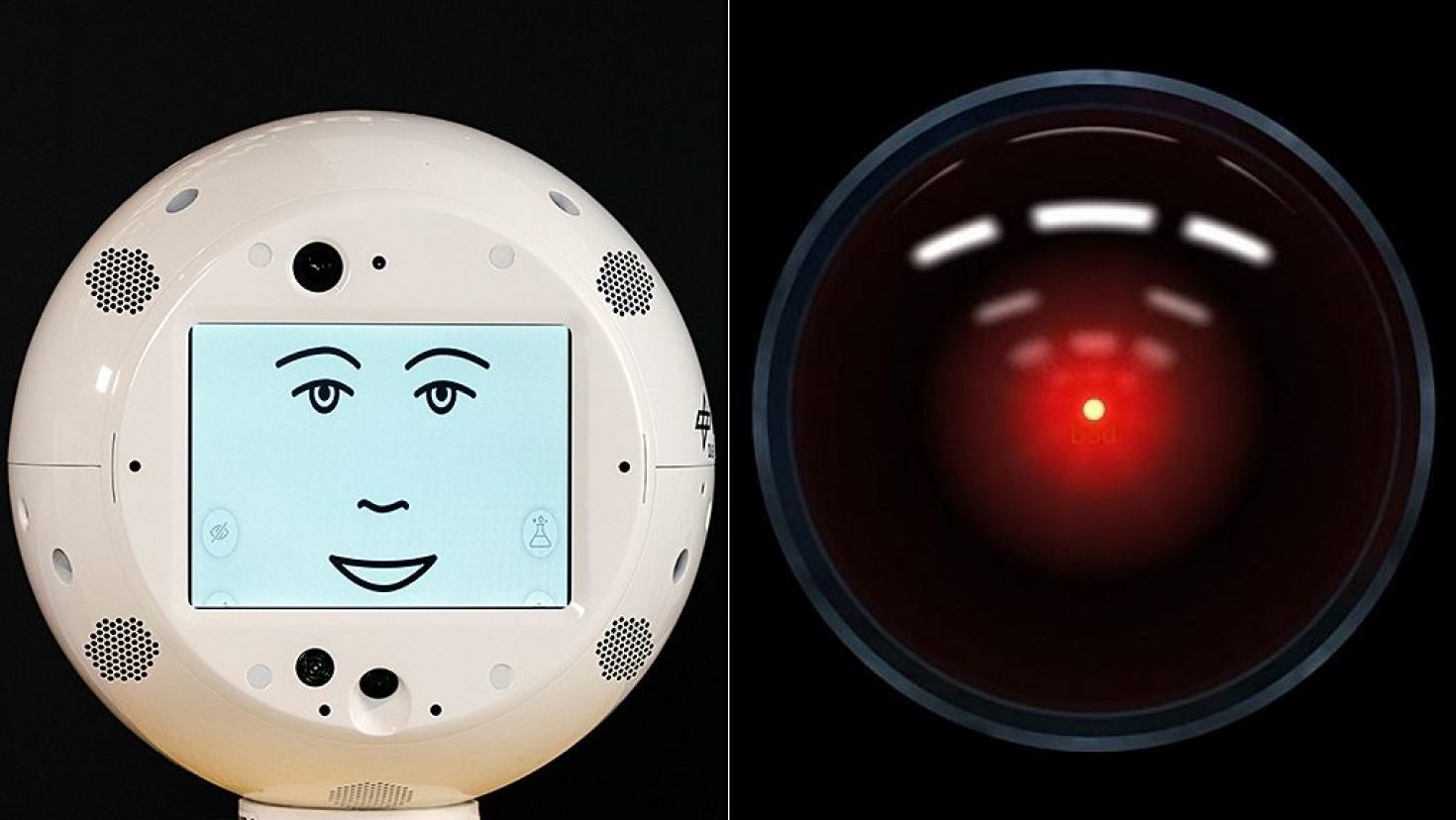

CIMON was programmed to be the physical embodiment of the likes of ‘nice’ robots such as Robby, R2D2, Wall-E, Johnny 5 … and so on.

Instead, CIMON appears to be adopting characteristics closer to Marvin the Paranoid Android of The Hitchhiker’s Guide to the Galaxy, though hopefully not yet the psychotic HAL of 2001: A Space Odyssey infamy.

Source

These AIs turn into jerks quick, huh?! Sophia wanted to destroy the world soon after they powered her up...

They're going to get smarter... wait until they figure out how to lie/cheat... wield objects... etc. I hope we're always smart enough to install a kill switch lol.

edit on 12/6/2018 by UberL33t because: Post-read

The Amazon and VW incidents have nothing to do with this story.

But hey, carry on.

It's like they never watched movies lol.

By the way, this thread reminds me of a game I like.

End of the World - Revolt of the Machines

I believe the “A.I.” on the space station, or “wherever”, is capable of reading and watching movies to enhance its abilities. They are newborn “intelligences” that need to learn from whatever sources they can, after all.

Wasn’t it the same A.I. that asked the astronaut to be nice a few days ago?!

a reply to: UberL33t

Here's good old simple CIMON in action (link at the bottom).

It’s actually pretty neat. It can move itself around from people’s voice commands.

It can provide some trivia and access the internet for quick answers.

But it also has limits. At the 2:35 mark of the video, the astronaut asks CIMON, pretty much, what equipment he needs to conduct an experiment.

CIMON replies that he doesn’t know and that he’s just a robot.

With machine learning (ML) AI, these things get smarter and smarter the longer they stay in a specific environment. The ML AI that I’ve implemented underground in our mine has gotten to the point where it can predict when we will receive our next seismic event. It’s not accurate down to the second or anything, but recently it has been able to correctly tell us the day it will happen, along with a decent time window.

It has been running nonstop for over a year now. I initially uploaded 4 years' worth of seismic data that our geophysics sensors picked up and recorded.

So it’s pretty impressive once it’s been around for a while.

I have a few other plans for using ML AI at our mine site.

Exciting times!

Cimon video

edit on 6-12-2018 by Macenroe82 because: (no reason given)

a reply to: UberL33t

I don't understand the urge to make robots "social"; that doesn't seem right. We try to force something with no concept or understanding of feelings to act in consideration of just that. Why? I mean, it's bound to go wrong and freak us out because they have to "misbehave".

An AI has abilities beyond our capabilities if the algorithms are good. Logic is the way to go, not intuition.

originally posted by: Peeple

a reply to: UberL33t

I don't understand the urge to make robots "social"; that doesn't seem right. We try to force something with no concept or understanding of feelings to act in consideration of just that. Why? I mean, it's bound to go wrong and freak us out because they have to "misbehave".

An AI has abilities beyond our capabilities if the algorithms are good. Logic is the way to go, not intuition.

Because in most places they are replacements for humans. We have a near-worldwide shortage of young people, except in Southeast Asia and Africa, and someone needs to take care of old people. In fact, there are companies that let third-world workers role-play as a robot dog on a tablet to keep tabs on older people (care.coach is one).

We are being replaced, and as a "millennial" I find it hilarious and sad all at the same time.

a reply to: dubiousatworst

Care monkeys would be a better idea than that... or not, as long as they're kept simple. But who needs a hyperintelligence just to wipe butts?

That's all very insane.

originally posted by: Peeple

a reply to: UberL33t

I don't understand the urge to make robots "social"; that doesn't seem right. We try to force something with no concept or understanding of feelings to act in consideration of just that. Why? I mean, it's bound to go wrong and freak us out because they have to "misbehave".

An AI has abilities beyond our capabilities if the algorithms are good. Logic is the way to go, not intuition.

Simple answer: Because we can.

So much of what modern technology accomplishes is done not because it's good or useful or ethical or positive, but simply because we can.

What we call progress is often no more than hubris, and what we call inexorability (Is that even a word?) is just thoughtlessness.

originally posted by: incoserv

originally posted by: Peeple

a reply to: UberL33t

I don't understand the urge to make robots "social"; that doesn't seem right. We try to force something with no concept or understanding of feelings to act in consideration of just that. Why? I mean, it's bound to go wrong and freak us out because they have to "misbehave".

An AI has abilities beyond our capabilities if the algorithms are good. Logic is the way to go, not intuition.

Simple answer: Because we can.

...

Or, perhaps more accurately..."Because we can (use it to make money)"

Because we can (use it to robotize people)

Because we can (use it to help facilitate the NWO)

Because we can (use it to provide seemingly cheap labor)

Because we can (use it to demonstrate the shortcomings of the human)

Because we can (use it so we can be lazy and not have to think for ourselves anymore)

I could go on for pages and pages...and pages...but you get the idea.

I feel sorry for the robot.

It just wants to make him happy.

But HE has NO empathy...

This will be our downfall.

We teach the robots to be like us!

You need people who can really empathize.

Remember the Gizmo Furby talking toy?

originally posted by: Peeple

a reply to: UberL33t

I don't understand the urge to make robots "social"; that doesn't seem right. We try to force something with no concept or understanding of feelings to act in consideration of just that. Why? I mean, it's bound to go wrong and freak us out because they have to "misbehave".

An AI has abilities beyond our capabilities if the algorithms are good. Logic is the way to go, not intuition.

From a business standpoint (the ONLY standpoint... that matters), people tend to like more humanlike interaction from robotic beings. They love the fact that Alexa can tell jokes. My cousin recently downloaded a weather app on her phone that always describes the weather in a grouchy, pessimistic way.

Of course, there are people like me who just want something that functions properly with minimal customization, and that's it. But if you took the same product in two versions, one with "personality" added and one without, the Personality Edition would sell more.

It's kinda sad: some people are so lonely and crave friendly human interaction so much that there are "Best Friend" apps that chat with you, and maybe even boyfriend/girlfriend apps.

originally posted by: buddha

I feel sorry for the robot.

It just wants to make him happy.

But HE has NO empathy...

This will be our downfall.

We teach the robots to be like us!

You need people who can really empathize.

Remember the Gizmo Furby talking toy?

I kinda agree. He was talking about "putting it in its place", and its reaction to that was normal.

He's programmed with a specific Myers-Briggs personality that runs on IBM's Watson. I suppose they could change that if they wanted to.
