reply to post by funkster4

That's where I believe you are wrong: what you are explaining is the conventional PTM methodology. I am saying that you can have similar results using other variables with the "light" variable.

That makes no sense.

That's like saying there's software that will make a 3D image of the Mona Lisa from a single image (that is, from the painting itself).

funkster4

I am saying that you can have similar results using other variables with the "light" variable.

Explain the process whereby you achieve those results.

reply to post by SkepticOverlord

I can't help but draw similarities between this OP's debate style and the one from:

www.abovetopsecret.com...

And then peacefulpete. They all registered around the same time and all have the same misunderstandings of image editing.

Originally posted by SkepticOverlord

funkster4

The image I used shows a very visible smudge, contrary to the image you refer to. Again, I am not aware of NASA disclaiming the image with the smudge:

You've provided no evidence that the image you used has a provenance traced back to NASA or the Navy.

Images that do have such provenance lack the smudge.

It's time to either say you've been fooled, or your deception failed.

...again, last I heard of, NASA's explanation was that the smudge was due to "data loss"...

"Images that do have such provenance lack the smudge."

What if they did a better job later on? Just asking...

"It's time to either say you've been fooled, or your deception failed"

I resent the fact that you imply I am trying to deceive anyone here. I really do.

Listen, I understand that this is your website, and that it probably costs money and hassles of all sorts, and I would not want to be in your place running this. So probably, in the end, it is legitimate you do what you feel is right from your point of view.

That's just not what I expected, from the recommendations that were given to me regarding this forum...

funkster4

...again, last I heard of, NASA's explanation was that the smudge was due to "data loss"...

--- sigh ---

"Last I heard"

Where? What NASA web page, press release, or document? What direct quote attributed to the curator of these images at the Navy or NASA? Where? Please, evidence.

I resent the fact that you imply I am trying to deceive anyone here. I really do.

You are establishing the need for the implication all on your own.

That's just not what I expected, from the recommendations that were given to me regarding this forum...

If you were told that the members and staff of this website would accept crap evidence with amateurish photo manipulation as evidence of anything other than same, then YOU have been fooled.

reply to post by funkster4

...again, last I heard of, NASA's explanation was that the smudge was due to "data loss"...

Start with citing that source

Originally posted by SkepticOverlord

funkster4

I am saying that you can have similar results using other variables with the "light" variable.

Explain the process whereby you achieve those results.

...quite simple:

*choose a source image

*apply to it any conventional settings (lighting, sharpness, contour, contrast, etc)

*save the resulting iteration

*interpolate the new iteration with the source image: you are now getting a new (second) iteration which contains both the information of the source and the data content of the first iteration. This second iteration (the third image in the database) can now be interpolated with either the original image or the first iteration, etc., enriching the reference database ad infinitum. This goes on and on, ultimately sorting out objective data from noise by mere iteration. I think I explained the use of frequency as a filter.

Let me get a bit technical here as to how the process operates:

-you need to save all of the first 10 iterations derived from the source, and regularly reinject them into the data loop

-most importantly, you need to regularly reinject the original source image into the loop: I would recommend you do this at least every 5 iterations until you have a database of 50 iterations, and that you still do so regularly afterwards

-when processing images (whatever the type), different interpretations can manifest themselves during the process: never discard any of them a priori. I recommend you open sub-files tracking specific patterns, and "feed" them as described. What you will observe is that "freak images" will be erased by the process, simply because they are not statistically supported. You will see what looked like promising patterns dissolve as they are cross-checked again and again against the ever-enriched database.

The main potential obstacle to using this methodology efficiently lies in the potential bias of the observer: that is why frequently reinjecting the original source image into the data loop is crucial.

I believe that most of the process could be automated, which could make it easier to use and to understand.

edit on 28-8-2013 by funkster4 because: (no reason given)
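
For anyone who wants to try automating the loop described above, here is a minimal sketch on a toy 1-D "image". It is not funkster4's actual tooling: the function names, the contrast adjustment, and the 50/50 blend standing in for "interpolate" are all assumptions made for illustration. The only parameters taken from the post are the 50 iterations and the re-injection of the source every 5 iterations. The sketch also checks one property of the loop: because every step is computed from the source alone, two different scenes that were captured as the same source image come out of the loop identical.

```python
# Toy version of the described loop on a 1-D "image" (a list of 0-255 ints).
# adjust_contrast, blend, run_loop and capture are hypothetical names.

def adjust_contrast(img, gain=1.5, pivot=128):
    """Stretch values around the pivot and clip to the 0-255 range."""
    return [min(255, max(0, round(pivot + gain * (p - pivot)))) for p in img]

def blend(a, b, weight=0.5):
    """Pixel-wise weighted average of two images of equal length."""
    return [round(weight * x + (1 - weight) * y) for x, y in zip(a, b)]

def run_loop(source, iterations=50, reinject_every=5):
    """Adjust, blend with the previous result, and periodically re-inject the source."""
    database = [source]
    current = source
    for i in range(1, iterations + 1):
        adjusted = adjust_contrast(current)
        # Every few iterations blend with the source, otherwise with the last result.
        partner = source if i % reinject_every == 0 else database[-1]
        current = blend(adjusted, partner)
        database.append(current)
    return current

def capture(scene):
    """Toy camera: quantizes a finer-grained scene to 8 gray levels."""
    return [(v // 32) * 32 for v in scene]

scene_a = [5, 40, 70, 100, 130, 165, 200, 230]
scene_b = [25, 60, 90, 120, 150, 185, 215, 250]  # a different scene

source_a = capture(scene_a)
source_b = capture(scene_b)

print(source_a == source_b)                      # True: the captures are identical
print(run_loop(source_a) == run_loop(source_b))  # True: so are the loop outputs
```

Whatever distinguished the two scenes was lost at capture, and no number of iterations brings it back. Swapping in other adjustments changes the output but not this property, as long as each step only uses the source and images derived from it.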

reply to post by funkster4

*interpolate the new iteration with the source image: you are now getting a new (second) iteration which contains both the information of the source and the data content of the first iteration.

There is no more information available than that which is contained in the original image.

Adjusting lighting (whatever that means), contrast, etc. provides no new information. Fiddling with sharpness and contours synthesizes information which was never present in the first place. At best all you are doing is accentuating compression artifacts. At worst you are inventing information which did not exist in the original image.

edit on 8/28/2013 by Phage because: (no reason given)

reply to post by funkster4

You are getting a new image yes, but no new data. All you are doing is creating fake data.

funkster4

*interpolate the new iteration with the source image: you are now getting a new (second) iteration which contains both the information of the source and the data content of the first iteration. This second iteration (the third image in the database) can now be interpolated with either the original image or the first iteration, etc., enriching the reference database ad infinitum. This goes on and on, ultimately sorting out objective data from noise by mere iteration. I think I explained the use of frequency as a filter.

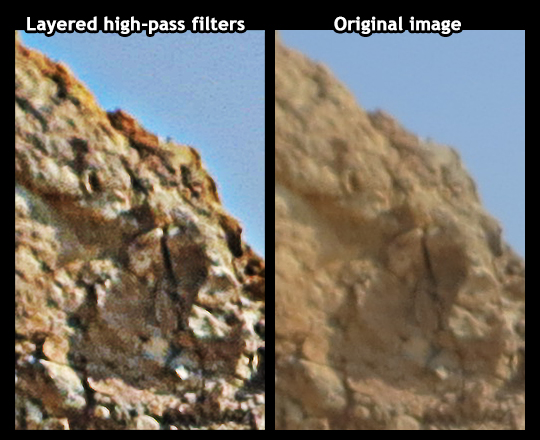

You're using pseudo-techical jargon/gibberish to describe an absurdly insane cycle of arbitrary adjustments. It's wrong, and the polar opposite of what image analysis experts would consider. Here's an example what I've done to enhance a portion of a RAW original digital camera image in the Crete UFO thread:

This was done to illustrate the technique on "known" visible data as a context for the same being applied to the supposed "UFO"

The above was done with a custom Photoshop actionScript I wrote specifically for enhancing images. I'd never use it on anything other than an original RAW, TIFF, PNG, or 100% quality JPEG. The basis for my custom script is from direct conversations with image enhancement experts on the Adobe Photoshop product team... combined with my years of experience as a programmer and expert with Photoshop since version 2.0.

What you're describing is nothing even close to polynomial texture mapping. It's an ad-hoc layering of arbitrary effects with subjective input to achieve predetermined results. It has nothing to do with that single variable of light you've been referring to.

edit on 28-8-2013 by SkepticOverlord because: (no reason given)
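
For context on why the light variable matters: conventional PTM models each pixel's luminance as a biquadratic function of the projected light direction (lu, lv) with six coefficients, and fits those coefficients from several photos taken under different, known light directions. The sketch below is only an illustration of that model, with made-up coefficient values and a hypothetical function name. It shows two surface patches that look identical in one photo and only differ once the light is moved.

```python
# Per-pixel PTM model: luminance as a biquadratic in the projected light
# direction (lu, lv), with six coefficients a0..a5 per pixel.
# The coefficient values below are invented for illustration.

def ptm_luminance(coeffs, lu, lv):
    """Evaluate a0*lu^2 + a1*lv^2 + a2*lu*lv + a3*lu + a4*lv + a5."""
    a0, a1, a2, a3, a4, a5 = coeffs
    return a0 * lu * lu + a1 * lv * lv + a2 * lu * lv + a3 * lu + a4 * lv + a5

flat_patch = (0.0, 0.0, 0.0, 0.0, 0.0, 0.5)       # ignores light direction
tilted_patch = (-0.5, -0.5, 0.0, 0.3, 0.1, 0.5)   # responds to light direction

# One photo, light straight on (lu = lv = 0): the two pixels look the same.
same_in_one_photo = (
    ptm_luminance(flat_patch, 0.0, 0.0) == ptm_luminance(tilted_patch, 0.0, 0.0)
)
print(same_in_one_photo)

# A second photo with the light moved tells them apart.
print(ptm_luminance(flat_patch, 0.5, 0.0), ptm_luminance(tilted_patch, 0.5, 0.0))
```

With six unknowns per pixel, one photo gives one equation per pixel, which is why the method depends on multiple captures under varied lighting rather than on repeated edits of a single capture.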

Originally posted by funkster4

Originally posted by SkepticOverlord

funkster4

I am saying that you can have similar results using other variables with the "light" variable.

Explain the process whereby you achieve those results.

...quite simple:

*choose a source image

*apply to it any conventional settings (lighting, sharpness, contour, contrast, etc)

*save the resulting iteration

*interpolate the new iteration with the source image: you are now getting a new (second) iteration which contains both the information of the source and the data content of the first iteration.

Each manipulation will either remove or add information. Each manipulation will, to some extent, distort the original image. Your above process sends shivers down the spine of anyone with a basic understanding of image processing. Sorry, this is just how it is.
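
A small sketch of both effects on toy pixel rows, assuming a simple contrast stretch and a 3-tap unsharp-style sharpen (the function names and parameters are hypothetical, not any particular editor's filters). The stretch clips distinct values to the same level, which removes information, and the sharpen creates values on either side of an edge that were never in the original.

```python
def stretch_contrast(pixels, gain=2.0, pivot=128):
    """Scale values away from the pivot, then clip to the valid 0-255 range."""
    return [min(255, max(0, round(pivot + gain * (p - pivot)))) for p in pixels]

def sharpen(pixels, amount=1.0):
    """Unsharp-style sharpening with a 3-tap box blur; end pixels are left as-is."""
    out = list(pixels)
    for i in range(1, len(pixels) - 1):
        blurred = (pixels[i - 1] + pixels[i] + pixels[i + 1]) / 3
        out[i] = round(pixels[i] + amount * (pixels[i] - blurred))
    return out

original = [10, 40, 60, 128, 200, 220, 250]
stretched = stretch_contrast(original)
print(stretched)  # [0, 0, 0, 128, 255, 255, 255]
print(len(set(original)), "distinct values before,", len(set(stretched)), "after")

edge = [100, 100, 100, 200, 200, 200]
print(sharpen(edge))  # [100, 100, 67, 233, 200, 200]
```

Seven distinct values collapse to three after the stretch, and nothing applied afterwards can tell 10, 40 and 60 apart again. The 67 and 233 next to the edge are the familiar sharpening halo: they look like detail but come from the filter, not the scene.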

this is starting to sound like intervention or something..."we all are here for you funkster, but all you are doing is creating fake data...its not real. the fake data makes you see things that aren't really there and it makes you think everything is OK, but its not. The lying and the abuse has to stop...you are staying out at all hours of the night and nobody knows where you are or what you are doing. the fake data is destroying you...its destroying... us....please..."

Originally posted by raymundoko

reply to post by funkster4

You are getting a new image yes, but no new data. All you are doing is creating fake data.

reply to post by ZetaRediculian

Can you recommend an appropriate facility?

We can all chip in for the enrollment costs.

reply to post by Phage

Originally posted by Phage

reply to post by ZetaRediculian

Can you recommend an appropriate facility?

We can all chip in for the enrollment costs.

Be nice.

reply to post by Phage

No, but I have been using real data for 635 days now. one day at a time.

edit on 29-8-2013 by ZetaRediculian because: (no reason given)

Originally posted by Deaf Alien

reply to post by funkster4

That's where I believe you are wrong: what you are explaining is the conventional PTM methodology. I am saying that you can have similar results using other variables with the "light" variable.

That makes no sense.

That's like saying there's a software that will make a 3D image out of Mona Lisa painting from a single image (to say that painting).

---

Actually that is correct...THERE IS SOFTWARE that can create a 3D image from a flat planar image.

This version is from Cornell University:

make3d.cs.cornell.edu...

Another 2D to 3D image converter was done by a University of British Columbia team, but I can't find the thesis that was presented. I'll continue the search for it though.

reply to post by Deaf Alien

Yes. But not the Mona Lisa.

Although the technology works better than any other has so far, Ng said, it is not perfect. The software is at its best with landscapes and scenery rather than close-ups of individual objects. Also, he and Saxena hope to improve it by introducing object recognition. The idea is that if the software can recognize a human form in a photo it can make more accurate distance judgments based on the size of the person in the photo.

news.stanford.edu...

Cool coding but I don't think I'd rely on it too much.

edit on 8/29/2013 by Phage because: (no reason given)

reply to post by Phage

As you yourself and I have said... we need multiple scenes to create an image.

Extracting 3-D information from still images is an emerging class of technology. In the past, some researchers have synthesized 3-D models by analyzing multiple images of a scene.
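
The simplest case of the multiple-image approach mentioned in that quote is a two-camera stereo pair, where depth follows from how far a point shifts between the two views (its disparity). The sketch below uses the standard pinhole relation, depth = focal length x baseline / disparity. The rig numbers and the function name are assumptions for illustration only.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Pinhole stereo relation: depth = focal length * baseline / disparity."""
    if disparity_px <= 0:
        raise ValueError("no disparity, so no depth can be triangulated")
    return focal_px * baseline_m / disparity_px

# Assumed rig: 800 px focal length, cameras 0.1 m apart.
for disparity in (40.0, 20.0, 8.0):
    print(disparity, "px ->", depth_from_disparity(800.0, 0.1, disparity), "m")
```

A single image has no second view to measure a shift against, which is why single-image systems such as the one in the article have to estimate depth from learned cues instead of triangulating it.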
