
I put this in Social Issues and Civil Unrest because I personally feel this is an overlooked issue, not just with technology but one that affects us all socially and has led to unrest.


A lot of people may not even realize exactly what the problem is. They know there's a problem with modern technology: somewhere along the way, it went from being a tool to being used against us. Well, what happened?

The latter half of the 20th century, and the 21st century so far, have been shaped and molded, for better and worse, by one of the most versatile, powerful, equalizing, amazing pieces of technology ever invented by humanity.

The general purpose computer.

So what exactly is a general purpose computer?

cheapskatesguide.org...

What is a general purpose computer, and why is that important? The idea of a Universal Machine, to most people, seems grandiose, like something from science fiction. Perhaps "universal" overstates the case. Computers cannot provide light, energy, food or medicine, or transport matter. Yet they can help with all those things. They do so by a process known as computing, which transforms symbols, usually taken to be numbers. We can express problems as questions in these symbols and get answers.

The universal computer was a theoretical creation of mathematicians going back to Muhammad ibn Musa al-Khwarizmi, eponymous creator of the "algorithm" in the 9th century, and most famously Charles Babbage and then Alan Turing. Of those, only Turing lived to see a working computer built. In the 1940s, John von Neumann described the stored-program design that most digital computers still follow, and digital computers became reality.

It was Ada Lovelace who offered the first prophetic insights into the social power of programming as a language for creativity. She imagined art, music, and thinking machines built from pure code, by anyone who could understand mathematics. With a general purpose computer anybody can create an application program. That is their extraordinarily progressive, enabling power. Apps, being made of invisible words, out of pure language, are like poems or songs. Anybody can make and share a poem, a recipe, or an idea like a computer program. This is the beauty and passion at the heart of the hacker culture.

The most important part of this, I feel, is the last part there. General purpose computers are probably the most equalizing technology humanity has ever created. Anyone, anywhere, with access to a general purpose computer and electricity has the power to create so many different things, things that only a few decades earlier would have been impossible for the average person without a large amount of resources.

Computers enabled a huge explosion of creativity, technological advancement and global connectivity unparalleled in human history.

But something's gone wrong. The ability of computers to freely empower individuals is slowly being taken away. The general purpose computer is being locked up, sandboxed and managed under more and more layers of 'security' and surveillance.

General purpose computers enable unparalleled freedom and opportunity to those wanting to build common resources. The story of computing after about 2000 is the tale of how commercial interests tried to sabotage the prevalence of general purpose computers.

Smartphones occupy a state between general purpose computing and appliances which could be seen as a degenerate condition. Software engineers have condemned, for half a century, the poor modularity, chaotic coupling, lack of cohesion, side effects and data leaks that are the ugly symptoms of the technological chimera. As we now know, smartphones, being neither general purpose computers over which the user has authority, nor functionally stable appliances, bring a cavalcade of security holes, opaque behaviours, backdoors and other faults typical of machinery built according to ad-hoc design and a celebration of perversely tangled complexity.

Smartphones were originally designed around powerful general purpose computers. The cost of general use microprocessors had fallen so far that it was more economical to take an off-the-shelf computer and add a telephone to it than to design a sophisticated handset as an appliance (a so-called ASIC) from scratch. But the profit margins on hardware are small. Smartphones needed to become appliance-like platforms for selling apps and content. To do so it was necessary to cripple their general purpose capabilities, lest users retain too much control.

This crippling process occurs for several reasons, ostensibly sold as "security". Security of the user from bad hackers is one perspective. Security of the vendor and carrier from the user is the other. We have shifted from valuing the former to having the latter imposed on us. In this sense mobile cybersecurity is a zero-sum game: what the vendor gains, the user loses. In order to secure the vendor's right to extract rent, the user's freedoms must be taken away.

Recently, developer communities have been busy policing language in order to expunge the word 'slave' from software source code. Meanwhile, slavery is precisely what a lot of software is itself enabling.

Under the euphemism of 'software as a service', each device and application has become a satellite of its manufacturer's network, intruding into the owner's personal and digital space. Even in open source software, such as the sound editor Audacity, developers have become so entitled, lazy and unable to ship a working product that they alienated their userbase by foisting "telemetry" (a euphemism for undeclared or non-consensual data extraction and updates) on the program.

The second pillar of slavery is encrypted links that benefit the vendor. Encryption hides the meaning of communications. Normally we consider encryption to be a benefit, such as when we want to talk privately. But it can be turned to nefarious ends if the user does not hold the key. This same design is used in malware. Encryption is turned against the user when it is used to send secret messages to your phone that control or change its behaviour, and you are unable to know about them.

The manufacturer will tell you that these 'features' are for your protection. But once an encrypted link, to which you have no key, is established between your computer and a manufacturer's server, you relinquish all control over the application and most likely your whole device. There is no way for you, or a security expert, to verify its good behaviour. All trust is given over to the manufacturer. Without irony this is called Trusted Computing when it is embedded into the hardware so that you cannot even delete it from your "own" device. You have no real ownership of such devices, other than physical possession. Think of them as rented appliances.

The third mechanism for enslavement is open-ended contracts. Traditionally, a contract comprises established legal steps such as invitation, offer, acceptance and so forth. The written part of a contract that most of us think of, the part that is 'signed', is an agreement to some fixed, accepted terms of exchange. Modern technology contracts are nothing like this. For thirty years corporations have been pushing the boundaries of contract law to the point they are unrecognisable as 'contracts'.


So, if you managed to get through all that, you'll see that through a series of added hardware and software layers, device manufacturers have managed to turn general purpose computers into broken appliances designed to extract money from users. The devices are intentionally built to limit the power of users so profits can be made.


This has basically crippled human growth and ingenuity for the entire planet. What they have done to computers isn't just greedy and immoral; it has actually stifled human innovation.

The article speaks mostly about smartphones, but any device with a computer in it (consoles, phones, TVs, cars, etc.) is artificially limited to take control away from you and extract as much money from you as possible.

I want to talk a bit about ARM processors here.

en.m.wikipedia.org...

ARM processors are Reduced Instruction Set Computer (RISC) processors, used in pretty much all smartphones, the Raspberry Pi and various other devices that require lower power consumption than Complex Instruction Set Computer (CISC) processors like x86 (Intel, AMD).

There's a stark difference, however, between the way Intel/AMD chips and ARM chips are licensed and manufactured. This difference is essentially what has enabled device manufacturers to build locked-down, appliance-type devices.

www.anandtech.com...

AMD, Intel and NVIDIA all make money by ultimately selling someone a chip. ARM’s revenue comes entirely from IP licensing. It’s up to ARM’s licensees/partners/customers to actually build and sell the chip. ARM’s revenue structure is understandably very different than what we’re used to.

There are two amounts that all ARM licensees have to pay: an upfront license fee, and a royalty. There are a bunch of other adders with things like support, but for the purposes of our discussions we’ll focus on these big two.

Everyone pays an upfront license fee and everyone pays a royalty. The amount of these two is what varies depending on the type of license.
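To make that two-part structure concrete, here is a rough sketch of what a licensee pays under it. The fee, the royalty rate, and the assumption that the royalty is charged as a percentage of each chip's selling price are all hypothetical illustration values, not ARM's actual terms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LicenseTerms:
    """Hypothetical ARM-style license: a one-off fee plus a per-chip royalty."""
    upfront_fee: float   # paid once, in dollars (made-up figure)
    royalty_rate: float  # fraction of each chip's selling price (assumed model)


def total_cost(terms: LicenseTerms, chip_price: float, units: int) -> float:
    """Upfront fee plus the royalty owed on every chip the licensee ships."""
    if units < 0 or chip_price < 0:
        raise ValueError("units and chip_price must be non-negative")
    return terms.upfront_fee + terms.royalty_rate * chip_price * units


def cost_per_chip(terms: LicenseTerms, chip_price: float, units: int) -> float:
    """Total licensing cost spread across every unit shipped."""
    if units == 0:
        raise ValueError("need at least one unit to spread the cost over")
    return total_cost(terms, chip_price, units) / units


if __name__ == "__main__":
    terms = LicenseTerms(upfront_fee=5_000_000, royalty_rate=0.02)
    print(total_cost(terms, chip_price=20.0, units=10_000_000))
    print(cost_per_chip(terms, chip_price=20.0, units=10_000_000))
```

With those made-up numbers the licensee owes about $9 million, roughly $0.90 per chip. Either way, ARM's involvement ends at the license: the licensee builds and sells the chip, and decides how it is configured.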

Because of this, manufacturers are free to lock down their hardware as much or as little as they want. The big manufacturers, such as Qualcomm and Broadcom, ensure that chips used in Android and Apple phones, or by other big tech companies, are fully locked down. So they can do things such as:

slashdot.org...

Microsoft has released a security update that has patched a backdoor in Windows RT operating system [that] allowed users to install non-Redmond approved operating systems like Linux and Android on Windows RT tablets. This vulnerability in ARM-powered, locked-down Windows devices was left by Redmond programmers during the development process. Exploiting this flaw, one was able to boot operating systems of his/her choice, including Android or GNU/Linux.

Sadly, this is no longer limited to the world of low-powered ARM devices.

Over the last several years, these kinds of things have slowly been pushed into the hardware of even desktops and laptops.

Built directly into Intel and AMD platforms are, respectively, the Intel Management Engine and the AMD Platform Security Processor. They differ in detail but are functionally similar.

hackaday.com...

Over the last decade, Intel has been including a tiny little microcontroller inside their CPUs. This microcontroller is connected to everything, and can shuttle data between your hard drive and your network adapter. It’s always on, even when the rest of your computer is off, and with the right software, you can wake it up over a network connection. Parts of this spy chip were included in the silicon at the behest of the NSA. In short, if you were designing a piece of hardware to spy on everyone using an Intel-branded computer, you would come up with something like the Intel Management Engine.

Intel’s Management Engine is only a small part of a collection of tools, hardware, and software hidden deep inside some of the latest Intel CPUs. These chips and software first appeared in the early 2000s as Trusted Platform Modules. These small crypto chips formed the root of ‘trust’ on a computer. If the TPM could be trusted, the entire computer could be trusted. Then came Active Management Technology, a set of embedded processors for Ethernet controllers. The idea behind this system was to allow for provisioning of laptops in corporate environments. Over the years, a few more bits of hardware were added to CPUs. This was the Intel Management Engine, a small system that was connected to every peripheral in a computer. The Intel ME is connected to the network interface, and it’s connected to storage. The Intel ME is still on, even when your computer is off. Theoretically, if you type on a keyboard connected to a powered-down computer, the Intel ME can send those keypresses off to servers unknown.

How do you turn the entire thing off?

Unfortunately, you can’t. A computer without valid ME firmware shuts itself off after thirty minutes.

So, Intel and AMD chips come with a built-in microcontroller, put in (at least partly, in Intel's case) at the behest of the NSA. It has its own network stack and access to keyboard input, and it can't be removed without rendering your computer inoperable.

As if that weren't bad enough, every modern computer also comes with UEFI and Secure Boot.

So, what are those two things?

en.m.wikipedia.org...

The Unified Extensible Firmware Interface (UEFI) is a publicly available specification that defines a software interface between an operating system and platform firmware. UEFI replaces the legacy Basic Input/Output System (BIOS) firmware interface originally present in all IBM PC-compatible personal computers, with most UEFI firmware implementations providing support for legacy BIOS services. UEFI can support remote diagnostics and repair of computers, even with no operating system installed.
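The "software interface between an operating system and platform firmware" is easy to see for yourself on Linux, where the kernel exposes UEFI variables as files through efivarfs. The sketch below reads the SecureBoot variable. It assumes efivarfs is mounted at the usual /sys/firmware/efi/efivars and Python 3; each efivarfs file starts with a 4-byte little-endian attribute mask followed by the variable's data, which for SecureBoot is a single byte.

```python
import struct
from pathlib import Path
from typing import Optional, Tuple

# GUID the UEFI specification assigns to its global variables (SecureBoot, PK, KEK, ...).
EFI_GLOBAL_VARIABLE_GUID = "8be4df61-93ca-11d2-aa0d-00e098032b8c"
SECURE_BOOT_VAR = Path("/sys/firmware/efi/efivars") / f"SecureBoot-{EFI_GLOBAL_VARIABLE_GUID}"


def parse_uint8_efivar(raw: bytes) -> Tuple[int, int]:
    """Split an efivarfs file into (attribute mask, one-byte value)."""
    if len(raw) < 5:
        raise ValueError("expected 4 attribute bytes plus at least 1 data byte")
    (attributes,) = struct.unpack_from("<I", raw, 0)
    return attributes, raw[4]


def secure_boot_enabled(path: Path = SECURE_BOOT_VAR) -> Optional[bool]:
    """True/False as reported by firmware, or None if the variable isn't there
    (for example a legacy-BIOS boot, or efivarfs not mounted)."""
    try:
        raw = path.read_bytes()
    except FileNotFoundError:
        return None
    _, value = parse_uint8_efivar(raw)
    return value == 1


if __name__ == "__main__":
    state = secure_boot_enabled()
    print({True: "Secure Boot is on",
           False: "Secure Boot is off",
           None: "no UEFI SecureBoot variable found"}[state])
```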

And Secure Boot is:

arstechnica.com...

Since UEFI's first version (2.0, released in 2006), it has supported the use of digital signatures to ensure that UEFI drivers and UEFI programs are not tampered with. In version 2.2, released in 2008, digital signature support was extended so that operating system loaders—the pieces of code supplied by operating system developers to actually load and start an operating system—could also be signed.

The digital signature mechanism uses standard public key infrastructure technology. The UEFI firmware stores one or more trusted certificates. Signed software (whether it be a driver, a UEFI program, or an operating system loader) must have a signature that can be traced back to one of these trusted certificates. If there is no signature at all, if the signature is faulty, or if the signature does not correspond to any of the certificates, the system will refuse to boot.
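The rule in that last paragraph (no signature, a faulty signature, or a signature that doesn't trace back to a trusted certificate means no boot) can be modelled in a few lines. This is a toy: real firmware checks signatures with public-key cryptography, while here a stored SHA-256 digest stands in for the signature and certificates are plain Python objects. The "Example Distro Key" is hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional, Set


@dataclass(frozen=True)
class Certificate:
    name: str
    issuer: Optional["Certificate"] = None  # who signed this cert; None for a root


@dataclass(frozen=True)
class SignedImage:
    code: bytes
    signer: Optional[Certificate] = None  # None means the image is unsigned
    signed_digest: Optional[str] = None   # toy stand-in for a real signature


def sign(code: bytes, signer: Certificate) -> SignedImage:
    """Toy signing: record which cert vouched for exactly these bytes."""
    return SignedImage(code, signer, hashlib.sha256(code).hexdigest())


def traces_to_db(cert: Optional[Certificate], db: Set[str]) -> bool:
    """Follow issuer links upward until a certificate stored in firmware is found."""
    while cert is not None:
        if cert.name in db:
            return True
        cert = cert.issuer
    return False


def firmware_allows_boot(image: SignedImage, db: Set[str]) -> bool:
    if image.signer is None or image.signed_digest is None:
        return False  # no signature at all
    if hashlib.sha256(image.code).hexdigest() != image.signed_digest:
        return False  # "faulty" signature: bytes changed after signing
    return traces_to_db(image.signer, db)  # must chain to a trusted certificate


if __name__ == "__main__":
    db = {"Microsoft Corporation UEFI CA 2011"}               # certs held by firmware
    ms_ca = Certificate("Microsoft Corporation UEFI CA 2011")
    distro = Certificate("Example Distro Key", issuer=ms_ca)  # hypothetical key
    good = sign(b"hypothetical boot loader", distro)
    tampered = SignedImage(good.code + b"!", good.signer, good.signed_digest)
    print(firmware_allows_boot(good, db))               # True: chains to db
    print(firmware_allows_boot(tampered, db))           # False: bytes changed
    print(firmware_allows_boot(SignedImage(b"x"), db))  # False: unsigned
```

Note that the firmware never asks the user anything: whoever controls the list of certificates in db controls what boots.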


The problem with this is:

oofhours.com...

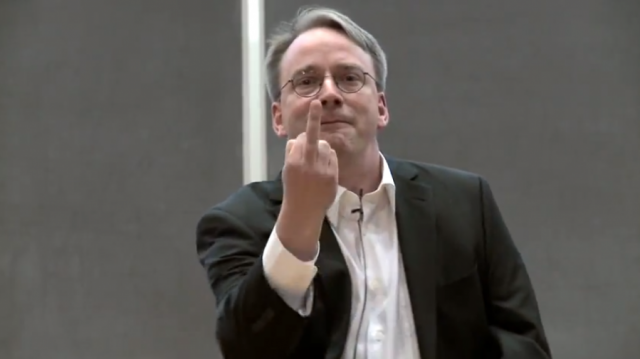

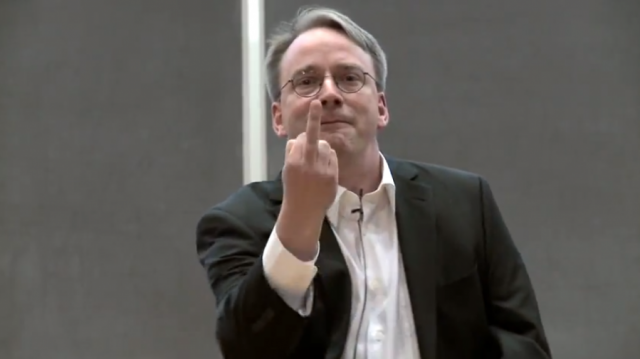


What certificates are in that list? Per the Microsoft documentation for running Windows on the device, there should be at least two:

Microsoft Windows Production PCA 2011. The Windows bootloader (bootmgr.efi) is signed using this, so this is what allows Windows (and Windows PE) to run.

Microsoft Corporation UEFI CA 2011. This one is used by Microsoft to sign non-Microsoft UEFI boot loaders, such as those used to load Linux or other operating systems. Technically, it’s described as “optional” but it would be unusual to find a device that doesn’t include it. (Windows RT devices, if you remember those, did not include this cert or any others, so as a result, they only ran Windows RT. We shall see what certs are included on Windows 10X devices…)

Who controls what can run on the device when Secure Boot is enabled? (As long as you can turn off Secure Boot, you always have the final authority.) As you can see from the above, it’s Microsoft and the OEMs. And since you probably don’t want to have to have an OEM- or model-specific boot loader, that effectively means it’s Microsoft. Only Windows binaries get signed with the “Windows Production” certificate, but anyone can get code signed using the “UEFI” certificate — if you pass the Microsoft requirements. You can get an idea of what those requirements entail from this blog post. It’s a non-trivial process because you are effectively having your code reviewed by Microsoft.
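Putting the two certificates from that quote side by side shows why the contents of db matter so much. A minimal sketch, assuming these are the only two certificates in play and that each loader is signed exactly as the quote describes; the function name and loader labels are made up for illustration.

```python
from typing import Dict, List, Set

WINDOWS_PCA = "Microsoft Windows Production PCA 2011"
UEFI_CA = "Microsoft Corporation UEFI CA 2011"

# Which certificate signs which kind of loader, per the oofhours quote.
LOADER_SIGNED_BY: Dict[str, str] = {
    "Windows bootmgr.efi": WINDOWS_PCA,
    "third-party loader (Linux etc.)": UEFI_CA,
}


def bootable_loaders(db: Set[str], secure_boot_on: bool = True) -> List[str]:
    """Loaders the firmware would start, given the certificates in its db."""
    if not secure_boot_on:
        # With Secure Boot off there is no signature check, so the owner decides.
        return sorted(LOADER_SIGNED_BY)
    return sorted(name for name, cert in LOADER_SIGNED_BY.items() if cert in db)


if __name__ == "__main__":
    typical_pc = {WINDOWS_PCA, UEFI_CA}
    windows_rt = {WINDOWS_PCA}  # RT devices lacked the UEFI CA, per the quote
    print(bootable_loaders(typical_pc))
    print(bootable_loaders(windows_rt))
    print(bootable_loaders(windows_rt, secure_boot_on=False))
```

Both certificates belong to Microsoft, so on a typical PC with Secure Boot on, the only route for a non-Windows loader runs through Microsoft's UEFI CA.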

So, essentially, on every computer sold since Windows 8 was released, any operating system booted with Secure Boot enabled must be approved by Microsoft. As Linus Torvalds (creator of the Linux kernel) put it, quite bluntly:

arstechnica.com...

If you want to parse PE binaries, go right ahead. If Red Hat wants to deep-throat Microsoft, that's *your* issue. That has nothing what-so-ever to do with the kernel I maintain. It's trivial for you guys to have a signing machine that parses the PE binary, verifies the signatures, and signs the resulting keys with your own key. You already wrote the code, for chrissake, it's in that #ing pull request.

Why should *I* care? Why should the kernel care about some idiotic "we only sign PE binaries" stupidity? We support X.509, which is the standard for signing.

Do this in user land on a trusted machine. There is zero excuse for doing it in the kernel.

Linus

Alright, I know this has been long. We're almost done. Let's just talk a bit about what's coming down the road.

Microsoft recently announced Windows 11 and said it will require a Trusted Platform Module (TPM) to be installed on the computer.

What's a TPM, you might ask?

en.m.wikipedia.org...

Trusted Platform Module (TPM, also known as ISO/IEC 11889) is an international standard for a secure cryptoprocessor, a dedicated microcontroller designed to secure hardware through integrated cryptographic keys.

Well, that's a rather bland description. So, what is it?

It's yet another small microcontroller, one you are not in control of, built into the motherboard, that stores the cryptographic keys and measurements used to verify the software installed on the computer.

The concerns include the abuse of remote validation of software (where the manufacturer—and not the user who owns the computer system—decides what software is allowed to run) and possible ways to follow actions taken by the user being recorded in a database, in a manner that is completely undetectable to the user.
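A more precise way to put "stores keys needed to verify software": during boot, each stage hashes the next one and "extends" a Platform Configuration Register (PCR) in the TPM, so the final PCR value summarises everything that ran, in order. The sketch below models the TPM 2.0 extend operation for a SHA-256 PCR bank in plain Python. The boot stages are placeholder byte strings, and `would_unseal` is a heavy simplification of how a real TPM releases a sealed key only when the PCRs match.

```python
import hashlib
import hmac
from typing import Iterable

PCR_LEN = 32  # bytes in a SHA-256 PCR bank


def extend(pcr: bytes, digest: bytes) -> bytes:
    """TPM 2.0 extend for a SHA-256 bank: new = SHA-256(old || digest)."""
    if len(pcr) != PCR_LEN or len(digest) != PCR_LEN:
        raise ValueError("PCR and digest must both be 32 bytes")
    return hashlib.sha256(pcr + digest).digest()


def measure_boot(stages: Iterable[bytes]) -> bytes:
    """Start from the reset value (all zeros) and extend once per boot stage."""
    pcr = bytes(PCR_LEN)
    for stage in stages:
        pcr = extend(pcr, hashlib.sha256(stage).digest())
    return pcr


def would_unseal(current_pcr: bytes, sealed_to: bytes) -> bool:
    """Toy sealing policy: release the secret only if the PCR matches exactly."""
    return hmac.compare_digest(current_pcr, sealed_to)


if __name__ == "__main__":
    expected = measure_boot([b"firmware", b"bootloader", b"kernel"])
    modified = measure_boot([b"firmware", b"my-own-bootloader", b"kernel"])
    print(would_unseal(expected, expected))  # True: same chain
    print(would_unseal(modified, expected))  # False: different loader
```

Nothing in this model stops the modified chain from running; the TPM only records what ran. Whether that record works for the owner or against them depends on who holds the expected values and the keys, which is the remote-validation concern in the quote above.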

I'm not going to post any quotes here because it's a bit ranty, but Richard Stallman (the crazy software freedom dude who created GNU and the GPL) goes off about the entire concept of 'trusted' computing in general.

www.gnu.org...

This has been a long one. Thanks for reading and congrats if you made it through the whole thing.

I want to wrap this up, though, by saying this. Don't just dismiss it with:

'Technology's evil, Big Tech's bad.'

Technology's not evil. The way it's being used is evil, on many levels. Not only are they creating a dystopian, corporate-run mass surveillance society, they're also slowly stealing and locking away one of the greatest and most amazing of all humanity's inventions.

Rather than dismissing technology outright, take the time to learn how to really use computers, enough that you don't need to rely on Microsoft, Apple, Google or the others.

If you've got the money, invest in something like the PinePhone or the Librem 5, or other open source hardware. Use open source software alternatives whenever possible rather than supporting Microsoft or others. Don't even pirate their software. They expect it; it's not a '# you' to them, it's what allows them to keep their grip on the corporate world.

It's worth it. If enough people choose to support alternatives to locked-down, corporate-controlled devices, we may actually be able to keep this amazing technology in the hands of all of humanity, where it belongs.

Started programming in 1978.

Business applications, not toy computers.

The Army, college and graduate school came along. There was a war somewhere in there.

Made a living from 1994 - 2004 as a full time, heads down programmer. Ran one company and later started my own which is still in business.

Learn to program and these tools are your servants. Buy programs and you never know what "extra" features you will get.

Computers made me pretty wealthy. I feel for folks who think that because they use computers, they actually understand how they work.

You have some deep insight here that users should pay attention to.

S&F.

You should keep this up. Am too old and tired to try again.

ETA: I really liked how you ended on a positive note. I would like to add to that.

Learning about computers and programming them is not that hard. As the OP pointed out, there are a lot of free resources. Open source is great: if someone has written a routine and wants to share it, use it! They are proud of their work.

Also, the IT community is one of the most open and sharing groups of people you will find in the world.

You don't have to know complex math, or understand electrical engineering to be good at programming. Just pick a language that is good for you, trial and error till it works, and you have made a machine perform something for you that might have been dull, repetitive, or boring. Now go on and use your free time to do more exciting things!


edit on 17-7-2021 by Havamal because: (no reason given)

There is so much wrong with this that I cannot even fathom how most of it was derived.

1) No "symbol" ever goes through a PC architecture until the very end product on the screen. It is off and on, something like sending Morse code by using the light switch in your home.

2) UEFI is nothing but BIOS v2. Its main difference is that, instead of Windows controlling the hardware device setup, it does.

3) The reason for the Intel Management Engine is that it controls PCI (sort of) and memory, as that is more efficient than two separate controllers (southbridge and memory controllers).

4) Secure Boot is your friend these days. It helps prevent something that was set to start on a reboot, such as ransomware, from affecting your system. There was something like this way back in the late 90s.

I don't have time to go further, but reply to this post with any questions.

I will answer them as I catch them.


a reply to: Gothmog

I'm not sure where to begin with this...

1) No, processors operate through a set of opcodes represented by binary values, in words of a certain size (the number of bits processed at once). They absolutely do operate on symbols. Look up any set of opcodes.

2) I suggest actually looking at the wiki for UEFI. It is far more than BIOS v2. The BIOS was never, ever controlled by Windows. I have no idea where you got that from. The BIOS is what loaded the bootloader, which could have been the Windows bootloader or another like GRUB, as well as performing basic hardware setup and checks.

3) There are countless articles describing exactly what the Intel Management Engine does and the kinds of vulnerabilities it introduces. The mere fact that it runs at "ring -3", beyond the access of even an installed OS, is a cause for concern:

en.m.wikipedia.org...

4) Secure Boot is controlled by Microsoft. Microsoft is nobody's friend. Secure Boot stops no such thing, as evidenced by the large number of ransomware attacks that have occurred since Secure Boot came into effect.
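Point 1 is easy to demonstrate. In the toy machine below, the same bytes are at once a pattern of on/off bits and a sequence of symbols the processor decodes and acts on. The instruction set is entirely made up for illustration (it is not x86 or ARM); real CPUs decode their opcodes in hardware, but the idea of fixed bit patterns standing for operations is the same.

```python
from typing import List, Tuple

# Hypothetical one-byte opcodes for a toy accumulator machine.
LOAD, ADD, SUB, PRINT, HALT = 0x01, 0x02, 0x03, 0x04, 0xFF
MNEMONIC = {LOAD: "LOAD", ADD: "ADD", SUB: "SUB", PRINT: "PRINT", HALT: "HALT"}
TAKES_OPERAND = {LOAD, ADD, SUB}


def fetch(program: bytes, pc: int) -> Tuple[int, int, int]:
    """Decode the instruction at pc into (opcode, operand, next_pc)."""
    op = program[pc]
    if op not in MNEMONIC:
        raise ValueError(f"illegal opcode {op:#04x} at offset {pc}")
    if op in TAKES_OPERAND:
        if pc + 1 >= len(program):
            raise ValueError(f"missing operand at offset {pc}")
        return op, program[pc + 1], pc + 2
    return op, 0, pc + 1


def disassemble(program: bytes) -> List[str]:
    """Turn raw bytes back into human-readable symbols."""
    lines, pc = [], 0
    while pc < len(program):
        op, arg, pc = fetch(program, pc)
        lines.append(f"{MNEMONIC[op]} {arg}" if op in TAKES_OPERAND else MNEMONIC[op])
    return lines


def run(program: bytes) -> List[int]:
    """Execute the program; returns every value PRINTed (8-bit arithmetic)."""
    acc, pc, out = 0, 0, []
    while pc < len(program):
        op, arg, pc = fetch(program, pc)
        if op == LOAD:
            acc = arg
        elif op == ADD:
            acc = (acc + arg) & 0xFF
        elif op == SUB:
            acc = (acc - arg) & 0xFF
        elif op == PRINT:
            out.append(acc)
        elif op == HALT:
            break
    return out


if __name__ == "__main__":
    program = bytes([0x01, 40, 0x02, 2, 0x04, 0xFF])
    print(" ".join(f"{b:08b}" for b in program))  # the "off and on" view
    print(disassemble(program))                   # ['LOAD 40', 'ADD 2', 'PRINT', 'HALT']
    print(run(program))                           # [42]
```

Whether you call it "symbols" or "off and on" is largely a question of which layer you are looking at.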


edit on 17/7/2021 by dug88 because: (no reason given)

a reply to: dug88

1) Wrong.

2) I don't have to look at a "wiki". I may have been mentioned in some (or most).

3) I don't have to deal with articles. I see articles from the manufacturers most every day.

4) Secure Boot is controlled by UEFI (it detects changes to the MBR/GPT of Windows or any other compatible OS). It does READ the boot table, as the last step in POST: checkpoint 7F, Transfer of Boot Code.

Hope this helps to clear up some "mythology".

(I don't care about phones. I own a landline and a flip phone.)
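For the legacy-BIOS side of this exchange: the BIOS reads the first 512-byte sector of the boot disk (the MBR) and only hands control to it if the last two bytes are the 0x55 0xAA boot signature. The four 16-byte partition entries start at offset 446, and partition type 0xEE marks a "protective" MBR on a GPT disk. The minimal Python sketch below only inspects such a sector (the `disk.img` file name in the usage comment is hypothetical); it says nothing about how any particular UEFI firmware implements Secure Boot.

```python
# Inspect a classic 512-byte Master Boot Record (MBR) sector.
MBR_SIZE = 512
PARTITION_TABLE_OFFSET = 446   # 0x1BE: start of the four partition entries
ENTRY_SIZE = 16
SIGNATURE_OFFSET = 510         # bytes 510-511 must be 0x55, 0xAA
GPT_PROTECTIVE_TYPE = 0xEE


def inspect_mbr(sector: bytes) -> dict:
    """Return the boot signature status and a partition-table summary."""
    if len(sector) < MBR_SIZE:
        raise ValueError("an MBR sector is 512 bytes")
    entries = []
    for n in range(4):
        start = PARTITION_TABLE_OFFSET + n * ENTRY_SIZE
        entry = sector[start:start + ENTRY_SIZE]
        entries.append({
            "active": entry[0] == 0x80,  # status byte 0x80 = bootable flag
            "type": entry[4],            # partition type byte
        })
    return {
        "boot_signature": sector[SIGNATURE_OFFSET:SIGNATURE_OFFSET + 2] == b"\x55\xaa",
        "protective_gpt": any(e["type"] == GPT_PROTECTIVE_TYPE for e in entries),
        "partitions": entries,
    }


if __name__ == "__main__":
    import sys
    # Usage: python inspect_mbr.py disk.img   (reads the first sector only)
    if len(sys.argv) > 1:
        with open(sys.argv[1], "rb") as f:
            print(inspect_mbr(f.read(MBR_SIZE)))
```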

edit on 7/17/21 by Gothmog because: (no reason given)

a reply to: dug88

Interesting breakdown of the evolution of PC architecture.

Basically, they had to figure out a way to get around that nasty Open Source OS and software.

By adding Remote Access via the firmware level, presto.

Also, by cryptographically sealing all processes, only their "key" can unlock it all. So, hardware can't interact without being "Trusted".

I was in IT back at Y2K.

Those were the good ole days before IoT, when we could still unplug.

Great Thread. SnF

Sorry. My expertise is with larger and more complex computer systems. I always considered Windows a "toy" system for home users. Still is.

Windows, at its base, is very primitive. Compared to IBM systems of the 1960s, they are only just getting to a virtual machine. IBM 360: 1964.

Ahem. But let the kids have fun with their toys.

edit on 17-7-2021 by Havamal because: (no reason given)

originally posted by: MykeNukem

a reply to: dug88

Interesting breakdown of the evolution of PC architecture.

Basically, they had to figure out a way to get around that nasty Open Source OS and software.

By adding Remote Access via the firmware level, presto.

Also, by cryptographically sealing all processes, only their "key" can unlock it all. So, hardware can't interact without being "Trusted".

I was in IT back at Y2K.

Those were the good ole days before IoT, when we could still unplug.

Great Thread. SnF

1) They already had access since the early 80s (per some).

2) "By adding Remote Access via the firmware level, presto." Where? You can update the BIOS/UEFI over the network without an OS. That's an easy thing to turn off, though.

3) "Also, by cryptographically sealing all processes, only their "key" can unlock it all. So, hardware can't interact without being "Trusted"." Trusted computing (or, technically, TPM) doesn't work that way.

4) "I was in IT back at Y2K." I was "IT" back in the early 80s, and was called in to ride Y2K through.

edit on 7/17/21 by Gothmog because: (no reason given)

originally posted by: Gothmog

originally posted by: MykeNukem

a reply to: dug88

Interesting breakdown of the evolution of PC architecture.

Basically, they had to figure out a way to get around that nasty Open Source OS and software.

By adding Remote Access via the firmware level, presto.

Also, by cryptographically sealing all processes, only their "key" can unlock it all. So, hardware can't interact without being "Trusted".

I was in IT back at Y2K.

Those were the good ole days before IoT, when we could still unplug.

Great Thread. SnF

1) They already had access since the early 80s (per some).

2) "By adding Remote Access via the firmware level, presto." Where? You can update the BIOS/UEFI over the network without an OS. That's an easy thing to turn off, though.

3) "Also, by cryptographically sealing all processes, only their "key" can unlock it all. So, hardware can't interact without being "Trusted"." Trusted computing (or, technically, TPM) doesn't work that way.

4) "I was in IT back at Y2K." I was "IT" back in the early 80s, and was called in to ride Y2K through.

I wasn't being specific or having a pissing contest.

1. Ok. They still have it.

2. If it's enabled, it's enabled. It's enabled by default on most business units, no?

3. It basically works that way. If you're not "Trusted" you will at the very least lose access to certain services.

4. Again, ok. I was locked in Northern Credit Union waiting for the nothing to happen too.

I was just making general comments that aren't off the mark.

I haven't studied IT in a while, but I could figure out any system in a few minutes of sitting at it.

Not trying to see who's the bestest.

a reply to: MykeNukem

1. Ok. They still have it.

That is debatable whether it ever was.

2. If it's enabled, it's enabled. It's enabled by default on most business units, no?

No. That is not correct.

3. It basically works that way. If you're not "Trusted" you will at the very least lose access to certain services.

No. I am using PCs with TPM enabled and TPM disabled. No difference whatsoever.

The reason for the uproar is that the developer version of Windows 11 cannot be installed without a TPM. TPM 2.0 came out in 2014. If one has a system that cannot be TPM 2.0 enabled, one does not need to be trying to run Windows 11.

No more "LEGACY"; that is sooooooo 2000.
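On the practical question of whether a given Linux box even exposes a TPM 2.0 to the OS, recent Linux kernels publish the TPM major version in sysfs. The sketch below assumes the `tpm_version_major` attribute under `/sys/class/tpm/tpm*/` (added around kernel 5.6, so older kernels may not have it); a TPM that is disabled in firmware will typically not appear at all. The `sysfs_root` parameter is a hypothetical addition so the function can be pointed at a test directory.

```python
from pathlib import Path
from typing import Optional


def tpm_major_version(sysfs_root: str = "/sys/class/tpm") -> Optional[int]:
    """Return the TPM major version (1 or 2) reported by Linux sysfs, or None.

    Assumes the kernel exposes /sys/class/tpm/tpmN/tpm_version_major.
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return None  # no TPM class at all: no driver, or TPM disabled
    for device in sorted(root.glob("tpm*")):
        attr = device / "tpm_version_major"
        if attr.is_file():
            return int(attr.read_text().strip())
    return None


if __name__ == "__main__":
    version = tpm_major_version()
    if version is None:
        print("No TPM visible to the OS")
    else:
        print(f"TPM {version}.x visible to the OS")
```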

Not trying to see who's the bestest.

Me neither.

Yet, I am attempting to say "I know", as I HAVE to know.

Part of the job for the last 30 years.

edit on 7/17/21 by Gothmog because: (no reason given)

originally posted by: Gothmog

a reply to: MykeNukem

1. Ok. They still have it.

That is debatable whether it ever was.

2. If it's enabled, it's enabled. It's enabled by default on most business units, no?

No. That is not correct.

3. It basically works that way. If you're not "Trusted" you will at the very least lose access to certain services.

No. I am using PCs with TPM enabled and TPM disabled. No difference whatsoever.

The reason for the uproar is that the developer version of Windows 11 cannot be installed without a TPM. TPM 2.0 came out in 2014. If one has a system that cannot be TPM 2.0 enabled, one does not need to be trying to run Windows 11.

No more "LEGACY"; that is sooooooo 2000.

Not trying to see who's the bestest.

Me neither.

Yet, I am attempting to say "I know", as I HAVE to know.

Part of the job for the last 30 years.

I have no doubt you're more up-to-date and on top of the latest tech; I never doubted it. As a former tech and current geek, I can tell.

1. Ok. We debated.

2. From what I've seen, Network Boot is enabled by default as the 3rd or 4th option in the Boot List. Maybe that's changed. That would give you Remote Access for sure.

3. I was assuming we were talking about "enabled", which, if the system detects a hardware change, will possibly disable certain driver functions until updated.

I know, you know.

Never doubted it, man.

a reply to: dug88

I just noticed this (I did state that I did not have a lot of time to go over this information):

AMD builds TPM right into the firmware of their CPUs.

It can be disabled if wanted.

One has a choice of 3 different ways to enable it.

But, either way, isn't it a good thing to add an extra layer of protection?

Trusted Platform Module (TPM, also known as ISO/IEC 11889) is an international standard for a secure cryptoprocessor, a dedicated microcontroller designed to secure hardware through integrated cryptographic keys.
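One piece of how a TPM actually builds "trust" (as opposed to sealing every process) is the Platform Configuration Register extend operation: a PCR cannot be written directly, only extended as new = H(old || digest), so its final value depends on every measurement and on their order. The Python sketch below models a SHA-256 PCR bank in software. The component names are hypothetical, and on a real system the firmware and OS send digests to the TPM chip (e.g. via the TPM2_PCR_Extend command) rather than computing PCRs themselves.

```python
import hashlib

PCR_SIZE = 32  # SHA-256 bank: each PCR holds one 32-byte digest


def extend(pcr: bytes, measurement: bytes) -> bytes:
    """Model a PCR extend: new = SHA-256(old || SHA-256(measurement))."""
    digest = hashlib.sha256(measurement).digest()
    return hashlib.sha256(pcr + digest).digest()


def measure_boot(components) -> bytes:
    """Replay a sequence of measurements starting from an all-zero PCR."""
    pcr = bytes(PCR_SIZE)  # simplified: boot-time PCRs start at zero after reset
    for blob in components:
        pcr = extend(pcr, blob)
    return pcr


if __name__ == "__main__":
    # Hypothetical boot chain; real measurements cover firmware, bootloader, etc.
    good = [b"firmware v1", b"bootloader v1", b"kernel v1"]
    tampered = [b"firmware v1", b"bootloader EVIL", b"kernel v1"]
    print(measure_boot(good).hex())
    print(measure_boot(good) == measure_boot(tampered))  # False: any change shows up
```

Because the result is one-way and order-dependent, a key sealed to expected PCR values is only released when the same chain is measured again, which is a much narrower job than "sealing all processes".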

edit on 7/17/21 by Gothmog because: (no reason given)

a reply to: MykeNukem

2. From what I've seen, Network Boot is enabled by default as the 3rd or 4th option in the Boot List. Maybe that's changed. That would give you Remote Access for sure.

Network boot is enabled by default in the BIOS/UEFI, and has been for a long, long time on almost every PC (way down in the list).

This is for SANs, or for anyone booting from a server (PXE boot), which has to be specifically set up or it doesn't work at all. It is of no use at all to the normal user.

It does come in handy if one is setting up 100s or more machines at one time. Set up a boot server loaded with an image, and one can do them all in one go.
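On a UEFI machine running Linux, one way to see where network/PXE boot sits in the boot order is the output of `efibootmgr`, which lists a `BootOrder` line and one `BootXXXX` line per entry (a `*` marks an active entry). The parser below is a sketch: it assumes that usual text layout, and it guesses network entries from labels containing "PXE" or "Network", which varies by vendor. The `SAMPLE` text is illustrative, not captured from a real machine. As noted above, an entry being present does not mean anything will boot unless a PXE server has been set up.

```python
import re

# Matches lines like "Boot0002* UEFI: PXE IPv4 Intel(R) Ethernet"
ENTRY_RE = re.compile(r"^Boot([0-9A-Fa-f]{4})(\*?)\s+(.*)$")

# Illustrative efibootmgr-style output (hypothetical machine)
SAMPLE = """BootCurrent: 0001
Timeout: 1 seconds
BootOrder: 0001,0000,0002
Boot0000* Windows Boot Manager
Boot0001* ubuntu
Boot0002* UEFI: PXE IPv4 Intel(R) Ethernet
"""


def network_boot_positions(text: str, keywords=("pxe", "network")) -> list:
    """Return (position in BootOrder, label, active) for likely network entries."""
    order = []
    labels = {}
    active = {}
    for line in text.splitlines():
        if line.startswith("BootOrder:"):
            order = [n.strip().upper() for n in line.split(":", 1)[1].split(",") if n.strip()]
            continue
        match = ENTRY_RE.match(line)
        if match:
            number = match.group(1).upper()
            active[number] = match.group(2) == "*"
            # Newer efibootmgr prints the device path after a tab; keep only the label
            labels[number] = match.group(3).split("\t")[0].strip()
    hits = []
    for position, number in enumerate(order, start=1):
        label = labels.get(number, "")
        if any(k in label.lower() for k in keywords):
            hits.append((position, label, active.get(number, False)))
    return hits


if __name__ == "__main__":
    print(network_boot_positions(SAMPLE))
    # prints [(3, 'UEFI: PXE IPv4 Intel(R) Ethernet', True)]
```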

3. I was assuming we were talking about "enabled", which, if the system detects a hardware change, will possibly disable certain driver functions until updated.

No. You assumed wrong (at least as of now and in the immediate future).

My three main computers are Lenovo T410s laptops running Linux Mint 20.1.

These laptops are pre-UEFI BIOS computers.

What are the weak points of these computers?

And what are the strong points?

What I like is not having micros**t Windows controlling my computers.

And not having to BUY antivirus software every year.

I used to get hit with viruses a couple of times a year, and since going to Linux full time I have had no viruses, malware, or ransomware.

And also not having a lot of bloatware on my computers, slowing them down to a snail's pace.

Since all three computers are clones with the same OS and data on them, and I use at most two at a time, I laugh at the threat of malware and ransomware: all I have to do is format and clone a new hard drive to bring the infected computer back online.
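The re-clone approach described above works best if you can confirm that a freshly cloned drive really matches the known-good image. A minimal sketch, assuming a raw image file (e.g. one made with `dd`): hash the image, then hash the same number of bytes from the target drive (which may be larger than the image) and compare. The paths in the usage comment are hypothetical, and reading a raw device such as `/dev/sdX` normally requires root.

```python
import hashlib
import os


def sha256_prefix(path: str, length: int, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of the first `length` bytes of a file or block device."""
    h = hashlib.sha256()
    remaining = length
    with open(path, "rb") as f:
        while remaining > 0:
            block = f.read(min(chunk_size, remaining))
            if not block:
                break  # source ended early; the hash will not match
            h.update(block)
            remaining -= len(block)
    return h.hexdigest()


def clone_matches(image_path: str, target_path: str) -> bool:
    """True if the target starts with exactly the bytes of the image."""
    size = os.path.getsize(image_path)
    return sha256_prefix(image_path, size) == sha256_prefix(target_path, size)


if __name__ == "__main__":
    import sys
    if len(sys.argv) == 3:
        # e.g. python verify_clone.py golden.img /dev/sdX   (hypothetical paths)
        print(clone_matches(sys.argv[1], sys.argv[2]))
```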

originally posted by: Gothmog

a reply to: MykeNukem

2. From what I've seen, Network Boot is enabled by default as the 3rd or 4th option in the Boot List. Maybe that's changed. That would give you Remote Access for sure.

Network boot is enabled by default in the BIOS/UEFI, and has been for a long, long time on almost every PC (way down in the list).

This is for SANs, or for anyone booting from a server (PXE boot), which has to be specifically set up or it doesn't work at all. It is of no use at all to the normal user.

It does come in handy if one is setting up 100s or more machines at one time. Set up a boot server loaded with an image, and one can do them all in one go.

3. I was assuming we were talking about "enabled", which, if the system detects a hardware change, will possibly disable certain driver functions until updated.

No. You assumed wrong (at least as of now and in the immediate future).

I know what Network Boot is, and have used it in just the situation you describe.

I also know that if I had bad intentions, I could use it for nefarious purposes.

Maybe you assumed wrong? They actually may develop something even more robust than TPM in the near future.

I'm not talking about "normal users", I'm talking about admins (whoever they may be) that can access remotely.

Didn't you just learn something about TPM a few posts up?

edit on 7/17/2021 by MykeNukem because: etd

a reply to: Gothmog

Here's a situation off the top of my head:

Could a packet sniffer be set up to retrieve TPM stack API packets, and therefore the "key", which would enable you to spoof a piece of hardware and deliver a payload, taking over the complete machine?

Just a thought...

OP: thoughts on this?

edit on 7/17/2021 by MykeNukem because: op

originally posted by: Havamal

Sorry. My expertise is with larger and more complex computer systems. I always considered Windows a "toy" system for home users. Still is.

Windows, at its base, is very primitive. Compared to IBM systems of the 1960s, they are only just getting to a virtual machine. IBM 360: 1964.

Ahem. But let the kids have fun with their toys.

You realize anyone can just run Hercules and emulate an IBM 360 OS, which is now public domain, at thousands of times the speed, right?

Can the IBM 360 emulate Windows? No, of course not.

The more I'm hearing the "experts" in this thread talk, the more I'm realizing they're not experts.

edit on 7/17/2021 by MykeNukem because: BSperts
