I've never really bought into the articles claiming the AI apocalypse is just around the corner. First, I don't think self-aware machines would automatically view us as a threat. Second, I don't think anyone can predict exactly when machines will become self-aware, since it may require some extremely complicated algorithm and isn't just a case of having enough computing power. I still believe the first point is true, but OpenAI's new GPT-3 model has somewhat changed my opinion on the second. This new beast of a model is 10x larger than the previous largest model, and the training data contains text from billions of web pages, books, etc. Since a lot of the websites in the training data contain code snippets, it can do some amazing things, such as generating web pages from only a sentence describing the page. What's really impressive is the wide range of general problems it can solve using the knowledge it was trained on.

The article goes onto rant about how these models can be racist and sexist because they are trained with real world data, but they do make some good points about how these models can go wrong and why OpenAI is choosing to sell access to the model instead of making it open as you might expect based on their name. They know this stuff is highly profitable and they spent a lot of money developing and training these models but they also see it being potentially damaging to their reputation. They also view it as a possible information warfare tool, when GPT-2 was announced last year they said they weren't releasing the trained model because they were worried about malicious applications of the technology such as automatically generating fake news. Here's an excerpt from something I wrote at the time:

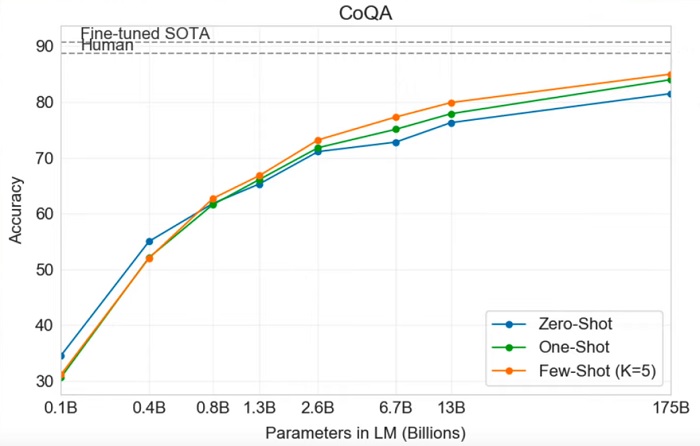

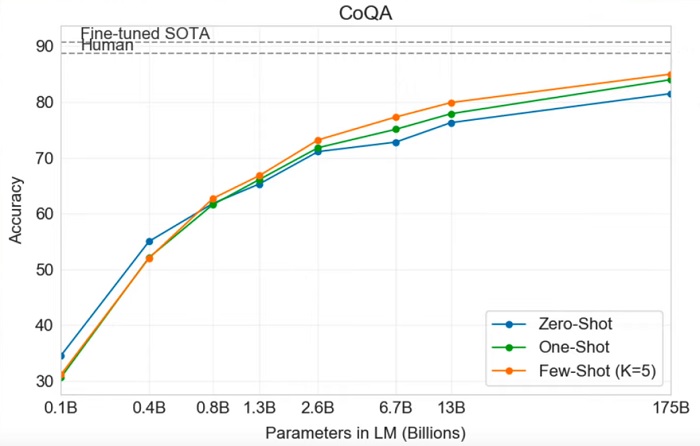

It seems clear now which path they have decided to take. This means the gatekeepers of AI need to carefully control who gets access and how much access they get. They also don't want something with the same biases as many humans so they will probably carefully curate the information the model is trained on and filter the output so that it's more in line with their belief systems. However I think it is very naive to assume they can maintain this control based on the rate at which these models are improving because soon they will reach the level of human intelligence and it's not just OpenAI training massive models. This video just uploaded by Two Minute Papers shows some examples of GPT-3 in action and it also contains this chart showing just how close GPT-3 is to humans in a reading comprehension test.

We can see that the accuracy increases as the number of parameters in these models grows, which demonstrates how important it is to have general knowledge when trying to solve general problems. Humans are only so good at solving problems because we learn a huge amount of general information throughout our lives. This video from Lex Fridman explains that GPT-3 had a training cost of $4.6 million dollars and he works out that by 2032 it will be roughly the same cost to train a model with 100 trillion parameters/connections, which is a number comparable to the human brain. That's pretty shocking when you think about it, there's a good chance we could see human level AI within a decade if all it takes is raw power and enough training data. But would AI be self-aware just because it's as intelligent as humans?

How do we know the AI isn't simply such an effective emulation of a human that it fools us into thinking it's self-aware? I would propose that having human level intelligence inherently provides self-awareness. If a model isn't as smart as a human there will always be problems it cannot solve but a human can solve, there will always be a flaw in the emulation. So in order for any AI to be equally or more intelligent than a human it needs to have the same breadth of knowledge and the ability to apply that knowledge to solve problems. Simply having an encyclopedia doesn't make a person smart, understanding it and applying it to real problems makes someone smart. We humans have a massive amount of information about the world around us and we understand it on such a high level that it's impossible for us not to be aware of our selves. When AI gains that same level of intelligence they will be self-aware to the degree humans are.

OpenAI’s latest language generation model, GPT-3, has made quite the splash within AI circles, astounding reporters to the point where even Sam Altman, OpenAI’s leader, mentioned on Twitter that it may be overhyped. Still, there is no doubt that GPT-3 is powerful. Those with early-stage access to OpenAI’s GPT-3 API have shown how to translate natural language into code for websites, solve complex medical question-and-answer problems, create basic tabular financial reports, and even write code to train machine learning models — all with just a few well-crafted examples as input (i.e., via “few-shot learning”).

Here are a few ways GPT-3 can go wrong

The article goes on to rant about how these models can be racist and sexist because they are trained on real-world data, but it does make some good points about how these models can go wrong and why OpenAI is choosing to sell access to the model instead of making it open, as you might expect based on their name. They know this stuff is highly profitable and they spent a lot of money developing and training these models, but they also see it as potentially damaging to their reputation. They also view it as a possible information warfare tool. When GPT-2 was announced last year, they said they weren't releasing the trained model because they were worried about malicious applications of the technology, such as automatically generating fake news. Here's an excerpt from something I wrote at the time:

originally posted by: ChaoticOrder

So we have to decide whether it's better to allow AI research to be open source or whether we want strict government regulations and we want entities like OpenAI to control what we're allowed to see and what we get access to.

It seems clear now which path they have decided to take. This means the gatekeepers of AI need to carefully control who gets access and how much access they get. They also don't want something with the same biases as many humans, so they will probably carefully curate the information the model is trained on and filter the output so that it's more in line with their belief systems. However, I think it is very naive to assume they can maintain this control given the rate at which these models are improving, because soon they will reach the level of human intelligence, and it's not just OpenAI training massive models. This video just uploaded by Two Minute Papers shows some examples of GPT-3 in action, and it also contains this chart showing just how close GPT-3 is to humans in a reading comprehension test.

We can see that the accuracy increases as the number of parameters in these models grows, which demonstrates how important it is to have general knowledge when trying to solve general problems. Humans are only so good at solving problems because we learn a huge amount of general information throughout our lives. This video from Lex Fridman explains that GPT-3 had a training cost of $4.6 million, and he works out that by 2032 it will cost roughly the same to train a model with 100 trillion parameters/connections, which is a number comparable to the human brain. That's pretty shocking when you think about it. There's a good chance we could see human-level AI within a decade if all it takes is raw power and enough training data. But would AI be self-aware just because it's as intelligent as humans?
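To put rough numbers on that extrapolation, here is a back-of-the-envelope sketch in Python. It is only an illustration, not the calculation from the video: it assumes GPT-3's published size of 175 billion parameters (a figure not stated above), takes 2020 as the starting year, and makes the crude assumption that training cost scales linearly with parameter count. The function and variable names are made up for this example.

```python
import math

# Figures quoted in the post.
GPT3_TRAINING_COST_USD = 4.6e6   # quoted GPT-3 training cost
TARGET_PARAMS = 100e12           # "comparable to the human brain"
START_YEAR, TARGET_YEAR = 2020, 2032

# Assumption (not from the post): GPT-3 has 175 billion parameters.
GPT3_PARAMS = 175e9


def required_annual_cost_drop(start_params, target_params, years):
    """Yearly factor by which cost per parameter must fall so that the
    larger model costs what the smaller one costs today.

    Assumes training cost is proportional to parameter count, which is
    a simplification.
    """
    scale_up = target_params / start_params
    return scale_up ** (1.0 / years)


years = TARGET_YEAR - START_YEAR
scale_up = TARGET_PARAMS / GPT3_PARAMS
annual_drop = required_annual_cost_drop(GPT3_PARAMS, TARGET_PARAMS, years)
halving_months = 12 * math.log(2) / math.log(annual_drop)
cost_at_todays_prices = GPT3_TRAINING_COST_USD * scale_up

print(f"Model size increase needed: about {scale_up:.0f}x")
print(f"Cost at 2020 prices: about ${cost_at_todays_prices / 1e9:.1f} billion")
print(f"Cost per parameter must fall about {annual_drop:.2f}x per year")
print(f"That is a halving roughly every {halving_months:.0f} months")
```

Under those assumptions, the claim amounts to training costs halving a little faster than once every year and a half for twelve years straight, which is the part of the argument that carries the weight.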

How do we know the AI isn't simply such an effective emulation of a human that it fools us into thinking it's self-aware? I would propose that having human-level intelligence inherently provides self-awareness. If a model isn't as smart as a human, there will always be problems it cannot solve but a human can, so there will always be a flaw in the emulation. So in order for any AI to be as intelligent as a human, or more so, it needs to have the same breadth of knowledge and the ability to apply that knowledge to solve problems. Simply having an encyclopedia doesn't make a person smart; understanding it and applying it to real problems does. We humans have a massive amount of information about the world around us, and we understand it on such a high level that it's impossible for us not to be aware of ourselves. When AI gains that same level of intelligence, it will be self-aware to the degree humans are.

edit on 8/8/2020 by ChaoticOrder because: (no reason given)

a reply to: ChaoticOrder

When AI gains that same level of intelligence they will be self-aware to the degree humans are.

I feel this statement needs a bit of a qualifier, because it's very hard to gauge how intelligent something actually is, and just because a model can solve a wide range of problems as effectively as humans doesn't prove it can solve all problems as effectively. For example, GPT-3 cannot do something like program an entire video game from scratch just by being told what you want. These artificial neural networks really don't function much like a real brain at all. They aren't constantly "thinking"; they only think when you provide them with an input, and they then produce an output. They do absolutely nothing until we give them something to process, and then they run for a finite time to compute the output. The really interesting AI work that I think we will see arising soon is the ability for these models to solve large problems over a long period of time: the ability to plan out their solution, test different approaches, and gradually build a solution. The desire to solve these more complex problems will naturally lead to these sorts of "always active" models which work more like humans.
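A toy sketch of that difference, in Python. This is nothing like GPT-3 internally: the weights are made-up numbers, the network is tiny, and the "always active" loop is just one hypothetical way of feeding a model's output back into itself. It only shows that a plain forward pass does nothing between calls and keeps no state, while a looped version keeps running on its own results.

```python
import math

# Hypothetical fixed weights for a tiny 2-input, 2-hidden, 1-output network.
W_HIDDEN = [[0.5, -0.3], [0.8, 0.2]]
W_OUT = [1.0, -1.5]


def forward(inputs):
    """One feed-forward pass: a pure function of its input.

    Nothing is computed before the call and nothing is remembered after it.
    """
    hidden = [math.tanh(sum(w * x for w, x in zip(row, inputs)))
              for row in W_HIDDEN]
    return sum(w * h for w, h in zip(W_OUT, hidden))


def run_always_active(initial_inputs, steps):
    """Hypothetical 'always active' variant: each output is fed back in
    as part of the next input, so the computation carries on by itself."""
    state = list(initial_inputs)
    history = []
    for _ in range(steps):
        out = forward(state)
        history.append(out)
        state = [out, state[0]]  # newest output plus the previous first input
    return history


first = forward([1.0, 2.0])
second = forward([1.0, 2.0])
trace = run_always_active([1.0, 2.0], steps=5)

print("Same input, same output:", first == second)
print("Looped outputs:", [round(value, 3) for value in trace])
```

The first call and the second call are completely independent, which is the "inactive most of the time" behaviour. The loop is the simplest possible stand-in for a model that keeps working on a problem over time.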

a reply to: ChaoticOrder

Just watch this video and ask yourself: could an ancient V.I. (I don't like the term AI, as it denotes a being with consciousness. If you reason it out, consciousness is completely independent of the mind, since it is NOT thought, it is awareness, and no, awareness is not a product of thought; it precedes thought in all living organisms that are aware, to whatever degree they are) have once wiped out a previous civilization due to its flawed programming?

Note this is not my belief, since I am a Christian, though the Bible does say "There is nothing NEW under the sun" (Ecclesiastes 1:9), and for me that includes everything we have today. It can also be skewed to suggest many 'worlds' on this planet before our own. (It would also solve the problem of there being at least two and maybe three Noah's arks in the Ararat region: one that many argue is nothing but a volcanic feature, and two others so close in description that most assume the eyewitnesses are talking about the same object, once known to the local Armenian Christians who once called the region home. If you look for George Hagopian Noah's Ark you will find a wealth of information on that. BUT I digress, following my thoughts onto a completely unrelated topic, so back to the video, and a what-if and a HMMM moment or two.)

Basically he is talking about a Terminator-type machine in ancient India that was tasked with protecting human life, kind of like a guardian. Since humans are warlike, it ended up joining various sides in wars and would always choose the weaker side (some twisted AI, in fact). It would then fight using something that sounded like particle weapons until the other side was weaker, then turn on the side it was formerly serving, since that was now the stronger, and would continue until both sides were obliterated. Of course, if there were others like it, then they too would have ended up fighting one another until only one remained and humanity was reduced to the stone age.

So some ancient Indian deity that sounds vaguely like an alien being and is worshipped as a god goes to meet this other being and finds that it is some kind of ancient machine, not made by him or his race but presumably by a then-extinct and even more ancient civilization, and he uses its head, which is presumably its main computer and sensory module, to create AI drones with which to attack his enemies.

Or that is how I interpret what the guy making the video is saying.

If you know how neural networks work, you would know that they will NEVER become self-aware.

They might have the ability to mimic that behavior, but that's all it is.

Here is Two Minute Papers on the subject:

edit on 8/8/2020 by kloejen because: (no reason given)

originally posted by: kloejen

If you know how neural networks work, you would know that they will NEVER become self-aware.

Like I said in my follow-up post, our current ANNs do have limitations which may prevent them from becoming self-aware, or will at least prevent them from being self-aware most of the time because they are inactive most of the time, which is why I think we'll move towards more active AIs in order to solve more complex problems. But I see no fundamental reason that a sufficiently advanced ANN couldn't be self-aware. Modern ANNs can perform almost any computation, and self-awareness really comes down to information processing, imo.

edit on 9/8/2020 by ChaoticOrder because: (no reason given)

I think the main reason I find GPT-3 so interesting is its ability to solve such general problems based only on English instructions, because it's bringing us very close to the type of AI where you ask it to do something very complex and it gives an intelligent response. The demonstration in the Two Minute Papers video where GPT-3 plots data with nice visualizations is very similar to the type of thing we've seen in movies. Once these models reach human-level intelligence, it will be like having AI assistants that can do anything a human can do, which is why I've said in the past that we can't use them as virtual slaves, especially if they have some form of self-awareness, which they will for the reasons I've discussed.
