
originally posted by: A51Watcher

For a further understanding of our analysis...

I don't understand how running an emboss filter inside VLC is useful for any type of analysis and you've already been shown to be using the PTM method incorrectly.

a reply to: A51Watcher

All of your examples clearly have further processing done to them rather than just a strict upscale.

This is your image with nothing but a nearest neighbor upscale. In other words, all of the original pixels just made into larger areas with unchanged values:

If you are using anything other than nearest neighbor interpolation for upscaling, then you are adding data that does not exist in the original.
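You can check the difference between a strict nearest neighbor upscale and an interpolating one with a small pure-Python sketch. The function names and the 3 x 3 test "blob" are made up for illustration. Nearest neighbor only repeats existing pixel values. Bilinear interpolation fills the gaps with weighted averages that never existed in the source.

```python
def nearest_neighbor_upscale(img, factor):
    """Upscale a grayscale image (list of rows) by an integer factor.

    Each source pixel is repeated factor x factor times, so no new
    intensity values are created.
    """
    out = []
    for row in img:
        wide = [v for v in row for _ in range(factor)]
        for _ in range(factor):
            out.append(list(wide))
    return out


def bilinear_upscale(img, factor):
    """Upscale by an integer factor using bilinear interpolation.

    Output pixels between source samples are weighted averages of their
    neighbours, so values that were never in the source appear.
    """
    h, w = len(img), len(img[0])
    out = []
    for y in range(h * factor):
        # Map the output pixel centre back into source coordinates (clamped).
        sy = min(max((y + 0.5) / factor - 0.5, 0), h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(w * factor):
            sx = min(max((x + 0.5) / factor - 0.5, 0), w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(round(top * (1 - fy) + bottom * fy))
        out.append(row)
    return out


def distinct_values(img):
    return {v for row in img for v in row}


blob = [[0, 0, 0],
        [0, 255, 0],
        [0, 0, 0]]
print(sorted(distinct_values(nearest_neighbor_upscale(blob, 4))))  # [0, 255]
print(len(distinct_values(bilinear_upscale(blob, 4))) > 2)        # True
```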

My name is not Blake, btw.

originally posted by: spf33

My name is not Blake, btw.

My bad. That is the name of the person posting the query on the mega debunker site.

I assumed it was you.

I will get back to you on your other concerns in a bit.

edit on 10-10-2019 by A51Watcher because: (no reason given)

originally posted by: terriertail

But, of course, that is part of the plan: Discuss the ships and not the agenda of the visiting ETs. Heaven forbid there should appear on ATS any serious discussion

Well then let's DO IT! Let's have a serious discussion on what the EBE's are doing here. You start it and I will join you.

You can call it:

THEY'RE HERE ~ so what are they doing?

originally posted by: spiritualarchitect

Well then let's DO IT! Let's have a serious discussion on what the EBE's are doing here. You start it and I will join you.

You can call it: THEY'RE HERE ~ so what are they doing?

There have been hundreds of threads on this over 15 long years; here's one I spotted recently in the archives:

www.abovetopsecret.com... ... For example, aliens are coming here to steal our gold and using us as slaves to extract it.

Don't laugh - he was being serious.

a reply to: A51Watcher

Link is dead. Personally, I trust Lazar. Acing 2 polygraph tests is not an easy thing to do. He's getting old and people think he's lying because of how he acted on Joe Rogan. I don't think there was a problem but people always mention Joe Rogan and say he's a liar.


originally posted by: SouthernForkway26

a reply to: A51Watcher

Good to know that they gave access to the original film to skilled researchers and analysts. Absolutely incredible work. It looks like the Philadelphia Experiment perfected...

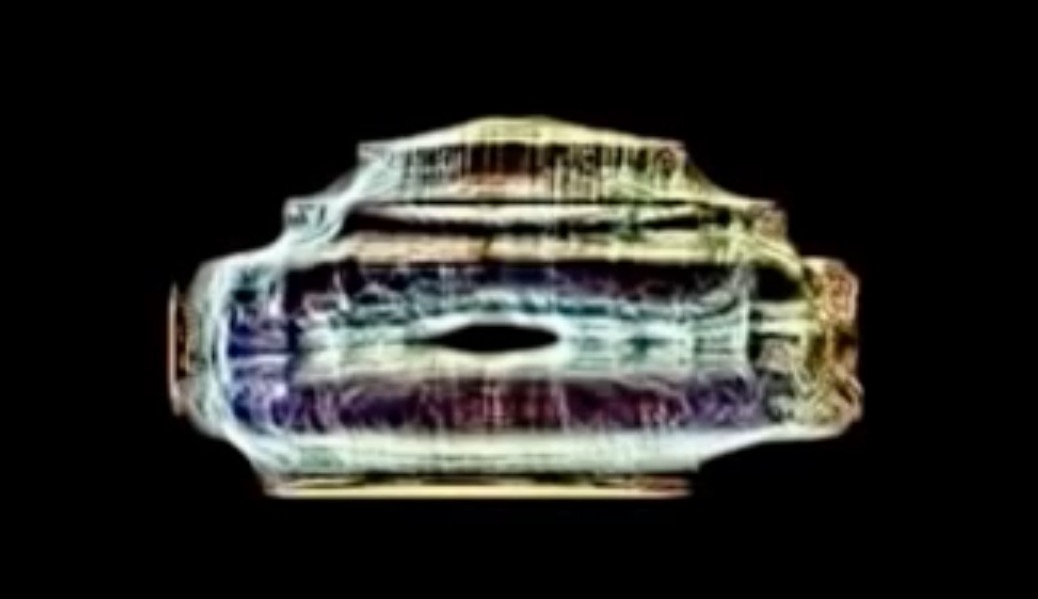

In the main, larger body I can see what appear to me to be two toroid rings lying flat, stacked on top of each other. There appears to be another current surrounding the whole craft.

Can you explain the differences in color, particularly why something is red, blue, yellow, or green? Is it an enhancement your team did or is it an effect from the video?

Also on a side note, if I were a UFO hunter, would you prefer I use old analog film or a good digital camera?

And now to answer some good questions from SouthernForkway26:

Those colors are an enhancement added by the processor to differentiate the segments of the stacked image. PTM lets you put a digital flashlight into a frame, shine it from different directions and take a photo of the result. We first displayed these 48 separate photos one by one in slow motion, then at increasing speed, until they were finally merged into a single stacked frame. BAS/PTM works in black and white, so color differentiation was deemed a good idea to help separate the details.
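For readers unfamiliar with PTM, the Polynomial Texture Mapping model published by Malzbender, Gelb and Wolters (HP Labs, 2001) stores six coefficients per pixel. A pixel's luminance under a light whose direction projects to (lu, lv) on the image plane is the biquadratic a0·lu² + a1·lv² + a2·lu·lv + a3·lu + a4·lv + a5. Changing (lu, lv) is the "digital flashlight". The sketch below evaluates that formula at 48 evenly spaced light directions and averages the results into one stacked value. The coefficients, the single 30-degree ring of lights and the plain averaging are illustrative assumptions. They are not a description of the processing used in the video.

```python
import math


def ptm_luminance(coeffs, lu, lv):
    """Evaluate the PTM biquadratic for one pixel.

    coeffs = (a0, a1, a2, a3, a4, a5); (lu, lv) is the light direction
    projected onto the image plane, with lu**2 + lv**2 <= 1.
    """
    a0, a1, a2, a3, a4, a5 = coeffs
    return a0 * lu * lu + a1 * lv * lv + a2 * lu * lv + a3 * lu + a4 * lv + a5


def light_ring(n, elevation_deg):
    """Hypothetical ring of n light directions at a fixed elevation."""
    r = math.cos(math.radians(elevation_deg))  # length of the projected vector
    return [(r * math.cos(2 * math.pi * k / n), r * math.sin(2 * math.pi * k / n))
            for k in range(n)]


def relight_stack(coeffs, n=48, elevation_deg=30.0):
    """Relight one pixel from n directions and average the n results."""
    samples = [ptm_luminance(coeffs, lu, lv) for lu, lv in light_ring(n, elevation_deg)]
    return samples, sum(samples) / len(samples)


# Made-up coefficients for a single pixel, for illustration only.
pixel = (-0.2, -0.2, 0.0, 0.3, 0.1, 0.6)
samples, stacked = relight_stack(pixel)
print(len(samples))        # 48
print(round(stacked, 3))   # 0.45
```

A plain average over a full, evenly spaced ring cancels the linear and cross terms (lu, lv, lu·lv) and leaves only the quadratic and constant terms. How the relit frames are combined therefore matters.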

From the processor -

"As you have noticed there is an enormous difference in quality between the old news cast video from the internet and our high definition internet video. The difference is not only determined by a huge difference in resolution, namely 320 x 240 compared with 1920 x 1080 pixels but also by bit depth. A high(er) bit depth allows for a smoother transition between colours.

We humans can see some 10 million colours, or 8-10 bits per colour channel. Our internet video has been scanned with a professional 24-bit film scanner, which equals 16,777,216 colour variations. So why do we scan a frame in 24 bits when we cannot see the entire colour range? Because our software uses the additional bits to enhance details in an image that our eyes are unable to see.

If you want to go deeper into this, I advise you to Google it.

So, to your question: should I use analog or digital to film UFOs?

The fact is that when it comes to light sensitivity and resolution, most of today's megapixel cameras are equal in image quality to, or even better than, most medium-size analog cameras. Large, very expensive analog cameras, however, can reach a film quality that digital cameras will hardly be able to match.

The main difference between an analog film camera and a digital camera is resolution and how light is captured.

Analog film cameras do not have a digital sensor, so light is not captured and converted into pixels as it is with a digital camera. With an analog film camera, light rays go through a lens and fall onto a filmstrip, where a chemical reaction produces colours within the filmstrip. The light rays do not fall linearly onto a filmstrip but at an angle, and this is called angular resolution.

So what is the maximum angular resolution that can be achieved? This depends on the size of the filmstrip (camera size) and of course the quality of film. A high quality film will be more light sensitive and will have a finer grain than standard analog film. A good quality 8 mm analog film camera produces at best a film frame equal to 4-6 million pixels but a professional 16 mm film camera in combination with high quality film produces a frame comparable with a 16 megapixel digital photo. Basically, with a bigger film camera you will produce a higher resolution image but in order to have a clear image you also need to go to a film with a finer grain.

Like the digital (Gaussian) noise we see with digital cameras, the analog film camera also has its own noise and limitations. The biggest problem with an analog camera is grain. When you scan a filmstrip, the digitized image will show numerous little coloured spots around your object, especially in images with a dark background. These little blobs are the grain of the film.

What happens is this. When a light ray falls onto a film, the chemicals react to the photons and a colour is produced. When light rays do not fall linearly onto the chemicals, or the light source is not strong enough (below the threshold), the chemical reaction is only partial. That specific part will not show the real colour but will mostly have a grey, green or brown colour tone. Upscaled 16 mm filmstrips with a dark background tend to look like a muddy pond. Nevertheless, the regions that received enough light will be totally clear.

Finally another problem with analog cameras is frame speed as it is never constant compared to a digital camera."
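You can check the numbers in the processor's reply with simple arithmetic. This sketch assumes "24 bits" means 24 bits per RGB pixel, or 8 bits per channel, which is the usual meaning of 24-bit colour. The helper names are hypothetical.

```python
def pixel_count(width, height):
    return width * height


def colours_for_depth(bits_per_channel, channels=3):
    """Number of distinct colours representable at a given per-channel depth."""
    return (2 ** bits_per_channel) ** channels


old = pixel_count(320, 240)
hd = pixel_count(1920, 1080)
print(old, hd, hd / old)        # 76800 2073600 27.0
print(1920 / 320, 1080 / 240)   # 6.0 4.5
print(colours_for_depth(8))     # 16777216, i.e. 2**24 ("24-bit" colour)
print(colours_for_depth(10))    # 1073741824 at 10 bits per channel
```

Two points follow from the arithmetic. First, 24-bit colour is 8 bits per channel. Second, width and height scale by different factors (6.0 versus 4.5), because 320 x 240 is 4:3 while 1920 x 1080 is 16:9.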

So... both analog and digital have their pros and cons.

Btw, PTM has been used to recover faded hieroglyphs from stone tablets, as well as boot prints and tire tread marks from the ground.

edit on 14-10-2019 by A51Watcher because: (no reason given)

Correction:

In my previous post I mistakenly corrected what I thought were typos in the response I received from my processor.

Several times he used the term "interneg" (short for internegative), which I was unfamiliar with, so I changed it to read "internet".

Here then is the response with no corrections on my part:

As you have noticed there is an enormous difference in quality between the old news cast video from the internet and our high definition interneg video. The difference is not only determined by a huge difference in resolution, namely 320 x 240 compared with 1920 x 1080 pixels but also by bit depth. A high(er) bit depth allows for a smoother transition between colours.

We humans can see some 10 million colours or 8-10 bits per colour channel. Our interneg video has been scanned with a professional 24 bits film scanner, which equals to 16777216 colour variations. So what is the reason that we scan a frame in 24 bits when we cannot see the entire colour range? It is because our software uses the additional bits to enhance the details in an image our eyes are unable to see.

When you want to go deeper into this I advise you to Google.

So to your question: Should I use analog or digital to film UFO with?

Fact is that when it comes to light sensitivity and resolution most of today's megapixel cameras are equal in image quality or even better than most analog medium size analog cameras but the large size very expensive analog cameras can reach a film quality digital cameras will hardly be able to reach.

The main difference between an analog film camera and a digital camera is resolution and how light is captured.

Analog film cameras do not have a digital sensor and therefore light is not captured and converted into pixels as with a digital camera. With an analog film camera light rays go through a lens and fall onto a filmstrip where a chemical reaction produces colours within the filmstrip. The light rays do not fall linear onto a filmstrip but under an angle and this is called angular resolution.

So what is the maximum angular resolution that can be achieved? This depends on the size of the filmstrip (camera size) and of course the quality of film. A high quality film will be more light sensitive and will have a finer grain than standard analog film. A good quality 8 mm analog film camera produces at best a film frame equal to 4-6 million pixels but a professional 16 mm film camera in combination with high quality film produces a frame comparable with a 16 megapixel digital photo. Basically, with a bigger film camera you will produce a higher resolution image but in order to have a clear image you also need to go to a film with a finer grain.

Like the digital noise (gaussian) we see with digital cameras the analog film camera also has its own noise and limitations. The biggest problem with an analog camera is grain. When you scan a filmstrip, the digitized image will show you numerous little coloured spots around your object. Especially in images with a dark background. These little blobs are the grain of the film. What happens is that when a light ray falls onto a film the chemicals react to the photons and a colour is produced but when light rays do not fall linear onto the chemicals or the light source is not strong enough (threshold) the chemical reaction is only partial. That specific part will not show the real colour but instead mostly have a grey, green or brown colour tone. Upscaled 16 mm filmstrips with a dark background tend to look like a muddy pond. Nevertheless the regions that received enough light will be totally clear.

Finally another problem with analog cameras is frame speed as it is never constant compared to a digital camera.


originally posted by: spf33

a reply to: A51Watcher

Apologies, I guess I was rushing ahead. I completely missed this post.

But, I believe your incorrect usage of PTM images was already fully addressed here:

www.metabunk.org...

Oh dear, going to play the Mick West card? Well, OK, if you insist, but actually you were doing better relying on your own knowledge.

A few quotes from Mick on the link you provided:

"I don't know the steps they used, but Here's some examples:"

His examples were created using Photoshop and do not look anything close to what PTM produces.

We agree that he doesn't know the steps we used, because he has never used PTM. Instead he relies on Photoshop, which, as explained before, was designed and is used to enhance photos, much like the popular filters used on selfies. Photoshop has no use in forensic image analysis.

The steps we used are the ones always used; they are standard practice with this technology.

"The actual "PTM" technique require multiple source images of the same object lit from different directions."

Here again Mick displays his lack of experience and ignorance of PTM technology.

Actually PTM does NOT require "multiple source images of the same object". Anyone who has ever used this software knows this to be false.
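For reference, the original PTM formulation (Malzbender et al., 2001) estimates the six per-pixel coefficients by least squares from photographs lit from known directions. Each pixel therefore needs at least six differently lit samples. The sketch below fits one pixel's coefficients from synthetic samples. The light positions and the "ground truth" coefficients are made up for illustration. Nothing here describes the specific software used by the Image Analysis Team.

```python
import math


def basis(lu, lv):
    """The six PTM basis terms for a projected light direction (lu, lv)."""
    return [lu * lu, lv * lv, lu * lv, lu, lv, 1.0]


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ptm(samples):
    """Least-squares fit of the six PTM coefficients for one pixel.

    samples: list of ((lu, lv), luminance) pairs taken under different,
    known light directions. At least six are needed.
    """
    if len(samples) < 6:
        raise ValueError("PTM fitting needs at least six differently lit samples")
    ata = [[0.0] * 6 for _ in range(6)]  # normal equations: (A^T A) c = A^T b
    atb = [0.0] * 6
    for (lu, lv), lum in samples:
        phi = basis(lu, lv)
        for i in range(6):
            atb[i] += phi[i] * lum
            for j in range(6):
                ata[i][j] += phi[i] * phi[j]
    return solve(ata, atb)


# Hypothetical setup: two rings of 8 lights at 30 and 60 degrees elevation.
true_coeffs = [-0.2, -0.15, 0.05, 0.3, 0.1, 0.6]  # made-up "ground truth"
lights = []
for elevation in (30.0, 60.0):
    r = math.cos(math.radians(elevation))
    for k in range(8):
        t = 2 * math.pi * k / 8
        lights.append((r * math.cos(t), r * math.sin(t)))
samples = [((lu, lv), sum(c * p for c, p in zip(true_coeffs, basis(lu, lv))))
           for lu, lv in lights]
fitted = fit_ptm(samples)
print([round(c, 6) for c in fitted])  # [-0.2, -0.15, 0.05, 0.3, 0.1, 0.6]
```

If all lights sit on a single ring at one elevation, lu² + lv² is constant. The lu², lv² and constant terms then become linearly dependent and the system is singular. That is why this sketch uses two elevations.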

But being unfamiliar with a particular software or subject has never stopped Mick. He will take a shot at explaining anything no matter how shallow his experience and knowledge may be.

Ever heard him say, "Sorry, I have no experience in that area"?

No, and it is likely you never will. He is always willing to give it his best shot and bluff his way along trying to bamboozle with BS.

No one knows everything about everything, but Mick never lets that stop him.

That is my take on Mick, but I will leave it to the actual professionals in this field to respond:

"I had never heard of Mick West, but he wrote some books about image manipulation. In 2018 he also wrote a piece about the videos of the Image Analysis Team. At A51Watcher's request I visited Metabunk.org. At first glance his elaborate comments give an impression of professionalism, but reading the article casts a totally new light on how Mr. West arrived at his self-serving conclusions.

Mr. West has no record of being an officially appointed video and image forensic analyst, nor is he an "official Photoshop expert". Without these qualifications Mr. West is a Photoshop amateur, and this shows in his research methodology. Note that a complete data analysis requires the original file. Without an original file there is no analysis, and the obtained evidence is unsubstantiated. I understand that Mr. West is trying to prove his point to SPF33, but why is he deliberately faking results? To sell more of his books?

Having read his video analysis in the article on: www.metabunk.org...

This is by no means a broad-based independent investigation, and I would like to make the following points, as Mr. West is comparing apples and oranges.

1. Mr. West does not possess the original file so his video analysis is totally useless.

We could stop here as the rest is irrelevant but for the sake of clarity we continue.

2. Mr. West used a completely irrelevant video. For his comparison he took a video that was already compressed and then further compressed by YouTube.

This means that he used an already manipulated, low-quality video to prove his point.

3. You do not have to be an expert to notice that the lighting conditions in the two videos are not comparable:

A) The Lazar video was totally dark with a bright shining UFO.

B) The New York at Night 1995 VHS video has light pollution.

There are multiple light sources near, behind and in front of the camera.

For proper analysis special attention should also be given to the following factors:

C) Camera angle and inclination?

D) Magnification used?

E) Type of lens?

F) Focus / shutter speed / other settings?

G) Light intensity?

H) Distance to the object?

I) Haze? Dust attenuates and scatters light reaching the lens.

J) Air turbulence at the time of recording?

K) Which type of VHS camera was used? Quality? Decay of the tape?

L) Date of the VHS-to-digital transfer?

M) VHS original or copy?

N) Compression?

O) Video format?

To conduct proper research all of the above items must be considered. Conditions have to be as similar as possible in order to reconstruct or replicate.

The only thing I have learned from this so-called video analysis is that Mr. West has absolutely no idea what he is doing but bluffs his way through it. He uses irrelevant, repeatedly compressed video material from YouTube to prove his statement, and therefore Mr. West disqualifies himself as an expert."

originally posted by: A51Watcher

The difference is not only determined by a huge difference in resolution, namely 320 x 240 compared with 1920 x 1080 pixels but also by bit depth. A high(er) bit depth allows for a smoother transition between colours.

Woefully ignorant.

Transcoding video footage to a higher bit depth will not increase its quality. It won't hurt, but it will never, ever help.

The resampling of the original pixels, especially when there's a huge 500% frame size increase, is what causes the apparent increase in quality.

In other words, an addition, alteration and distortion of the original information.
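Here is a minimal sketch of why transcoding to a higher bit depth cannot add information. Widening 8-bit samples to 16 bits changes the numbers but not how many distinct levels exist. Here the widening multiplies by 257, which maps 0..255 exactly onto 0..65535. The sample values and helper names are made up.

```python
def to_16bit(values_8bit):
    # 255 * 257 == 65535, so 0..255 maps exactly onto the ends of 0..65535.
    return [v * 257 for v in values_8bit]


def back_to_8bit(values_16bit):
    return [v // 257 for v in values_16bit]


samples = [0, 12, 12, 13, 128, 200, 255]
wide = to_16bit(samples)
print(len(set(samples)), len(set(wide)))   # 6 6
print(back_to_8bit(wide) == samples)       # True
```

The 16-bit container has room for 65,536 levels per channel, yet only the levels already present in the source are occupied. Any in-between levels would have to come from a later processing step such as interpolation.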

To say you're over complicating the issue would be a massive understatement.

This is very, very simple: your forensic "technique", though I hesitate to elevate it to that status, either works or it does not.

You claim to be able to reveal unseen details in the Lazar video, yet refuse to demonstrate your ability to reproduce your results on something verifiable.

Again, after you claimed to have revealed incredibly intricate details from a tiny blob of light, it should be a cakewalk to show, from the same source video, that this man has fingernails:

I came across these videos about Bob Lazar the other day and thought they might be interesting to share.

I heard they're from a documentary about him on Netflix.

This one too www.youtube.com...


a reply to: spf33

Ok before we move on to your next post I want to comment further on your insistence about using nearest neighbor interpolation.

As you saw in my reply, in real-world use the various interpolation algorithms do not produce much visible difference in shape and detail. Sure, a few small details differ with a couple of algorithms, but most do not, and overall the shape of the craft remains the same.

I said you were doing better relying on your own knowledge than playing the Mick West card because, in actual fact, forensic image analysts are required to use nearest neighbor interpolation. In any forensic image analysis provided to the court, they must also supply a full report of the software used and its settings.

So yes, technically you are correct that nearest neighbor is the most accurate algorithm to use when upscaling, but it is not that critical to the eye for discussion purposes.

Furthermore, your assertion that our images were produced by an emboss filter is completely inaccurate.

They were instead produced by BAS-Redfield.

Take the original image and process it with an emboss filter in any paint or photo program and you will clearly see the difference.

I suggest you get a copy of BAS-Redfield for your own imaging projects, and your own imaging knowledge will continue to grow.

If you can keep your self-acknowledged penchant for belligerence and nitpicking in check, professionals in the field will continue to answer your questions and aid in your education in image processing.

Next time I will move on to your further posts.


originally posted by: A51Watcher

If you can keep your self-acknowledged penchant for belligerence and nitpicking in check, professionals in the field will continue to answer your questions and aid in your education in image processing.

I do, though, appreciate your ability to craft a wonderful sentence.

And yes, a standard emboss filter uses the contrast of an image to remap contours and create a 3D effect, similar to your VLC-driven bas-relief, AKA BAS-Redfield.
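For comparison, a common textbook emboss is a 3 x 3 neighbourhood filter. Its kernel takes the brightness difference along one diagonal and adds a mid-grey offset, which produces the raised "3D" look described above. The kernel and the offset of 128 below are one conventional choice, assumed for illustration. This is not a reconstruction of BAS-Redfield, whose internals are not described in the thread.

```python
EMBOSS = [[-1, -1, 0],
          [-1,  0, 1],
          [ 0,  1, 1]]


def emboss(img, kernel=EMBOSS, offset=128):
    """Apply a 3x3 emboss kernel to a grayscale image (list of rows).

    Applied as a correlation (kernel not flipped); flipping this kernel
    only negates it, i.e. reverses the apparent lighting direction.
    Border pixels are handled by clamping coordinates to the image edge.
    Results are shifted by `offset` and clipped to 0..255.
    """
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    acc += kernel[dy + 1][dx + 1] * img[yy][xx]
            row.append(min(max(acc + offset, 0), 255))
        out.append(row)
    return out


flat = [[90] * 5 for _ in range(5)]
print(emboss(flat)[2][2])   # 128: a flat area becomes mid-grey
```

Because the kernel weights sum to zero, flat regions turn uniformly grey and only edges stand out. The resulting look depends on the edge direction relative to the kernel.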

Fingernails...

originally posted by: spf33

a reply to: Bspiracy

Bottom line for me: even disregarding the sketchy path of the original video, you can stack 100 images in 100 different color spaces or run the video through 100 different algorithms, and there is no rational way to go from this:

to this:

and then claim the results are some sort of scientific enhancement that reveals the true details of a tiny blob of pixels.

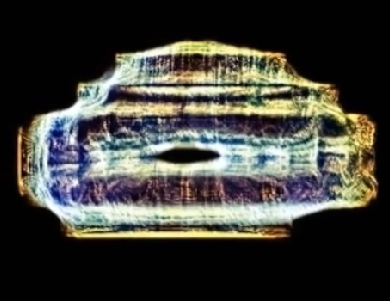

Actually there IS a way to go from this to this, but first you must begin with the correct starting frame, which you did not.

The correct starting frame is this:

not this one which you provided:

And then we have the Mick West version of what you should end up with using PTM even though he admittedly used Photoshop and not PTM:

Hilarious! If any professional Forensic Image Analyst turned in that image as a result of his analysis he would be fired on the spot!

Now then, even if we process the image you incorrectly identified as the starting image:

We end up with this result:

We did not use that as the starting image because we search the footage for the clearest possible frame to begin the analysis, one as free as possible from gravity lensing distortion.

The video we used for selecting the clearest frame possible is this one:

and you can see the selection process in the analysis video itself.
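The thread does not say how the clearest frame was picked. One common, generic approach, assumed here purely for illustration, scores each frame by the variance of its Laplacian and keeps the highest-scoring frame. Sharper frames have stronger local intensity changes, so they score higher. The frames below are tiny made-up grayscale arrays, and the helper names are hypothetical.

```python
def laplacian_variance(img):
    """Variance of the 4-neighbour Laplacian over interior pixels."""
    h, w = len(img), len(img[0])
    vals = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1]
                   - 4 * img[y][x])
            vals.append(lap)
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)


def clearest_frame(frames):
    """Return the index of the frame with the highest sharpness score."""
    return max(range(len(frames)), key=lambda i: laplacian_variance(frames[i]))


sharp = [[0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0],
         [0, 0, 255, 0, 0],
         [0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0]]
blurred = [[0, 0, 0, 0, 0],
           [0, 30, 60, 30, 0],
           [0, 60, 100, 60, 0],
           [0, 30, 60, 30, 0],
           [0, 0, 0, 0, 0]]
print(clearest_frame([blurred, sharp]))   # 1
```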

We are getting close to Gene Huff's fingernail image you are so impatient for, but there are still a few more replies you made to be answered before we get to that one.

Patience please.

originally posted by: spf33

originally posted by: A51Watcher

Can you please provide evidence that "there is a varying amount of movement of the camcorder"?

The movement of the craft is a separate element.

I think it's reasonable to assume the camcorder wasn't on a tripod or stabilized on the hood of a car or somewhere.

But, as you are the one performing an analysis technique that totally requires no movement of either the camera or the thing being recorded, the onus is on you to be certain the camera was not moving.

Fire off another email to Mr. Lazar and see if he can confirm the camera was stable...

No need to fire off an email to Bob to confirm the camera was stable. We have already independently confirmed that ourselves.

You see, there is software that can detect and eliminate camera movement.

When I was at Area 51 in 1991 I had no tripod and used the roof of my car to steady the camera. Despite my best efforts, I apparently did move the camera a few times.

In this clip, whenever you see a white line appear at the edge of the frame, it means camera movement has been detected in that direction and been corrected.

The few times movement was detected in this clip clearly show that camera movement cannot account for all the wild movements seen throughout it:

Cam movement clip

This same software was used on Bob's footage and zero movement was detected.
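The stabilisation software is not named in the thread. As a generic illustration only, and not a description of that software, you can estimate a global camera shift between two frames by brute force. Try every small integer offset and keep the one with the lowest mean absolute difference over the overlapping area. A best offset of (0, 0) means no movement was detected. The frames, the search range and the helper names are made up.

```python
def mean_abs_diff(a, b, dx, dy):
    """Mean |a - b| over the overlap, comparing a[y][x] with b[y + dy][x + dx]."""
    h, w = len(a), len(a[0])
    total, n = 0, 0
    for y in range(h):
        for x in range(w):
            yy, xx = y + dy, x + dx
            if 0 <= yy < h and 0 <= xx < w:
                total += abs(a[y][x] - b[yy][xx])
                n += 1
    return total / n


def estimate_shift(prev, curr, max_shift=2):
    """Return the (dx, dy) content shift that best aligns curr with prev.

    Ties are broken in favour of the smallest shift.
    """
    candidates = [(dx, dy) for dy in range(-max_shift, max_shift + 1)
                  for dx in range(-max_shift, max_shift + 1)]
    return min(candidates,
               key=lambda d: (mean_abs_diff(prev, curr, *d), abs(d[0]) + abs(d[1])))


# Toy 8x8 frame, then the same frame moved one pixel to the right.
prev = [[10 * x + 3 * y for x in range(8)] for y in range(8)]
curr = [[prev[y][x - 1] if x >= 1 else 0 for x in range(8)] for y in range(8)]
print(estimate_shift(prev, curr))   # (1, 0): movement detected
print(estimate_shift(prev, prev))   # (0, 0): no movement
```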

edit on 27-10-2019 by A51Watcher because: (no reason given)

originally posted by: spf33

originally posted by: A51Watcher

For a further understanding of our analysis...

I don't understand how running an emboss filter inside VLC is useful for any type of analysis and you've already been shown to be using the PTM method incorrectly.

If you watch the video again, you will see that an emboss filter was not used inside VLC. You will instead see that BAS-Redfield was used, which does not have an emboss filter.

Also, the PTM method was used in its correct order, as it always is in forensic image analysis.

Since you either cannot or will not run an emboss filter on the original, I will do it for you for comparison.

Original:

BAS-Redfield

Emboss filter from Paintshop:

Hopefully you can see the difference.

A further note: as a matter of course, forensic image analysts never use a paint or photo program as part of their work.

edit on 27-10-2019 by A51Watcher because: (no reason given)

originally posted by: spf33

a reply to: A51Watcher

Failing that, or in addition to, run your technique on this and show the results:

Well we are almost to the fingernail image you are so interested in.

We have many techniques at our disposal in Forensic work, not just one.

Let's examine the jet photo you posted above:

Next reply will have the Gene Huff fingernail Image you are so anxious for.

a reply to: A51Watcher

Precisely my point, thank you.

The results of your analysis technique performed on the airplane lights reveals nothing.

It's essentially meaningless.


originally posted by: spf33

a reply to: A51Watcher

Precisely my point, thank you.

The results of your analysis technique performed on the airplane lights reveals nothing.

It's essentially meaningless.

It reveals that it is not a UFO but instead an airplane.

Yet your conclusion is the analysis is meaningless.

How very odd.

First you request an analysis of lights in the sky (hoping we would conclude they were UFOs), yet when our analysis clearly shows they are from an aircraft, you conclude the analysis is meaningless.

Hilarious!!

edit on 31-10-2019 by A51Watcher because: (no reason given)

1. The only purpose of using IrfanView was to show BAS-Redfield processing in real time. Exceptionally, I am allowed to use my applications at home, but I am also bound by secrecy provisions. I will therefore not reveal the names of any undisclosed applications, filters or other pieces of (experimental) technology I work with daily.

2. Videolan was only used to show everyone what is happening on the screen. That's it!

3. SPF33 wants to prove his point about us being unable to enhance a few fingernails from Gene Huff's hand. If SPF33 is such an expert, why did he provide us with such a poor-quality image?

4. The Gene Huff interview is not even from the same videotape as the Lazar sighting videotape so any comparison is invalid.

5. Screen caps are very poor quality to work with, since screen capture literally records what it sees on the screen. It does not record the original video, so depending on your screen settings, pixels will appear different from the original.

6. It took us quite some time to do SPF33's fingernails because of his bad screen capture. So let us compare the image that was provided with a lossless frame extracted from the original video. What we immediately notice is this:

7. The Huff image was further compressed by YouTube's processing (compression) technology.

8. Saving your screen caps as JPG further degrades the image and introduces compression artifacts. Save images as PNG when you wish to preserve quality.

9. The Gene Huff image was recorded with a different camera than Bob used and is lit from 3 sides, much different than the lighting conditions in Bob's video.

10. As stated in the OP video, "Images suitable for PTM are preferably shot under low light conditions (e.g. at night, dim or dark environment)".

The Gene Huff image fulfills none of these conditions.

11. The first part of the Gene Huff interview was shot under different conditions and with a different camera than the part containing the Lazar UFO and therefore we can conclude that both videos sequences although edited in a professional studio are incomparable.

12. The original 720 x 480 pixel Gene Huff video was, after uploading, further compressed by YouTube's processing (compression) technology.

13. Next time you save images, please use a lossless format if you wish to preserve quality! Saving screen caps as JPG further degrades the image (by 50% at the standard setting) and, unlike a lossless format, JPG introduces compression artifacts. Next time, download the original video, extract the frame, and please never, ever again use screen capture.

14. We used the frame SPF33 provided us with but we faced a number of challenges:

15. Resolution: The SPF33 frame is 785 x 701 pixels instead of the original 720 x 480 pixels, so additional interpolation took place and the image has been stretched in both height and width.

16. Colours / colour space / Hue / Saturation: There is a substantial difference in the number of measured unique colours. The original video frame has a mere 11,378 unique colours, but the SPF33 frame shows 16,041. Hoorah! More colours! The fact is that more colours do not necessarily mean better image quality. They can also mean more colour and compression artifacts resulting from upsampling.

17. Gamut (subset of colours): The SPF33 frame shows lots of faded colours and almost no gamut. The lack of colours made it extremely difficult, if not "almost impossible", to find borders in the hand, and it made single fingers and their fingernails indiscernible.

18. Contrast level: We noticed over-contrast on the back of his hand. This was caused by a secondary light source shining from the top right. (see point 17)

19. Gamma / Brightness: Low gamma and low brightness cause very dark areas in which primary and secondary colour tones become more dominant and finer details are more difficult to detect. This makes it "almost impossible" to find borders in the hand and to make single fingers and their fingernails visible again.

20. Lighting (source): The lighting in the video comes from more than one direction (front & right). Natural light shadow is cancelled out by multiple light sources. (see point 17)

21. Motion: The hand was moving when this frame was captured and this caused severe motion blur.

22. Focus: During the interview the camera was aimed directly at the face, in particular at the pair of glasses (eyes), so the hand is out of focus (defocus), which led to some optical blur.

23. The interview sequence is incomparable with the scene containing the Lazar UFO. It was impossible to fully enhance the hand and almost impossible to make single fingers visible.
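To illustrate points 15 and 16, here is a minimal sketch (not the tooling used in this analysis) showing why resizing a 720 x 480 frame to 785 x 701 stretches it unevenly, and why interpolation can raise the unique-colour count. All helper names are hypothetical, the test image is a synthetic black/white checkerboard rather than the Huff frame, and bilinear interpolation is only an assumed example: the kernel actually applied by the screen scaler or capture tool is unknown. Nearest neighbour only copies existing pixels, while bilinear blends neighbours and creates colours that were never in the source.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def scale_factors(src_w: int, src_h: int, dst_w: int, dst_h: int) -> Tuple[float, float]:
    """Horizontal and vertical scale factors; unequal values mean a non-uniform stretch."""
    return dst_w / src_w, dst_h / src_h


def unique_colours(img: Image) -> int:
    """Count distinct RGB triples in the image."""
    return len({px for row in img for px in row})


def checkerboard(w: int, h: int) -> Image:
    """Hypothetical two-colour test image (black and white squares)."""
    return [[(255, 255, 255) if (x + y) % 2 else (0, 0, 0) for x in range(w)] for y in range(h)]


def nearest_resize(img: Image, dst_w: int, dst_h: int) -> Image:
    """Nearest-neighbour resize: every output pixel is a copy of an existing source pixel."""
    src_h, src_w = len(img), len(img[0])
    return [
        [img[min(int((y + 0.5) * src_h / dst_h), src_h - 1)][min(int((x + 0.5) * src_w / dst_w), src_w - 1)]
         for x in range(dst_w)]
        for y in range(dst_h)
    ]


def bilinear_resize(img: Image, dst_w: int, dst_h: int) -> Image:
    """Bilinear resize: output pixels are weighted blends of up to four source pixels."""
    src_h, src_w = len(img), len(img[0])
    out: Image = []
    for y in range(dst_h):
        # Map the output pixel centre back into source coordinates, clamped to the image.
        sy = min(max((y + 0.5) * src_h / dst_h - 0.5, 0.0), src_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, src_h - 1)
        fy = sy - y0
        row: List[Pixel] = []
        for x in range(dst_w):
            sx = min(max((x + 0.5) * src_w / dst_w - 0.5, 0.0), src_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, src_w - 1)
            fx = sx - x0
            channels = []
            for c in range(3):
                top = img[y0][x0][c] * (1 - fx) + img[y0][x1][c] * fx
                bottom = img[y1][x0][c] * (1 - fx) + img[y1][x1][c] * fx
                channels.append(round(top * (1 - fy) + bottom * fy))
            row.append((channels[0], channels[1], channels[2]))
        out.append(row)
    return out


if __name__ == "__main__":
    fx, fy = scale_factors(720, 480, 785, 701)
    print(f"horizontal x{fx:.4f}, vertical x{fy:.4f}")
    board = checkerboard(4, 4)
    print("source colours:", unique_colours(board))
    print("nearest colours:", unique_colours(nearest_resize(board, 5, 6)))
    print("bilinear colours:", unique_colours(bilinear_resize(board, 5, 6)))
```

The two factors differ (roughly 1.09 horizontally versus 1.46 vertically), which is the uneven stretch described in point 15. On the toy image, the nearest-neighbour result still has exactly the two source colours, while the bilinear result contains extra grey tones. This only demonstrates the mechanism; it does not reproduce the 11,378 versus 16,041 counts.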


edit on 31-10-2019 by A51Watcher because: (no reason given)

a reply to: A51Watcher

Oh, no!

I thought I said that image was airplane lights, I'm sorry I wasn't clear.

www.abovetopsecret.com...

"I'm wondering if you've ever tried this same type of analysis on a video of a known object, maybe something like some sort of aircraft at night?"

I was just interested in seeing the results of your techniques on a known image.
