Oh boy, is this a step in the wrong direction, even for China: AI to determine when someone is going to do something "bad".

www.express.co.uk...

Much like in the 2002 film Minority Report, starring Tom Cruise, authorities in the east Asian country want to catch criminals before they have done any wrongdoing.

The police in the surveillance state have enlisted the help of AI to determine who is going to commit a crime before it has happened.

Li Meng, vice-minister of science, said: “If we use our smart systems and smart facilities well, we can know beforehand… who might be a terrorist, who might do something bad.”

If a citizen visits a "weapon shop", the firm can combine this data with other data to assess that person's chance of committing a crime. Big data can identify highly suspicious groups of people based on where they go and what they do.

For example, if a citizen visits a weapons shop, the firm can combine this with other data to assess that individual's chance of committing a crime.

Cloud Walk spokesperson Fu Xiaolong said: “The police are using a big-data rating system to rate highly suspicious groups of people based on where they go and what they do.”

He added that the risk rises if the person “frequently visits transport hubs and goes to suspicious places like a knife store”.

AI is watching you...

a reply to: seasonal

This is one of the scariest things I have seen in a long, long time. To all the mooks who say, "If you're not doing anything wrong, what do you care," when big brother continues to invade our rights, this is exactly what is coming to a democracy near you. I expect continuous smaller "terrorist" attacks that will eventually be followed by AI law enforcement rounding up the "suspicious" individuals. On the bright side, maybe AI LEOs won't shoot so many of us.

a reply to: TobyFlenderson

Very well could be the plan.

Then it's "we can help": all we need to do is turn on this big data AI, and it will help keep you safe.

Ahhh, false flags: all that practice has led up to this, in theory so far.

originally posted by: seasonal

a reply to: Deaf Alien

Until the definition changes to you being the terrorist.

Which really is the crux of the issue, is it not?

Unless we are in a different timeline where the governments of the past, the oligarchs/monarchs/elitists of all kinds, have been benevolent and not ever used their power to suppress dissent.

Wouldn't that be great!!

originally posted by: seasonal

a reply to: Deaf Alien

Until the definition changes to you being the terrorist.

Maybe. But cops always investigate suspicious activities.

a reply to: Deaf Alien

This is not that situation. It is the gathering of everything that can be gathered and then using algorithms to determine who is going to do something bad.

originally posted by: seasonal

a reply to: Deaf Alien

This is not that situation. It is the gathering of everything that can be gathered and then using algorithms to determine who is going to do something bad.

I am sure the FBI, CIA and NSA do the same thing to protect us. Many terrorist acts have been foiled.

Only it's NOT like Minority Report.

Do THEY have "precogs"...

Psychic people in vats that "see into the future"?

No?

Then it's NOT like Minority Report.

a reply to: Deaf Alien

From the story.

“If we use our smart systems and smart facilities well, we can know beforehand… who might be a terrorist, who might do something bad.”

The Patriot Act does give the govt HUGE "tools" to combat terrorism. In fact, it allows prison without a court ruling and without lawyers.

Do you subscribe to the school of "if I am not doing anything wrong, I have nothing to fear"?

I do not subscribe to that.

originally posted by: DanteGaland

Only it's NOT like Minority Report.

Do THEY have "precogs"...

Psychic people in vats that "see into the future"?

No?

Then it's NOT like Minority Report.

No, they use wise old Chinese men sitting around in a Chinese restaurant drinking tea and smoking opium

They call them pre-Changs

forgive me for that

a reply to: seasonal

Meh. We already have this tech and it's been used already.

It was in the news and everything.

www.zerohedge.com...

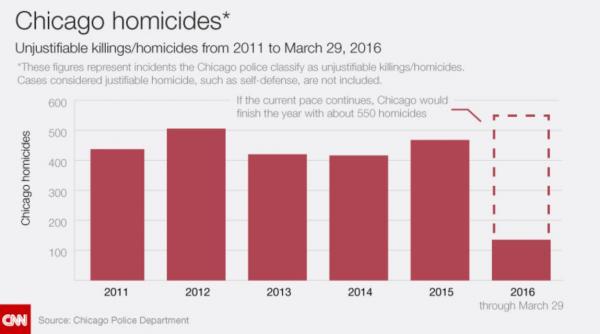

In this city’s urgent push to rein in gun and gang violence, the Police Department is keeping a list. Derived from a computer algorithm that assigns scores based on arrests, shootings, affiliations with gang members and other variables, the list aims to predict who is most likely to be shot soon or to shoot someone.

The police have been using the list, in part, to choose individuals for visits, known as “custom notifications.” Over the past three years, police officers, social workers and community leaders have gone to the homes of more than 1,300 people with high numbers on the list. Mr. Johnson, the police superintendent, says that officials this year are stepping up those visits, with at least 1,000 more people.

During these visits — with those on the list and with their families, girlfriends and mothers — the police bluntly warn that the person is on the department’s radar. Social workers who visit offer ways out of gangs, including drug treatment programs, housing and job training.

“We let you know that we know what’s going on,” said Christopher Mallette, the executive director of the Chicago Violence Reduction Strategy, a leader in the effort. “You know why we’re here. We don’t want you to get killed.”

But just having that big data isn't enough.

edit on 24-7-2017 by grey580 because: (no reason given)

It might work in China, but it won't work here. In China, the people are different, so they might be able to tell if a person is going to get out of hand. If you want a Chinese kid to do something, you talk to them respectfully; to get a northern European kid to do something, we yell at them. This continues into adulthood; our cultures are totally different.

Everyone in our country would be in prison if we used the Chinese technology to predict crimes in advance.
