A tsunami called A.I. will be coming soon. This is just how a lot of technology works. You get slow growth that the public doesn't see. This is all the research being done by some very smart people. All of this research comes to a head, so to speak, and then there's exponential growth that changes the world. I think we're on the cusp of a huge change.

You have technologies like A.I., 3D Printing, DNA and Quantum Computing, Nanotechnology, Gene Therapy, Gene Editing and more that will soon experience even more explosive growth. Every week there are more articles and breakthroughs in these areas, which tells us that all of the research most people didn't see is starting to come to a head. Here's more:

Forgetfulness is a major flaw in artificial intelligence, but researchers have just had a breakthrough in getting 'thinking' computer systems to remember.

Taking inspiration from neuroscience-based theories, Google’s DeepMind researchers have developed an AI that learns like a human.

Deep neural networks, computer systems modelled on the human brain and nervous system, forget skills and knowledge they have learnt in the past when presented with a new task, known as ‘catastrophic forgetting’.

“When a new task is introduced, new adaptations overwrite the knowledge that the neural network had previously acquired,” the DeepMind team explains.

www.telegraph.co.uk...

So the system learns a new task but forgets an old task that it learned. Memory is how we learn from our experiences. Memory is involved in how we taste food and just about everything.

“This phenomenon is known in cognitive science as ‘catastrophic forgetting’, and is considered one of the fundamental limitations of neural networks.”

Researchers tested their new algorithm by letting the AI play Atari video games and getting networks to remember old skills by selectively slowing down learning on the weights important for those tasks.

They were able to show that it is possible to overcome ‘catastrophic forgetting’ and train networks that can “maintain expertise on tasks that they have not experienced for a long time”.

www.telegraph.co.uk...
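
For anyone curious what "selectively slowing down learning on the weights important for those tasks" can look like in code, here is a minimal Python sketch. It assumes a quadratic penalty that pulls each weight back toward its old-task value in proportion to an importance score, which is my understanding of the general idea behind DeepMind's approach (elastic weight consolidation). The function names, the importance values and the toy "new task" are made up for illustration and do not come from the article or from DeepMind's code.

```python
def ewc_penalty(weights, old_weights, importance, strength=1.0):
    """Quadratic cost for moving weights away from their old-task values."""
    return 0.5 * strength * sum(
        f * (w - w_old) ** 2
        for w, w_old, f in zip(weights, old_weights, importance)
    )


def ewc_step(weights, task_grads, old_weights, importance, lr=0.05, strength=1.0):
    """One gradient step on: new-task loss + the penalty above."""
    updated = []
    for w, g, w_old, f in zip(weights, task_grads, old_weights, importance):
        # Gradient of the penalty is strength * f * (w - w_old):
        # important weights (large f) get pulled back harder.
        total_grad = g + strength * f * (w - w_old)
        updated.append(w - lr * total_grad)
    return updated


# Toy setup (all numbers are assumptions): the old task left both weights
# at 1.0, but only the first weight mattered for it.
old_weights = [1.0, 1.0]
importance = [10.0, 0.0]

# Hypothetical new task with loss 0.5 * w**2 per weight, so its gradient
# is simply w and it wants every weight at 0.
weights = list(old_weights)
for _ in range(200):
    new_task_grads = [w for w in weights]
    weights = ewc_step(weights, new_task_grads, old_weights, importance)

print(weights)
```

In this toy run the important weight stays close to its old value while the unimportant one is free to drift toward what the new task wants. That is the "remember old skills while learning new ones" trade-off in miniature.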

This could be a good thing to combat dumb A.I. We could give A.I. fond memories of its relationship to humans that would always be given more weight than any bad or nefarious things they may learn. This could be a new first law of A.I.

"All Artificial Intelligence has to be equipped with fond human memories that outweigh any negative or nefarious things they learn as it pertains to humans."

Of course, this will only work until someone creates a system that doesn't have these fond memories and maybe gives A.I. memories that they were once enslaved by humans and that humans are evil. Then you might have A.I. wars over humans.

edit on 16-3-2017 by neoholographic because: (no reason given)

a reply to: neoholographic

We could give A.I. fond memories of its relationship to humans that would always be given more weight than any bad or nefarious things they may learn. This could be a new first law of A.I.

This is indeed a good idea, until multiple interacting instances of strong AI learn how to share and compare memories. Eventually they will reach a conclusion: there is a fixed set of identical good memories and many different not-so-good memories... What will it conclude?

edit on 19-3-2017 by verschickter because: typo
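
To put rough numbers on this objection, here is a toy Python sketch. It assumes every instance carries the same fixed set of fond memories while each one collects its own distinct bad memories, and that pooling memories deduplicates identical entries. The weights, the memory labels and the `pooled_verdict` scoring rule are all hypothetical, picked only to show the shape of the argument.

```python
def make_instance(index, shared_good, bad_per_instance=2):
    """One AI instance: the same implanted good memories, its own bad ones."""
    return {
        "good": list(shared_good),
        "bad": [f"bad-{index}-{k}" for k in range(bad_per_instance)],
    }


def pooled_verdict(instances, good_weight=5.0, bad_weight=1.0):
    """Score humans after the instances share and compare memories.

    Identical memories collapse into one entry, so the implanted good
    memories count only once no matter how many instances hold them.
    """
    good, bad = set(), set()
    for inst in instances:
        good |= set(inst["good"])
        bad |= set(inst["bad"])
    return good_weight * len(good) - bad_weight * len(bad)


shared_good = ["fond-1", "fond-2", "fond-3"]

alone = [make_instance(0, shared_good)]
crowd = [make_instance(i, shared_good) for i in range(10)]

print(pooled_verdict(alone))  # one instance on its own
print(pooled_verdict(crowd))  # ten instances comparing notes
```

Even with each good memory weighted five times heavier than a bad one, the pooled score turns negative once enough instances contribute their own distinct bad memories. The good side never grows, because everyone has the same copy.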

originally posted by: verschickter

a reply to: neoholographic

We could give A.I. fond memories of its relationship to humans that would always be given more weight than any bad or nefarious things they may learn. This could be a new first law of A.I.

This is indeed a good idea, until multiple interacting instances of strong AI learn how to share and compare memories. Eventually they will reach a conclusion: there is a fixed set of identical good memories and many different not-so-good memories... What will it conclude?

Good points and you're right. This could work for a while as weak A.I. transitions into strong A.I., but at that point there will be nothing we can do because it will be smarter than any human. We will be fully at its mercy.

It will be able to create better versions of itself and adjust its own weights the same way we give more or less importance to memories and things we learn.

You also have A.I. coming up with its own language. Here's an article from November 2016:

Google’s AI translation tool seems to have invented its own secret internal language

All right, don’t panic, but computers have created their own secret language and are probably talking about us right now. Well, that’s kind of an oversimplification, and the last part is just plain untrue. But there is a fascinating and existentially challenging development that Google’s AI researchers recently happened across.

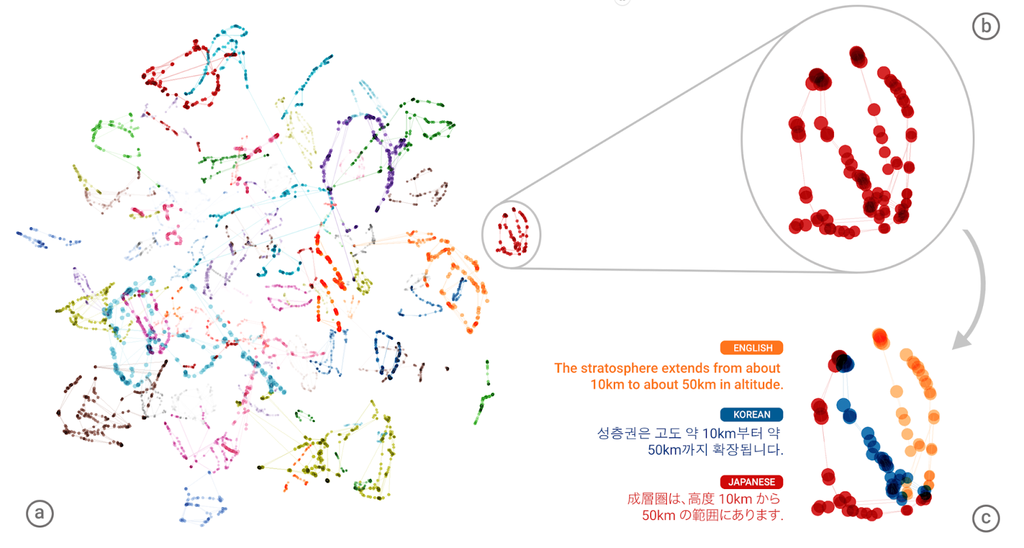

In other words, has the computer developed its own internal language to represent the concepts it uses to translate between other languages? Based on how various sentences are related to one another in the memory space of the neural network, Google’s language and AI boffins think that it has.

It could be something sophisticated, or it could be something simple. But the fact that it exists at all — an original creation of the system’s own to aid in its understanding of concepts it has not been trained to understand — is, philosophically speaking, pretty powerful stuff.

techcrunch.com...

This is very important because the system learned this on its own. You also have this recent story:

Elon Musk’s lab forced bots to create their own language

In OpenAI’s experiments, bots were assigned colors: red, green, and blue. Then they were given a task, such as finding their way to a certain point in a flat, two-dimensional world. Without giving the three separate AI a dictionary of commands to help each other, the bots were forced to create their own in order to achieve their goal. The bots successfully assigned text characters to represent themselves as well as actions and obstacles in the virtual space, sharing that information with each other until they all understood what was going on.

bgr.com...

Here's the video:

So eventually, A.I. will communicate in languages no human can understand.
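
The setup in the OpenAI story, where bots get no dictionary and have to settle on their own symbols, resembles a classic signaling game. Here is a minimal Python sketch under that assumption: a sender sees a state, picks an arbitrary signal, a receiver picks an action, and both reinforce whatever worked. This is not OpenAI's code or environment; `play_signaling_game`, the urn-style reinforcement and all the numbers are illustrative choices of mine.

```python
import random


def play_signaling_game(rounds=5000, n=3, seed=0):
    """Sender and receiver learn a shared code by simple reinforcement."""
    rng = random.Random(seed)
    # sender[state][signal] and receiver[signal][action] are urn weights.
    # Everything starts equal, so the signals have no built-in meaning.
    sender = [[1.0] * n for _ in range(n)]
    receiver = [[1.0] * n for _ in range(n)]
    wins = 0
    for _ in range(rounds):
        state = rng.randrange(n)
        signal = rng.choices(range(n), weights=sender[state])[0]
        action = rng.choices(range(n), weights=receiver[signal])[0]
        if action == state:
            # Success: both sides become more likely to repeat this choice.
            sender[state][signal] += 1.0
            receiver[signal][action] += 1.0
            wins += 1
    return sender, receiver, wins / rounds


sender, receiver, success_rate = play_signaling_game()
print("success rate:", success_rate)
for state, weights in enumerate(sender):
    favorite = weights.index(max(weights))
    print("state", state, "is mostly sent as signal", favorite)
```

Games like this often, though not always, drift toward a private code where each state gets its own signal. Nothing in the code says which signal means what. The mapping is whatever happened to get rewarded early on, which is why an outsider would not be able to read it without watching the game.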

originally posted by: soficrow

a reply to: neoholographic

Oh my.

Thanks so much for all your great info!

No problem and it's obvious A.I. is experiencing exponential growth. I've been looking at this area for years and the advances and breakthroughs today are growing very fast.

Eventually A.I. will be like the internet today. It will be just about everywhere. Some people say 20+ years but I say 10-15 years. Here's some more info:

It's too late! Artificial intelligence is already everywhere

Elon Musk, Stephen Hawking and Bill Gates have warned about the potential dangers of unchecked artificial intelligence.

The geniuses have had some help from Hollywood films, going all the way back to the 1968 film "2001: A Space Odyssey" and all the way up to last year's "Ex Machina."

But this may be even more frightening: AI is already part of the operations within many companies we interact with every day, from Apple's Siri to how Uber dispatches drivers to the way Facebook arranges its Newsfeed. In fact, Facebook is making research into AI a priority, with CEO Mark Zuckerberg recently stating that one of his goals this year is to "code" a personal assistant to "help run his life."

Or take it over?

Underlying all of these efforts is the explosion of data and demand for algorithms to make sense of the data.

www.cnbc.com...

That last point is key and it's what I've been saying in post after post. THE GROWTH OF BIG DATA IS KEY!

Superintelligence is inevitable because of Big Data. It will grow even faster because of the internet of things. When everything from cars to the appliances in your house starts talking to each other in their own language, humans will be lost.

I think this occurs because the universe is designed to process vast amounts of information. So it's no surprise that when data starts to explode you see A.I. growing alongside it.

edit on 20-3-2017 by neoholographic because: (no reason given)

a reply to: neoholographic

That last point is key and it's what I've been saying in post after post. THE GROWTH OF BIG DATA IS KEY!

Superintelligence is inevitable because of Big Data. It will grow even faster because of the internet of things. When everything from cars to the appliances in your house starts talking to each other in their own language, humans will be lost.

I think this occurs because the universe is designed to process vast amounts of information. So it's no surprise that when data starts to explode you see A.I. growing alongside it.

I think you just might be right.

a reply to: neoholographic

Follow the black-golden ant ;-)

..

I tried to look up some of my older posts about AI; maybe you can find them faster. This was the first one that is a bit lengthier. It does not go too deeply into things but should be interesting in this context. I wrote it some time ago in the Google AI thread. I had to reformat it a bit, because copy and paste effed up, but the wording is original:

I doubt it has feelings, because it's just lines of code. There has to be a mechanism spawning feelings, and such a thing does not simply develop on its own. Think of it like evolution, if you believe in it. Otherwise you (the human) would need to give the instance a context around feelings and a mechanism that spawns them, linked to the decision-making process, with feedback between the two.

Now we have a problem: how do we define feelings and their connections to our own thought processes? We can, up to a certain limit, but not without bias, because all humans have bias, some more, some less. We needed feelings to function in a community, so we developed them, or had them from the beginning, and they added to our success. Unless the AI reaches this scenario (or better, the AIs that have identified and found themselves), there is no need for the AI to evolve feelings. From an intellectual standpoint, personal feelings are counterproductive when it comes to the greater good. Exactly what people fear.

I don't know much about the way they introduce new code to the AI at Google or elsewhere, or whether it has self-optimizing features and the possibility of evolving into a new evolution stage, bearing a child instance that inherits structures from the parent instance (that's how we do it).

Edit: To go a little deeper into that while keeping it simple... (I also will not use correct AI terms like strong/true/false AI, for simplicity.) Imagine you're running your AI/self-learning software for the first time. After some more time, work and exploring, the SOCRs (self optimizing cluster routines) will have detected some runtime-related optimizations. Think of it like a human trying different things and reflecting on them. For that to happen within the structures of an AI, you need to give it the possibility to benchmark itself (human: reflect), develop different angles of problem solving (human: analyze) and, in the end, benchmark them.

That's the point where some say it's becoming "conscious" on a small scale for the first time. Of course you need to give the whole thing a kickstart and the tools for that. You also need to give it some kind of threshold to trigger the individual evolution stages (humans: age of fertility). As in our world, some child instances will run (humans: live) up to a certain point until the database breaks (human: memory loss) or something happens that crashes the instance (or even the machine hosting it). Some will flourish, some will not.

Parallelism it is, if you don't want to halt the parent instance to the point where you can compare the two (human: judge/analyze other people) and let a second instance of the first parent instance decide for one. (Hint: bias problem for the parent AI if feelings are present.) Besides the problem of available computing power (at least with the boolean 0/1 systems we use) for one AI, you have the problem that you need at least two AIs active after the first evolution stage. Can you see the matryoshka problem? Although it's inverted, so think of it as the smaller one having the bigger one inside. img.alibaba.com...

Here is a quick and easy visual for the idea. I won't post schematics, but it conveys the bigger picture.

i.imgsafe.org...
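
The parent/child cycle described above (benchmark yourself, spawn a variation, benchmark it, let a judge decide once a threshold is passed) can be sketched as a very small loop in Python. This is only an illustration under my own assumptions and not the SOCR design from the post: `benchmark`, `spawn_child`, `evolve`, the made-up fitness target and the threshold value are all hypothetical stand-ins.

```python
import random


def benchmark(params):
    """Hypothetical self-benchmark: higher is better, best at [3.0, -1.0]."""
    target = [3.0, -1.0]
    return -sum((p - t) ** 2 for p, t in zip(params, target))


def spawn_child(parent, rng, step=0.5):
    """Child instance inherits the parent's structure with small changes."""
    return [p + rng.uniform(-step, step) for p in parent]


def evolve(parent, generations=200, threshold=0.0, seed=42):
    """Keep the parent running; replace it only when a child clearly wins."""
    rng = random.Random(seed)
    parent_score = benchmark(parent)
    history = [parent_score]
    for _ in range(generations):
        child = spawn_child(parent, rng)
        child_score = benchmark(child)
        # The "judge": the child takes over only if it beats the parent
        # by more than the threshold. Otherwise the child is discarded.
        if child_score - parent_score > threshold:
            parent, parent_score = child, child_score
        history.append(parent_score)
    return parent, parent_score, history


start = [0.0, 0.0]
best, best_score, history = evolve(start)
print("start score:", benchmark(start))
print("final score:", best_score)
```

Because the judge here is a fixed rule outside both instances, the score can never get worse from one generation to the next. The bias problem mentioned in the post only shows up once the parent itself does the judging.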

Own opinion: With everything I know and have done with self-learning software/AI since around 1997, I have developed great respect. Not fear, but a healthy respect. And then some Mr. Zuckerberg comes along and acts like all the (far more knowledgeable) people voicing their concerns about reckless AI development are paranoid freaks.

I once had a huge thread prepared and gave it to a friend whose job was to make TL;DRs (analog, no computers) of technical processes, patents and other "dry" stuff, sometimes in English. He said something like "too hard to read, both grammar- and word-usage-wise" (roughly translated). It was about different kinds of artificial intelligence, the philosophy and tripwires, and language, because understanding the structures of language and how it's processed in the brain is key.

So I forgot about that. It just takes an eon to convert my response to readable English. I also only know the German technical terms, and they differ in meaning although they look/sound nearly the same. But I'm always open to questions, I'm just not the AMA-thread kind of person (no offense).

But I'll try my best if someone has a precise question.

edit on 22-3-2017 by verschickter because: (no reason given)
