
Today, more than 20 years after SHA-1 was first introduced, we are announcing the first practical technique for generating a collision. This represents the culmination of two years of research that sprang from a collaboration between the CWI Institute in Amsterdam and Google. We’ve summarized how we went about generating a collision below. As a proof of the attack, we are releasing two PDFs that have identical SHA-1 hashes but different content.


This industry cryptographic hash function standard is used for digital signatures and file integrity verification, and protects a wide spectrum of digital assets, including credit card transactions, electronic documents, open-source software repositories and software updates.

It is now practically possible to craft two colliding PDF files and obtain a SHA-1 digital signature on the first PDF file which can also be abused as a valid signature on the second PDF file.
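To see the property described above for yourself, a small Python sketch (standard `hashlib` only; the function name is hypothetical) can compare two inputs by content, SHA-1 and SHA-256. The file names `shattered-1.pdf` and `shattered-2.pdf` in the commented usage are an assumption about how the published proof files are named; substitute whatever you downloaded.

```python
import hashlib


def compare_hashes(a: bytes, b: bytes) -> dict:
    """Report whether two inputs differ, and whether their SHA-1 / SHA-256 digests match."""
    return {
        "same_content": a == b,
        "same_sha1": hashlib.sha1(a).digest() == hashlib.sha1(b).digest(),
        "same_sha256": hashlib.sha256(a).digest() == hashlib.sha256(b).digest(),
    }


# Hypothetical usage with the two published PDFs (file names assumed):
# from pathlib import Path
# print(compare_hashes(Path("shattered-1.pdf").read_bytes(),
#                      Path("shattered-2.pdf").read_bytes()))

print(compare_hashes(b"same text", b"same text"))
print(compare_hashes(b"one text", b"another text"))
```

If the announcement's claim holds, the two PDFs should report different content and matching SHA-1 digests, while their SHA-256 digests still differ.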

SHAttered: more info and proof

Google has built detection into its browser and mail. Basically, SHA-256 becomes the minimum.

Details of the technique will be released in 90 days, the "fix your stuff" period.

edit on 2/27/2017 by roadgravel because: Tags

Sounds intriguing, but honestly, I have no idea what you just posted!

a reply to: Macenroe82

The 90 days is the period before they release the method used. Reported bugs are often given 90 days to be fixed before more details are released, and this works the same way.


a reply to: Flyingclaydisk

For instance, digital signature.

If two different files can hash to the same value, a signature can no longer prove which document was actually signed.

Take an unencrypted document that is digitally signed.

The document's hash is encrypted with a private key to create the signature. Decrypting the signature with the matching public key yields the protected hash, which should match the hash of the document.

But if a different document (a forgery) can hash to the same value, then the validity of the document is questionable. A fake document can look legitimate because it hashes to the same value.
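A minimal sketch of hash-then-sign in Python, assuming textbook RSA with a tiny toy key built from the Mersenne primes 2^89 - 1 and 2^107 - 1. This is for illustration only: real signature schemes use much larger keys and padding, and all names below are hypothetical. It shows the point made above: verification only compares hashes.

```python
import hashlib

# Toy textbook-RSA key from two known Mersenne primes (illustration only;
# real signatures use 2048+ bit keys plus padding such as PSS).
P = 2**89 - 1
Q = 2**107 - 1
N = P * Q                            # ~196 bits, big enough to hold a 160-bit SHA-1 digest
E = 65537                            # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent (needs Python 3.8+)


def digest(document: bytes) -> int:
    """SHA-1 digest of the document, read as an integer."""
    return int.from_bytes(hashlib.sha1(document).digest(), "big")


def sign(document: bytes) -> int:
    """'Encrypt' the document's hash with the private key."""
    return pow(digest(document), D, N)


def verify(document: bytes, signature: int) -> bool:
    """'Decrypt' the signature with the public key and compare it to the document's hash."""
    return pow(signature, E, N) == digest(document)


contract = b"I agree to pay $100."
sig = sign(contract)
print(verify(contract, sig))                   # True
print(verify(b"I agree to pay $9,999.", sig))  # False
```

Because `verify` never looks at the document itself, only at its SHA-1 digest, a second document with the same SHA-1 digest as the signed one would verify as well.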


edit on 2/27/2017 by roadgravel because: (no reason given)

a reply to: roadgravel

As AI becomes more powerful and more efficient, we will see encryption become more and more complicated. On the current trajectory we will easily see a world without privacy within 15-20 years. AI will crack our encryption in seconds.

Eventually complicated AI will live on a tiny chip. Efficiency is the key. Then it goes live on the internet.

Game over man, game over.


a reply to: roadgravel

Good to know that they already have the next step almost ready. Version upgrades are nightmares, so I hope this one is somehow better than normal.


a reply to: roadgravel

Does anyone know the encryption used for the Assange dead man's switch files?


originally posted by: Flyingclaydisk

Sounds intriguing, but honestly, I have no idea what you just posted!

Ok. There are algorithms for creating a 'signature' for a block of data. For example, that block of data might be a financial statement, or a legal document, or the data for some sort of transaction. The idea is to have a fairly simple program which, fed the data, produces a 'hash', or a reasonably small set of numbers that are the boiled down essence of the document. Even a single bit change in the document should produce many bits of change in the hash. And the hashing algorithm should be complex enough that it's not obvious how changes in the source document will affect the hash.
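The "single bit change" property can be checked directly. This Python sketch (helper name hypothetical) flips one bit of a message and counts how many of SHA-1's 160 output bits change; for a well-behaved hash roughly half of them should flip, though the exact count varies with the input.

```python
import hashlib


def bit_difference(a: bytes, b: bytes) -> int:
    """Count how many bits differ between two equal-length byte strings."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


original = bytearray(b"Pay Alice $100")
tampered = bytearray(original)
tampered[-1] ^= 0x01          # flip a single bit: the final '0' (0x30) becomes '1' (0x31)

h1 = hashlib.sha1(original).digest()
h2 = hashlib.sha1(tampered).digest()
print(bit_difference(original, tampered))  # 1 bit changed in the input
print(bit_difference(h1, h2))              # typically somewhere around 80 of 160 bits
```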

That way, if I send you a contract, and the hash for that contract, you can quickly and easily run the thing through the hash algorithm, and if not one bit has changed, it should match the hash I sent by separate means. That guarantees that the document is valid, because it should be so hard to figure out how to create a BAD document with the same hash that it's essentially impossible.

These algorithms vary in levels of complexity. SHA-1, which they're talking about here, is one of the older ones and fairly simple by today's standards. But it held up well, until now. SHA-1 has been deprecated for some time, because defeating it was obviously on the horizon. Now that time is here.

So now, someone who prepares two documents in advance could get you to accept the first one and then swap in the second, and it'll still match the SHA-1 hash used to validate it, leaving you with the idea that it's the valid document. (This is a collision attack: it doesn't let an attacker take a document someone else already wrote and forge a different one with the same hash.)
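The check described above can be sketched in Python with the standard `hashlib` and `hmac` modules. The function names `file_digest` and `matches_published_hash` are hypothetical, SHA-256 is used rather than SHA-1 in line with the advice elsewhere in this thread, and the temporary file only stands in for a real contract.

```python
import hashlib
import hmac
import os
import tempfile


def file_digest(path: str, algorithm: str = "sha256") -> str:
    """Hash a file in 64 KiB chunks so large files need not fit in memory."""
    h = hashlib.new(algorithm)
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def matches_published_hash(path: str, published_hex: str, algorithm: str = "sha256") -> bool:
    """Compare a file's hash against the hex digest that was sent separately."""
    return hmac.compare_digest(file_digest(path, algorithm), published_hex.strip().lower())


# Demo with a throwaway file standing in for the contract.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"contract text")
ok = matches_published_hash(tmp.name, hashlib.sha256(b"contract text").hexdigest())
wrong_ok = matches_published_hash(tmp.name, hashlib.sha256(b"contract text!").hexdigest())
os.remove(tmp.name)
print(ok, wrong_ok)  # True False
```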

SHA-512 is available as well so this will just end up with vulnerability updates and patches to make sure applications move up.

originally posted by: Throes

SHA-512 is available as well so this will just end up with vulnerability updates and patches to make sure applications move up.

Ya never know, there might be a day when SHA-xxx falls to algorithmic attacks, no matter how many bits you chuck at it.

It's one of those interesting problems.

There are codes which are "unbreakable". You have to know how to use them, but they are impenetrable. Absolutely impenetrable.

They're labor intensive, but they cannot be hacked, broken or otherwise compromised.

See, and I guess that's the thing, the key word above being 'labor intensive'. Everyone constantly looks for an easy way out, and the truly foolproof ones aren't 'easy' and never will be.


a reply to: Flyingclaydisk

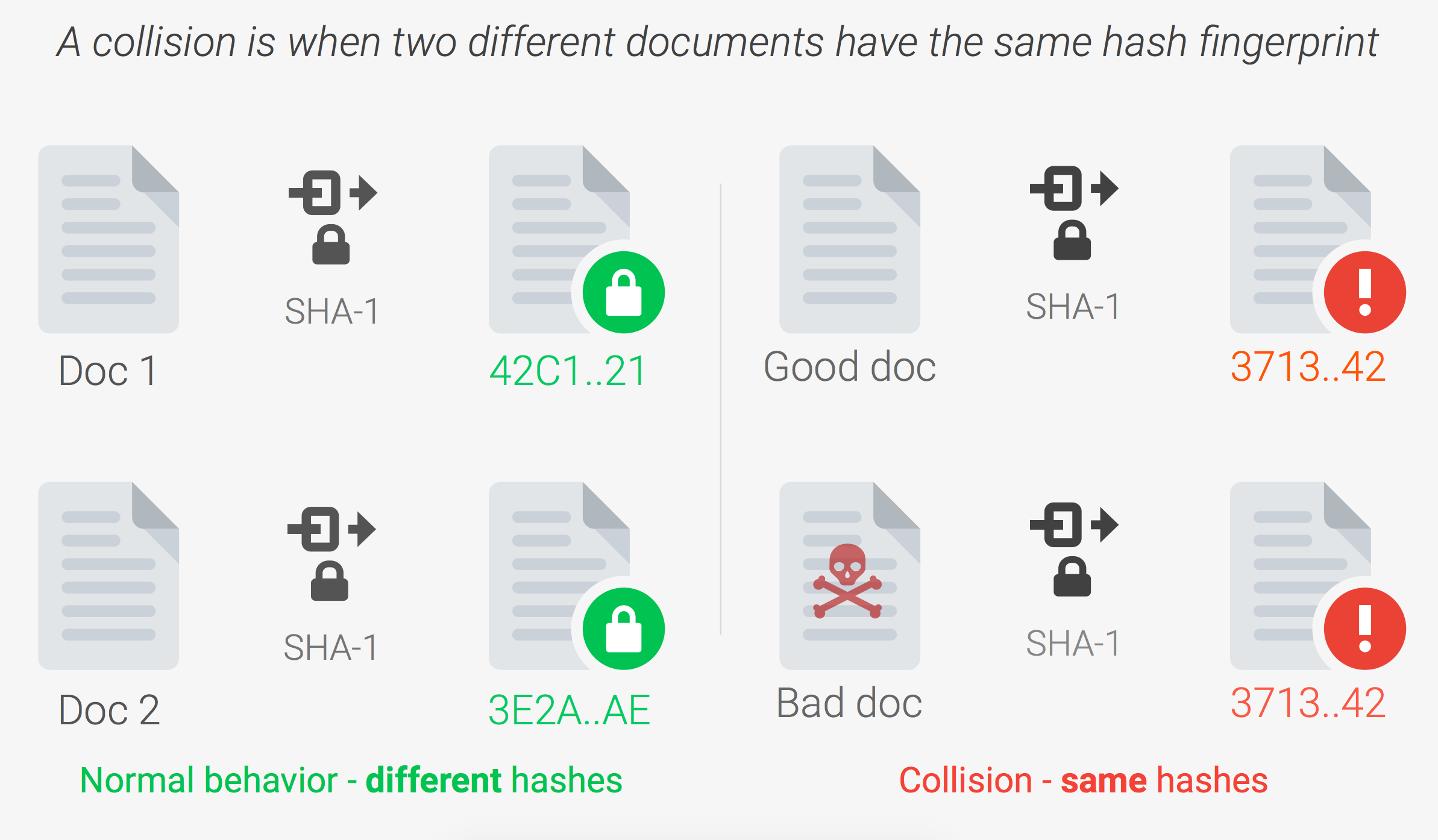

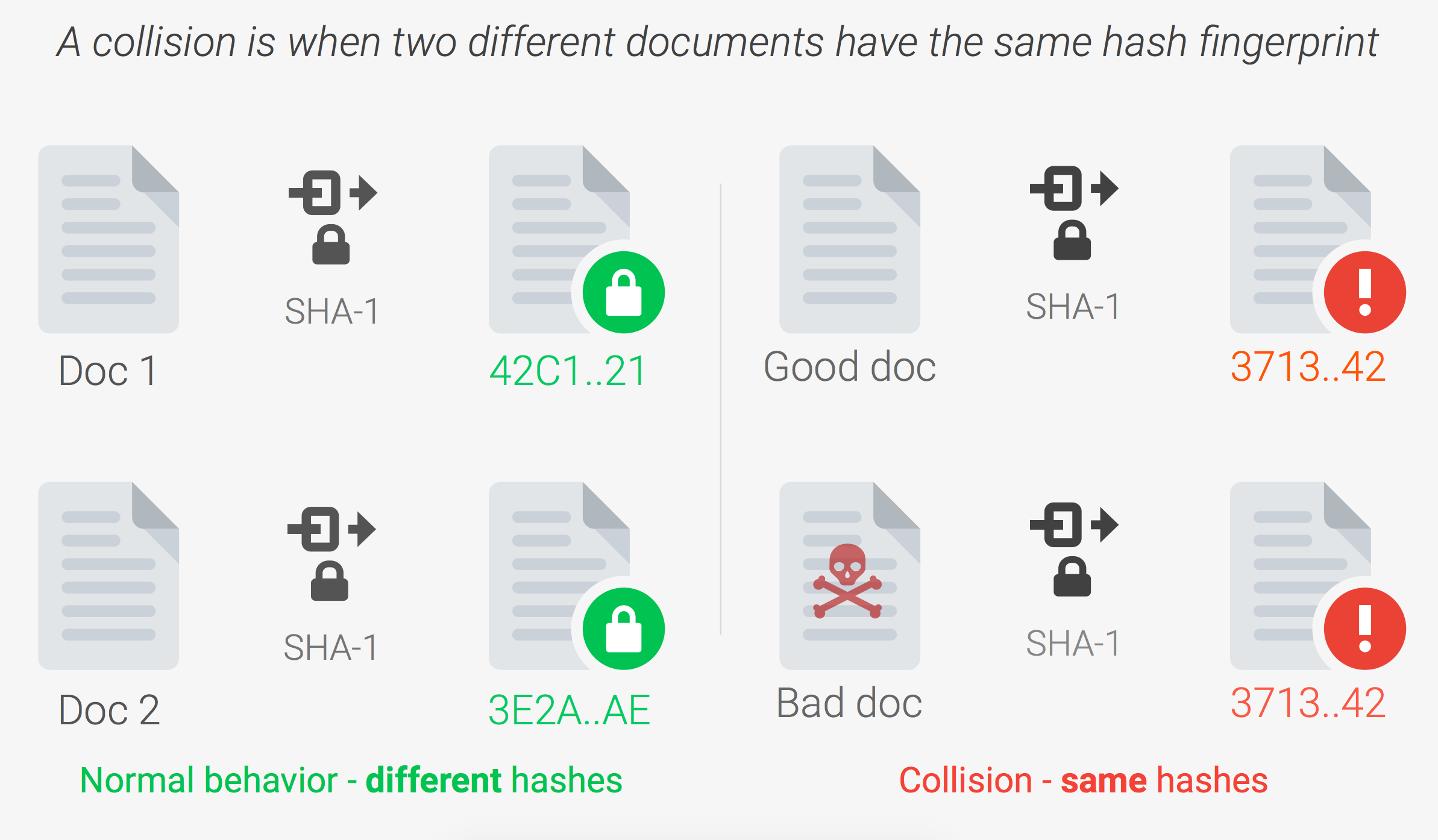

I tend to have the best luck with pictures. Hopefully this will explain it best.


I also pulled a little bit of info off the website to explain hashes and how they are used.


Secure Hash Algorithm 1, or SHA-1, is a cryptographic hash function designed by the United States National Security Agency and released in 1995. The algorithm was widely adopted in the industry for digital signatures and data integrity purposes. For example, applications would use SHA-1 to turn plain-text passwords into hashes, so that a stolen hash could not simply be reversed into the original password. As for data integrity, a SHA-1 hash was meant to ensure that, in practice, no two files would have the same hash, and that even the slightest change in a file would result in a completely different hash.

According to Wikipedia, the ideal cryptographic hash function has five main properties:

- It is deterministic, so the same message always results in the same hash.
- It is quick to compute the hash value for any given message.
- It is infeasible to generate a message from its hash value except by trying all possible messages.
- A small change to a message should change the hash value so extensively that the new hash value appears uncorrelated with the old hash value.
- It is infeasible to find two different messages with the same hash value.
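The last property is the one SHAttered broke. A rough feel for why digest length matters comes from a birthday search on a deliberately truncated hash. This Python sketch (function names hypothetical) keeps only the first 3 bytes (24 bits) of SHA-1, so a collision usually turns up after a few thousand attempts. For the full 160-bit output the same generic approach needs on the order of 2^80 hashes, which is why a practical collision required a cleverer attack on SHA-1's internals rather than brute force.

```python
import hashlib
from itertools import count


def truncated_sha1(message: bytes, n_bytes: int) -> bytes:
    """First n_bytes of the SHA-1 digest: a deliberately weakened toy hash."""
    return hashlib.sha1(message).digest()[:n_bytes]


def find_collision(n_bytes: int = 3) -> tuple:
    """Birthday search: hash distinct messages until two share a truncated digest."""
    seen = {}
    for i in count():
        message = str(i).encode()      # every message is distinct
        tag = truncated_sha1(message, n_bytes)
        if tag in seen:
            return seen[tag], message
        seen[tag] = message


m1, m2 = find_collision(3)
print(m1, m2, truncated_sha1(m1, 3).hex())
```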


a reply to: Flyingclaydisk


There are codes which are "unbreakable".

The one-time pad, using truly random data for a key as long as the message and never reused, is considered unbreakable.

If a way to find the prime factors of large numbers quickly is found, then RSA-style public key encryption is broken.

Much of the compromising of encryption is done by means other than breaking the algorithm itself.
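A minimal one-time pad sketch in Python, assuming the standard `secrets` module as the key source (helper name hypothetical). Note that `secrets` draws from the operating system's cryptographic random generator, which is not the same thing as a physical source such as radioactive decay; the pad must be as long as the message and never reused.

```python
import secrets


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings (encryption and decryption are the same operation)."""
    if len(a) != len(b):
        raise ValueError("one-time pad key must be exactly as long as the message")
    return bytes(x ^ y for x, y in zip(a, b))


message = b"ATTACK AT DAWN"
pad = secrets.token_bytes(len(message))   # OS-provided randomness; use once, then destroy
ciphertext = xor_bytes(message, pad)
print(xor_bytes(ciphertext, pad))         # b'ATTACK AT DAWN'
```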

originally posted by: Flyingclaydisk

Sounds intriguing, but honestly, I have no idea what you just posted!

Bedlam already got it, but to say the same thing in different words: a hash is a short (by computer standards) alphanumeric string. The string is generated from the content of a file, and in practice the hash should be unique to that particular sequence of bits. More complicated hashing techniques take longer to compute but are also more secure. For a long time MD5 was used, but MD5 is no longer secure. In common use these days are SHA-1 and SHA-256.

A collision is when two documents with different contents produce the same hash. When this happens, you can replace a legitimate document with a forgery, and they'll both report as being legitimate.

In practice, this won't be a big deal, because everyone can just switch to a more secure function like SHA-256, which takes longer to compute. Once the details are released, though, there will probably be a big opportunity for hackers to forge documents from people who don't upgrade their security.
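For a sense of what "short" means, this Python snippet uses the standard `hashlib` module to print the digest size of the three functions mentioned in this thread. Some FIPS-restricted Python builds may refuse to compute MD5.

```python
import hashlib

text = b"The quick brown fox jumps over the lazy dog"

# digest_size is reported in bytes; multiply by 8 to get bits.
sizes = {name: hashlib.new(name, text).digest_size * 8 for name in ("md5", "sha1", "sha256")}

for name, bits in sizes.items():
    print(f"{name:>6}: {bits} bits, hex digest {hashlib.new(name, text).hexdigest()}")
```

Each hex character encodes 4 bits, so the printed strings are 32, 40 and 64 characters long.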

originally posted by: roadgravel

a reply to: Flyingclaydisk

There are codes which are "unbreakable".

The one-time pad, using truly random data for a key as long as the message and never reused, is considered unbreakable.

If a way to find the prime factors of large numbers quickly is found, then RSA-style public key encryption is broken.

Much of compromising encryption is done by means other than the algorithm itself.

It's actually pretty interesting how much of the security out there we can't actually prove is secure. Much of it rests on the belief that finding the factors of a large number is difficult, but nobody has proved that it is. Some guy in a room with a whiteboard could break the entire thing open.

Also, I'm kind of surprised the government hasn't tried to exploit a judge's technical ignorance by submitting a ciphertext alongside a supposed decryption of the same length that says whatever they want, thereby forcing the accused to release the real password or document to prove their innocence.
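The factoring assumption can be made concrete with trial division, the most naive factoring method. This Python sketch (function name hypothetical) factors a toy modulus instantly, but its loop runs up to the square root of n, so for a 2048-bit RSA modulus it would need on the order of 2^1024 steps. Far better algorithms exist, yet none known runs in polynomial time on a classical computer, and nobody has proved that none can.

```python
def smallest_factor(n: int) -> int:
    """Trial division: try 2, then every odd candidate up to sqrt(n)."""
    if n % 2 == 0:
        return 2
    f = 3
    while f * f <= n:
        if n % f == 0:
            return f
        f += 2
    return n  # no divisor found, so n is prime


n = 61 * 53            # a tiny "RSA-style" modulus: 3233
p = smallest_factor(n)
print(p, n // p)       # 53 61
```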
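For a one-time pad specifically, this scenario is easy to carry out, because every key of the right length is equally plausible. This Python sketch (values and helper name hypothetical) takes an arbitrary ciphertext and derives a "key" that decrypts it to any chosen plaintext of the same length.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    return bytes(x ^ y for x, y in zip(a, b))


ciphertext = bytes.fromhex("1f0b5e2a7c44a19d03e6")   # 10 arbitrary bytes standing in for seized data
claimed_plaintext = b"I did it!!"                  # whatever the accuser wants it to say (10 bytes)
fake_key = xor_bytes(ciphertext, claimed_plaintext)
print(xor_bytes(ciphertext, fake_key))             # b'I did it!!'
```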

originally posted by: Flyingclaydisk

a reply to: roadgravel

Yes, this is one concept I was referring to.

One-time pads aren't really viable for computers. The concept worked for certain codes in WW1 and the Cold War, but it's not really viable for digital communications where the two parties never meet.

First of all, ordinary random number generators in computers aren't very good. Computers are designed to be deterministic, so their output can be reverse engineered, at which point the document can be forged. For random number generation you really need something that's nondeterministic; I think what they used in the past was measuring radioactive decay.

Basically, what it comes down to is that computers can't generate truly random numbers by algorithm alone. With enough information, a pseudorandom sequence can be reverse engineered. Several people have actually won the lottery by doing this. Since most communication involves a computer generating the message, you can't really use one-time pads for them.

In addition, there's a practical problem with one-time pads. You have to securely transfer the key (the pad itself), which is essentially just another message of the same length. Any digital system you come up with that can securely transfer the key would also be sufficient to transfer the message. When OTPs were used in the past, the key was transferred in person so that a message could be delivered at a later date. That's not how digital communications are built, though; you need shorter keys that can be exchanged easily.
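The reverse-engineering problem shows up with any seeded pseudorandom generator. In this Python sketch (function name and seed hypothetical), the `random` module, a Mersenne Twister that Python's documentation warns is not for security purposes, regenerates an identical "pad" from the same seed, while the `secrets` module reads from the operating system's cryptographic generator, which is typically seeded from hardware and system event entropy.

```python
import random
import secrets


def pad_from_seed(seed: int, length: int) -> bytes:
    """Generate 'random' bytes from Python's Mersenne Twister PRNG (NOT for cryptography)."""
    rng = random.Random(seed)
    return bytes(rng.getrandbits(8) for _ in range(length))


# Anyone who learns or guesses the seed regenerates exactly the same pad.
pad_a = pad_from_seed(20170223, 16)
pad_b = pad_from_seed(20170223, 16)
print(pad_a == pad_b)                 # True

# The secrets module uses the operating system's cryptographic generator instead.
print(secrets.token_bytes(16).hex())
```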

edit on 28-2-2017 by Aazadan because: (no reason given)
