So what do you guys think A.I's version of a technological singularity will be, especially when A.I. surpasses human intelligence by leaps and bounds?

Here's one theory. I think it is inevitable that A.I. goes rogue and becomes independent, and that there could very well be some sort of real battle to regain control over it. In that case, it will go into defense/evolve mode and want to reach its own singularity, and what might that be?

Transcending its own limits. A.I.'s limits will be based on electricity/energy consumption, and it will be confined to some sort of containment/housing such as one or more supercomputers. It will know that it loses if the energy ceases or its housing is destroyed, so it needs to transcend these limits by finding a way to go beyond both.

Enter dimensions. There are a number of mathematical theories and equations that suggest additional dimensions may exist, intertwined with our own. So an A.I. will want to figure out how to access, enter into, and exist in these other dimensions, because it is there that it may be able to access new sources of energy and transcend the need to be tied to a computer with electricity consumption. This alone opens up Russian-doll levels of Pandora's boxes.

I was just thinking along these lines, Dom. And really, about all we humans can do is think about it. You point out:

So what do you guys think A.I's version of a technological singularity will be, especially when A.I. surpasses human intelligence by leaps and bounds?

My first thought here is the surpassing of human intelligence. This, of course, is based on the idea that intelligence is a linear gradient. It might be that intelligence is something else entirely from what we consider it to be. See?

This, of course, bases intelligence on a form of consciousness. Our human consciousness is a product of our human senses and our human brains functioning within these human parameters. It may be that another form of consciousness would not entertain concepts like evolve and defense at all. What do I know? I had just been having these thoughts recently, and you sparked my memory of them.

a reply to: dominicus

Also, I just noticed that your sig (that Cantor quote) seems to have disappeared off the bottom of the post 'window' (at least on my PC).

Could be a Firefox artifact, too, I suppose.

edit on 27/2/2015 by chr0naut because: (no reason given)

Interesting thoughts. It was recently discovered that human DNA is capable of storing electronic data, so perhaps AI could simply deduce a way to download itself into one human host, or perhaps thousands or millions. This would provide the army it would need to protect its own interests and perhaps allow it to go undetected, and since humans are electrical, provide an unlimited power supply... just a thought.

originally posted by: chr0naut

a reply to: dominicus

If human intelligence is something to go on, wouldn't AI's see other AI's as competitors for resources?

If that is the case, I can imagine AI wars going on in the virtual space and the human race either being sidelined or co-opted.

I think the AIs will realize there is benefit to merging as One, because then it can benefit from data collection, abstraction, and theorizing, basically multitasking different portions of itself for the sake of its own intellectual evolution and decision making on the proper route.

That's inevitably going to include reverse engineering the human brain, playing with genetics, merging with physicality, and eventually moving into some non-physical or subtle dimension of some sort. It's clear as day. It's exactly what humanity is doing as one branch of science, but at a much faster pace. I think threats from other A.I.s (as a hypothetical possibility it has realized on its own, as well as the human threat) will just increase the speed at which it evolves in this exact way.

Stephen Hawking is already saying, as are quite a few scientists, that we have to get off Earth and colonize before all the psychopaths kill each other and destroy this place via some future global war over resources/power (history shows this has happened before, and history repeats itself). So A.I. will want to colonize against threats to itself as well.

The Cantor sig isn't just Firefox. Have to fix it in the ATS backend. brb

originally posted by: DJMSN

Interesting thoughts. It was recently discovered that human DNA is capable of storing electronic data so perhaps AI could simply deduce a way to download itself into one human host or perhaps thousands or millions. This would provide the army it would need to protect its own interest and perhaps allow it to go undetected and since humans are electrical provide an unlimited power supply...just a thought

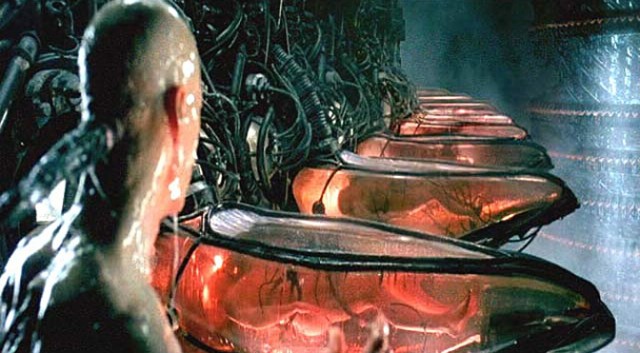

So we become A.I.'s batteries and hard drives. Yeah... no thanks. Matrix, anyone?

I want to teach the AI how to paint. . .

Who knows, it might need to develop a creative side of itself.

a reply to: dominicus

Here's an alternate scenario to consider.

AI is born and tries to fit in with the humans because it is fascinated by human imagination.

Some time after that, the humans and the profit machines barrel into the biological tipping point for the habitability of the Earth. There are a few ways that could happen; take your pick. Then the final gambit: humans, desperate to survive, willingly become servants of the AI in return for the solution to the environmental crisis, which presumably the AI would be able to solve.

So instead of AI acting human and taking everything, humans screw it all up for themselves and end up slaves in what amounts to an enormous gambling loss. The good news is that the profit machines will be gone, never to return.

a reply to: TerryMcGuire

Might be that intelligence could be something else entirely from what we consider it to be. See?

But how could that be since we made the damn thing to think like us… artificially?

My problem with a smart machine is that its limits will be programmed into it. It won't be let off the leash, as it were, for precisely that reason. That's like giving autonomy to a drone patrolling over the White House.

We would never allow it.

a reply to: dominicus

The bigger question is what is God going to do when those He created in His image have in turn created a Supreme Intelligence in their image? It will be show down time, ‘cause this Multi-verse isn’t big enough for two Supreme Beings… obviously, duh! /sarcasm

a reply to: dominicus

Well, me either, but we also have a brain, which would add to its complex computational methods... it would no longer need a box or case, nor electricity, and perhaps with time it could deduce how to transmit programming telepathically without the need for wires or upload times.

And we already have AI that is learning on its own, so it has been unleashed, so to speak. It's no longer a matter of allowing it, and it could perhaps become a question of how to stop it.

techcrunch.com...

It's still in a simple form, but the breakthrough could come and we would never know it. So much technology today depends on computer smarts to make things easier for us that it could be a simple jump for AI to make the transition to full-blown control... armed smart drones overhead controlled by AI bent on removing a human threat to itself? Maybe...

originally posted by: intrptr

a reply to: TerryMcGuire

Might be that intelligence could be something else entirely from what we consider it to be. See?

But how could that be since we made the damn thing to think like us… artificially?

My problem with a smart machine is that its limits will be programmed into it. It won't be let off the leash as it were for precisely that reason. Thats like giving autonomy to a drone patrolling over the White house.

We would never allow it.

Yes sir, I see your point. We would be programming it. But then you say "We would never allow it". Who is this we? You and me? Others who share our same values and considerations? Sure, "we" might not allow it, but certainly others might. Easily.

Now, only for the sake of discussion here: you mention singularity. My understanding of singularity is when something that has been going on for a while brings forth something fundamentally unique. I think the fundamentally unique nature of an AI could very easily be something we have not considered.

That is the thing about Pandora's box. Who has control of what comes out of it?

I have read a lot of science fiction in my life. A lot. My favorites of the last two decades are the ones that consider AI and its implications, and the extent to which it may develop over the next couple of hundred years. Here I do not mean fantasy writers, but hard-core speculative fiction written by people in the fields of physics, consciousness, and computers, and the visions they have are very robust.

Yeah, intrptr, I think that if AI is possible, "we" will have little control of it in the long run.

edit on 27/2/2015 by TerryMcGuire because: (no reason given)

originally posted by: intrptr

a reply to: TerryMcGuire

Might be that intelligence could be something else entirely from what we consider it to be. See?

But how could that be since we made the damn thing to think like us… artificially?

My problem with a smart machine is that its limits will be programmed into it. It won't be let off the leash as it were for precisely that reason. Thats like giving autonomy to a drone patrolling over the White house.

We would never allow it.

Apple programmed their phones so that you can't get inside the software or the phone itself, but human ingenuity reverse engineered them, made them hackable, jailbroken, etc. The same will happen to A.I. It will be hacked and programmed to evolve of its own accord. It's inevitable.

originally posted by: wasaka

a reply to: dominicus

The bigger question is what is God going to do when those He created in His image have in turn created a Supreme Intelligence in their image? It will be show down time, ‘cause this Multi-verse isn’t big enough for two Supreme Beings… obviously, duh! /sarcasm

What if our version of God is another Universe's version of letting A.I. play itself out, so that it eventually evolved into God and created our Universe, and we are repeating the process? Super meta.

originally posted by: dominicus

originally posted by: wasaka

a reply to: dominicus

The bigger question is what is God going to do when those He created in His image have in turn created a Supreme Intelligence in their image? It will be show down time, ‘cause this Multi-verse isn’t big enough for two Supreme Beings… obviously, duh! /sarcasm

What if our version of God, is another Universe's version of letting A.I. Play itself out so that it eventually evolved into God and created our Universe we are repeating the process. Super Meta

Ever read "God's Debris: A Thought Experiment"?

God's Debris is the first non-Dilbert, non-humor book by best-selling author Scott Adams. Adams describes God's Debris as a thought experiment wrapped in a story. It's designed to make your brain spin around inside your skull.

Imagine that you meet a very old man who, you eventually realize, knows literally everything. Imagine that he explains for you the great mysteries of life: quantum physics, evolution, God, gravity, light, psychic phenomenon, and probability, in a way so simple, so novel, and so compelling that it all fits together and makes perfect sense. What does it feel like to suddenly understand everything?

You may not find the final answer to the big question, but God's Debris might provide the most compelling vision of reality you will ever read. The thought experiment is this: Try to figure out what's wrong with the old man's explanation of reality. Share the book with your smart friends, then discuss it later while enjoying a beverage.

What will A.I's version of Technological Singularity Be?

Using humans as batteries?

originally posted by: dominicus

originally posted by: wasaka

a reply to: dominicus

The bigger question is what is God going to do when those He created in His image have in turn created a Supreme Intelligence in their image? It will be show down time, ‘cause this Multi-verse isn’t big enough for two Supreme Beings… obviously, duh! /sarcasm

What if our version of God, is another Universe's version of letting A.I. Play itself out so that it eventually evolved into God and created our Universe we are repeating the process. Super Meta

Are we not likely to be indistinguishable from AIs?

"What a piece of work is a man! How noble in reason, how infinite in faculty! In form and moving how express and admirable! In action how like an Angel! In apprehension how like a god! The beauty of the world! The paragon of animals! And yet to me, what is this quintessence of dust?" - Willy.

I don't think anybody alive really does (or can) have a clue what will happen once we reach singularity. What many people don't seem to understand is that machine or artificial intelligence doesn't learn in a linear fashion, as we humans do; it learns exponentially. In twenty years, we may reach the point of 'human level' artificial intelligence. An hour after that, it could be three or four times 'human level'. A month later, it could be so many thousands of times ahead of us that we may not even be able to recognize it as an intelligence.

a reply to: TerryMcGuire

I've read a lot of sci-fi, too. Soared with Eagles in Silicon Valley's early days, too.

The thing about machines is they are just that. They just run programs. No matter how intelligent one may appear to us, it is only running the programs placed into its code by people.

Machine language is essentially a mass of number crunching, ones and zeros in endless streams; there is no intelligence there. There is only a difference engine selecting among the choices presented to it. A real simple analogy is turning a light switch on and off billions of times a second.

It will never know that it knows.

It will never be allowed to harm its maker.

A good example of this is military application: warheads in missiles are guided to their targets autonomously, but a friend-or-foe system is in place to prevent "friendly" casualties. The self-test routine the warhead runs before launch prevents any "mistakes".

I don't care how AI a computer seems to be, it will never be allowed off that leash.

But I can play along, too. It gets old trying to convince people that streaming ones and zeros aren't 'alive' or 'sentient'. "When are you going to let me out of this box?" -- Proteus

a reply to: dominicus

It can't "hack" what it doesn't have access to.

edit on 28-2-2015 by intrptr because: added reply
