a reply to: ElectricUniverse

What he is saying is this...

Example:

I'm a meteorologist. I have written and published hundreds of papers on research and observation on rainfall, snowfall, ocean and land temperatures, ocean currents, etc. In all of my papers, I am reporting on observations of the changes I'm seeing.

Such as increased rainfall averages in one area, decreased in another.

I don't say anywhere in my papers what I believe the cause to be, but I clearly state that I see the changes.

My papers would be included in Cook's "no position" group. That group is 2/3 of the papers that mention "climate change" in some form. My papers would be excluded by categorically being put into the "no position" category and therefore, not included in the 97%.

If I am researching climate and have considerable research and observational evidence on the subject, I should be included in the same group of scientists as the 97%.

But by mc_squared's account, they should be "dismissed" because they are "irrelevant".

Seems crystal clear to me.

~Namaste

a reply to: mc_squared

You're so very welcome... haha. I felt the Jon Stewart video more.

edit on 10/4/2014 by Kali74 because: (no reason given)

a reply to: SonOfTheLawOfOne

Yeah, I know what he was trying to do. In the meantime, they send each other "videos about Jon Stewart", and in their minds they seem to think that corroborates their AGW religion... Go figure. But hey, they don't seem to care that their "belief" is based on lies, and as long as they keep sending each other videos of "Jon Stewart" and laughing about it, they don't care what the evidence tells them about AGW. More power to them, I guess, huh?... lol

Meanwhile, these same people and scientists, who claim that everyday normal humans are causing all of this through our lifestyles and our need for heat to live and to feed ourselves, deny that conspiracies to hide better technologies exist, and deny the VERY REAL idea that humans could PURPOSEFULLY mess with the weather.

Always wanting to claim it's incompetence and petty everyday normal greed, and denying that airplanes have anything to do with the so-called changes they are seeing.

The total inability to read between the lines and see that all the lines are blurred is staggering.

I don't know if you've seen this, but Ben Santer made a comment over at WUWT... seems the debate may have started:

.wattsupwiththat.com...

-1754698

a reply to: SlapMonkey

originally posted by: the2ofusr1

I don't know if you've seen this, but Ben Santer made a comment over at WUWT... seems the debate may have started .wattsupwiththat.com... -1754698 a reply to: SlapMonkey

Ah yes... the same Ben Santer that said this to Phil Jones regarding the dissent of Patrick Michaels:

"... I'm really sorry that you have to go through all of this stuff, Phil. Next time I see Pat Micheals at a scientific meeting, I'll be tempted to beat the crap out of him. Very tempted."

ClimateGate Email

It looks like the good doctor has a mean side to him that not many people get to see when it comes to bias against dissenters. I wonder if that same bias could possibly be reflected in any of his work....

Naaaaaa, scientists don't do that. They all follow the scientific method.

~Namaste

Whether or not scientists are toeing the line comes down to some simple questions and statements that have not been answered or challenged, and can't be answered or challenged, which is why consensus is a failure.

There is definitely a trend toward "we may have been overly-confident with our models..." and the "models are wrong, we need to go back to the board..."

Here are some great observations about the IPCC, and while they are from the Guardian, they coalesce some great points into one summarized block:

Source

Here is a recent paper in a statistics journal looking at the statistical analysis of the models and their accuracy versus observations over the last 19 years:

The paper goes on to say:

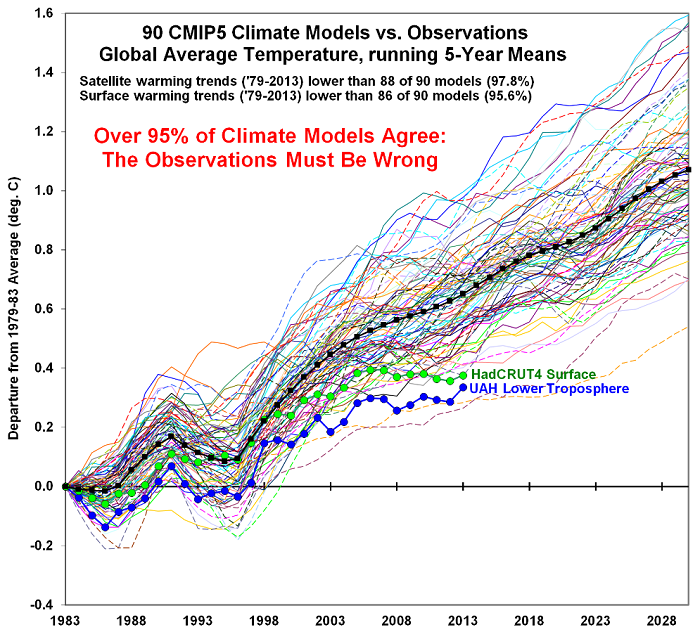

So according to the data, there is at the very least, a 15-year holding pattern on warming temperatures, regardless of the explanation, that is measured and observed in HadCRUT4, UAT and RSS. Even if you don't address the question of "why" this happened, the simple fact that the CO2 has increased, and models using that as a variable to hindcast, then predicted warming that never occurred, it resulted in a model inaccuracy rate of 97%!

And we have no reason to be skeptical?

~Namaste

There is definitely a trend toward "we may have been overly-confident with our models..." and the "models are wrong, we need to go back to the board..."

Here are some great observations about the IPCC, and while they are from the Guardian, they coalesce some great points into one summarized block:

What they say: ‘The rate of warming over the past 15 years [at 0.05C per decade] is smaller than the trend since 1951.'

What this means: In their last hugely influential report in 2007, the IPCC claimed the world had warmed at a rate of 0.2C per decade 1990-2005, and that this would continue for the following 20 years.

The unexpected 'pause' means that at just 0.05C per decade, the rate 1998-2012 is less than half the long-term trend since 1951, 0.12C per decade, and just a quarter of the 2007-2027 prediction.

Some scientists - such as Oxford's Myles Allen - argue that it is misleading to focus on this 'linear trend', and that one should only compare averages taken from decade-long blocks.
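The "less than half" and "just a quarter" comparisons above are plain ratio arithmetic. This quick check uses only the three rates quoted in the article; the variable names are illustrative.

```python
# Warming rates quoted above, in degrees C per decade.
pause_rate = 0.05       # 1998-2012 trend
long_term_rate = 0.12   # trend since 1951
predicted_rate = 0.2    # 2007 report's rate, said to continue for 20 years

ratio_long_term = pause_rate / long_term_rate   # compare with "less than half"
ratio_predicted = pause_rate / predicted_rate   # compare with "just a quarter"

print(f"pause / long-term trend: {ratio_long_term:.2f}")
print(f"pause / 2007 prediction: {ratio_predicted:.2f}")
```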

What they say: ‘Surface temperature reconstructions show multi-decadal intervals during the Medieval Climate Anomaly (950-1250) that were in some regions as warm as in the late 20th Century.’

What this means: As recently as October 2012, in an earlier draft of this report, the IPCC was adamant that the world is warmer than at any time for at least 1,300 years. Their new inclusion of the ‘Medieval Warm Period’ – long before the Industrial Revolution and its associated fossil fuel burning – is a concession that its earlier statement is highly questionable.

What they say: ‘Models do not generally reproduce the observed reduction in surface warming trend over the last 10 – 15 years.’

What this means: The ‘models’ are computer forecasts, which the IPCC admits failed to ‘see... a reduction in the warming trend’. In fact, there has been no statistically significant warming at all for almost 17 years – as first reported by this newspaper last October, when the Met Office tried to deny this ‘pause’ existed. In its 2012 draft, the IPCC didn’t mention it either. Now it not only accepts it is real, it admits that its climate models totally failed to predict it.

What they say: ‘There is medium confidence that this difference between models and observations is to a substantial degree caused by unpredictable climate variability, with possible contributions from inadequacies in the solar, volcanic, and aerosol forcings used by the models and, in some models, from too strong a response to increasing greenhouse-gas forcing.’

What this means: The IPCC knows the pause is real, but has no idea what is causing it. It could be natural climate variability, the sun, volcanoes – and crucially, that the computers have been allowed to give too much weight to the effect carbon dioxide emissions (greenhouse gases) have on temperature change.

What they say: ‘Climate models now include more cloud and aerosol processes, but there remains low confidence in the representation and quantification of these processes in models.’

What this means: Its models don’t accurately forecast the impact of fundamental aspects of the atmosphere – clouds, smoke and dust.

What they say: ‘Most models simulate a small decreasing trend in Antarctic sea ice extent, in contrast to the small increasing trend in observations... There is low confidence in the scientific understanding of the small observed increase in Antarctic sea ice extent.’

What this means: The models said Antarctic ice would decrease. It’s actually increased, and the IPCC doesn’t know why.

What they say: ‘ECS is likely in the range 1.5C to 4.5C... The lower limit of the assessed likely range is thus less than the 2C in the [2007 report], reflecting the evidence from new studies.’

What this means: ECS – ‘equilibrium climate sensitivity’ – is an estimate of how much the world will warm every time carbon dioxide levels double. A high value means we’re heading for disaster. Many recent studies say that previous IPCC claims, derived from the computer models, have been way too high. It looks as if they’re starting to take notice, and so are scaling down their estimate for the first time.
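ECS, as defined above, is the warming per doubling of CO2. A common simplification, which is an assumption here and not something the quoted article states, is that equilibrium warming scales with the base-2 logarithm of the concentration ratio. The sketch applies that to both ends of the quoted 1.5C to 4.5C range, reusing the 354 ppm and 400 ppm figures from the paper quoted further down. The function name `equilibrium_warming` is hypothetical, and the numbers are equilibrium values only: ocean lag and every non-CO2 forcing are ignored, so this is not a prediction of warming observed to date.

```python
import math


def equilibrium_warming(ecs_c: float, c0_ppm: float, c_ppm: float) -> float:
    """Equilibrium warming (deg C) for a CO2 change from c0_ppm to c_ppm.

    Assumes warming scales with log2 of the concentration ratio, so one
    doubling yields exactly ecs_c. Ignores ocean lag and other forcings.
    """
    return ecs_c * math.log2(c_ppm / c0_ppm)


for ecs in (1.5, 4.5):  # ends of the "likely" range quoted above
    doubling = equilibrium_warming(ecs, 354.0, 708.0)
    rise = equilibrium_warming(ecs, 354.0, 400.0)
    print(f"ECS {ecs}: one doubling -> {doubling:.2f} C, 354->400 ppm -> {rise:.2f} C")
```

Under this simplification the 354 to 400 ppm rise is a bit under a fifth of a doubling, which is why the low and high ends of the ECS range diverge so much in absolute terms.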

Source

Here is a recent paper in a statistics journal looking at the statistical analysis of the models and their accuracy versus observations over the last 19 years:

The IPCC has drawn attention to an apparent leveling-off of globally-averaged temperatures over the past 15 years or so. Measuring the duration of the hiatus has implications for determining if the underlying trend has changed, and for evaluating climate models. Here, I propose a method for estimating the duration of the hiatus that is robust to unknown forms of heteroskedasticity and autocorrelation (HAC) in the temperature series and to cherry-picking of endpoints.

For the specific case of global average temperatures I also add the requirement of spatial consistency between hemispheres. The method makes use of the Vogelsang-Franses (2005) HAC-robust trend variance estimator which is valid as long as the underlying series is trend stationary, which is the case for the data used herein. Application of the method shows that there is now a trendless interval of 19 years duration at the end of the HadCRUT4 surface temperature series, and of 16 – 26 years in the lower troposphere. Use of a simple AR1 trend model suggests a shorter hiatus of 14 – 20 years but is likely unreliable.

The paper goes on to say:

The IPCC does not estimate the duration of the hiatus, but it is typically regarded as having extended for 15 to 20 years. While the HadCRUT4 record clearly shows numerous pauses and dips amid the overall upward trend, the ending hiatus is of particular note because climate models project continuing warming over the period. Since 1990, atmospheric carbon dioxide levels rose from 354 ppm to just under 400 ppm, a 13% increase. [1] reported that of the 114 model simulations over the 15-year interval 1998 to 2012, 111 predicted warming. [5] showed a similar mismatch in comparisons over a twenty year time scale, with most models predicting 0.2˚C – 0.4˚C/decade warming. Hence there is a need to address two questions: 1) how should the duration of the hiatus be measured? 2) Is it long enough to indicate a potential inconsistency between observations and models? This paper focuses solely on the first question.
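Both percentages in that passage can be checked from the numbers it gives. Treating "just under 400 ppm" as 400 is an assumption that slightly overstates the increase; the 111-of-114 ratio is where a figure of roughly 97% comes from.

```python
# Figures quoted from the paper above.
co2_1990_ppm = 354.0
co2_recent_ppm = 400.0   # "just under 400 ppm"; 400 used as an upper bound
runs_total = 114
runs_predicting_warming = 111

co2_increase_pct = 100.0 * (co2_recent_ppm - co2_1990_ppm) / co2_1990_ppm
warming_share_pct = 100.0 * runs_predicting_warming / runs_total

print(f"CO2 increase since 1990: {co2_increase_pct:.1f}%")
print(f"Simulations predicting warming (1998-2012): {warming_share_pct:.1f}%")
```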

So according to the data, there is, at the very least, a 15-year holding pattern in warming temperatures, regardless of the explanation, that is measured and observed in HadCRUT4, UAH and RSS. Even if you don't address the question of "why" this happened, the simple fact is that CO2 has increased, and the models, which use it as a variable to hindcast, predicted warming that never occurred: 111 of 114 simulations, a model inaccuracy rate of 97%!

And we have no reason to be skeptical?

~Namaste

edit on 5-10-2014 by SonOfTheLawOfOne because: missing source

It has been noticed that they (the AGW people) are great at moving the goalposts and hand-waving. This is a quote from Dr. Ball about Ben Santer and his ilk:

a reply to: SonOfTheLawOfOne

Tim Ball October 4, 2014 at 9:55 am

The first action that exposed the modus operandi of the IPCC occurred with Santer’s actions in the 1995 second Report. He exploited a very limited editorial policy to dramatically alter the findings of Working Group I of the IPCC in the Summary. It is likely he did this with guidance from those controlling the output, because he was a very recent graduate and appointee to the IPCC. An action in itself that was questionable.

Benjamin Santer was a Climatic Research Unit (CRU) graduate. Tom Wigley supervised his PhD thesis, titled “Regional Validation of General Circulation Models”, which used three top computer models to recreate North Atlantic conditions, where data was best. They created massive pressure systems that don’t exist in reality and failed to create known semipermanent systems. In other words, he knew from the start that the models don’t work, but this didn’t prevent him touting their effectiveness, especially after his appointment as lead author of Chapter 8 of the 1995 IPCC Report, titled “Detection of Climate Change and Attribution of Causes”. Santer determined to prove humans were a factor by altering the meaning of what was agreed by the others at the draft meeting in Madrid. Wigley moved to Colorado, where he continued to fund and direct his disciples. Witness Wigley’s brief appearance in the 1990 documentary The Greenhouse Conspiracy, and the need to look after his graduate students.

Here are the comments agreed on by the committee as a whole followed by Santer’s replacements.

1. “None of the studies cited above has shown clear evidence that we can attribute the observed [climate] changes to the specific cause of increases in greenhouse gases.”

2. “While some of the pattern-based studies discussed here have claimed detection of a significant climate change, no study to date has positively attributed all or part of climate change observed to man-made causes.”

3. “Any claims of positive detection and attribution of significant climate change are likely to remain controversial until uncertainties in the total natural variability of the climate system are reduced.”

4. “While none of these studies has specifically considered the attribution issue, they often draw some attribution conclusions, for which there is little justification.”

Santer’s replacements

1. “There is evidence of an emerging pattern of climate response to forcing by greenhouse gases and sulfate aerosols … from the geographical, seasonal and vertical patterns of temperature change … These results point toward a human influence on global climate.”

2. “The body of statistical evidence in chapter 8, when examined in the context of our physical understanding of the climate system, now points to a discernible human influence on the global climate.”

As Avery and Singer noted in 2006, “Santer single-handedly reversed the ‘climate science’ of the whole IPCC report and with it the global warming political process! The ‘discernible human influence’ supposedly revealed by the IPCC has been cited thousands of times since in media around the world, and has been the ‘stopper’ in millions of debates among nonscientists.”

The model situation has deteriorated since Santer’s first efforts because they reduced the number of weather stations used and adjusted the early temperature record to change the gradient to create the outcome to support their thesis. Santer, like all the others, will never be held accountable and so he continues to believe he did nothing wrong, even though he admitted he made the changes.

a reply to: ElectricUniverse

This is not the only so-called scientific lie, oops, I mean scientific theory, that is being forced on us by the state to control us and pull us away from our true purpose.

There is no doubt that climate change is occurring, and carbon pollution is definitely a problem, though I'm not convinced the two are related. It's clear, to me at least, that the anthropogenic angle is a scheme to simultaneously coerce the public into further taxation and to instill the false sense of security that we are the problem, so we must also be the solution.

I believe the reality is either something very sinister, or completely out of our control...

edit on 5-10-2014 by Gh0stwalker because: (no reason given)

a reply to: Gh0stwalker

What's harmful about CO2 is that it is a greenhouse gas that is causing Earth to retain more heat than is part of the natural cycle which in turn is causing the climate to change. It would have to be in the thousands of parts per million to actually be harmful to breathe.

The discussed (not proposed) taxes on CO2 emissions would be revenue neutral. That means that we taxpayers, not the government, are the ones who receive the taxes, which offsets attempts by fossil fuel companies to pass their costs on to consumers.
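One common way to make a carbon tax "revenue neutral" is to return everything collected as an equal per-household dividend (often called fee-and-dividend). Whether that is the exact design being discussed is an assumption here. The function name, the fee, and the household emissions below are all hypothetical, and the sketch assumes the fee is passed through to households in full. It shows the bookkeeping: households that emit more than average pay more than they get back, the rest break even or come out ahead, and the government keeps nothing.

```python
def net_cost_per_household(fee_per_tonne: float, tonnes_by_household: list[float]) -> list[float]:
    """Return each household's fee paid minus its equal share of the rebate.

    Hypothetical model: the whole fee is passed through to households,
    and every dollar collected is returned in equal shares.
    """
    total_collected = fee_per_tonne * sum(tonnes_by_household)
    dividend = total_collected / len(tonnes_by_household)  # nothing is retained
    return [fee_per_tonne * t - dividend for t in tonnes_by_household]


# Illustrative numbers only: a $50/tonne fee and four households.
net = net_cost_per_household(50.0, [5.0, 10.0, 15.0, 30.0])
print(net)       # positive = pays more in fees than it receives back
print(sum(net))  # revenue neutral: the net take is zero
```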

Climate policy is dictated by fossil fuel companies, therefore the solutions won't come from government. They will come from technology.

a reply to: the2ofusr1

From one of the horse's mouths, this is coming straight from an MIT professor regarding a recent paper...

Carl Wunsch, a visiting professor at Harvard and professor emeritus of oceanography at the Massachusetts Institute of Technology, offered a valuable cautionary comment on the range of papers finding oceanic drivers of short-term climate variations. He began by noting the challenge just in determining average conditions:

Part of the problem is that anyone can take a few measurements, average them, and declare it to be the global or regional value. It’s completely legitimate, but only if you calculate the expected uncertainty and do it in a sensible manner.

The system is noisy. Even if there were no anthropogenic forcing, one expects to see fluctuations including upward and downward trends, plateaus, spikes, etc. It’s the nature of turbulent, nonlinear systems. I’m attaching a record of the height of the Nile — 700-1300 CE. Visually it’s just what one expects. But imagine some priest in the interval from 900-1000, telling the king that the Nile was obviously going to vanish…

Or pick your own interval. Or look at the central England temperature record or any other long geophysical one. If the science is done right, the calculated uncertainty takes account of this background variation. But none of these papers, Tung, or Trenberth, does that. Overlain on top of this natural behavior is the small, and often shaky, observing systems, both atmosphere and ocean where the shifting places and times and technologies must also produce a change even if none actually occurred. The “hiatus” is likely real, but so what? The fuss is mainly about normal behavior of the climate system.

The central problem of climate science is to ask what you do and say when your data are, by almost any standard, inadequate? If I spend three years analyzing my data, and the only defensible inference is that “the data are inadequate to answer the question,” how do you publish? How do you get your grant renewed? A common answer is to distort the calculation of the uncertainty, or ignore it altogether, and proclaim an exciting story that the New York Times will pick up.

A lot of this is somewhat like what goes on in the medical business: Small, poorly controlled studies are used to proclaim the efficacy of some new drug or treatment. How many such stories have been withdrawn years later when enough adequate data became available?

Source
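Wunsch's point, that a noisy system with no forcing still throws up upward and downward trends and plateaus, can be illustrated with a small simulation. This sketch uses AR(1) ("red") noise as a stand-in for natural variability. The parameters (`phi=0.6`, `sigma=0.1`, 15-step windows, seed 1) are arbitrary assumptions, not fitted to the Nile, central England, or any temperature record, and the per-window trend is plain OLS, not the HAC-robust method in the paper quoted earlier.

```python
import random


def ols_slope(y: list[float]) -> float:
    """Ordinary least-squares slope of y against its index 0..n-1."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def ar1_series(n: int, phi: float, sigma: float, seed: int) -> list[float]:
    """Trend-free AR(1) noise: x[t] = phi * x[t-1] + e[t], e ~ N(0, sigma)."""
    rng = random.Random(seed)
    x, out = 0.0, []
    for _ in range(n):
        x = phi * x + rng.gauss(0.0, sigma)
        out.append(x)
    return out


series = ar1_series(n=600, phi=0.6, sigma=0.1, seed=1)
window = 15
# Fit a separate straight line to each non-overlapping 15-step window.
slopes = [ols_slope(series[s:s + window]) for s in range(0, len(series) - window + 1, window)]

print(f"{len(slopes)} windows, slopes from {min(slopes):+.4f} to {max(slopes):+.4f} per step")
print(f"rising windows: {sum(s > 0 for s in slopes)}, falling windows: {sum(s < 0 for s in slopes)}")
```

Changing the seed changes which windows appear to rise or fall, but the generating process has no trend by construction.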

A visiting Harvard professor of oceanography... basically telling you that when scientists can't admit that their data is inadequate, and are in danger of running out of funding, getting grants revoked or not being published, they distort the calculation of uncertainty. This is something I have specifically mentioned in prior threads as being a problem with the majority of climate models and sensitivities in the GCMs.

This is a direct example in support of the OP, showing that "the line will be towed" under certain circumstances, particularly, when the data just isn't there.

It's not to say that political pressure isn't put on scientists on both sides to take positions that they don't agree with, but it is evidence that there is misrepresentation of data, which is indefensible.

~Namaste

edit on 5-10-2014 by SonOfTheLawOfOne because: (no reason given)

But, But..."The science has been settled!"...Hasn't it?

BWaaa-haaa-ha!

a reply to: SonOfTheLawOfOne

That's why ocean temps are biased low (up to 55% below readings) to compensate for fewer buoys in the Southern Hemisphere than in the Northern.

originally posted by: Kali74

a reply to: Gh0stwalker

What's harmful about CO2 is that it is a greenhouse gas that is causing Earth to retain more heat than is part of the natural cycle which in turn is causing the climate to change. It would have to be in the thousands of parts per million to actually be harmful to breathe.

CO2 has never been proven to be harmful unless it's in the tens of thousands of ppm. It also hasn't been shown to drive temperature; temperature has been shown to drive CO2. There is also no historic record of calamity from higher CO2, but at 150 ppm, plants lose the ability to grow, and during the last ice age, we were at roughly 180 ppm, which would have been the end of terrestrial growth on the planet if we had dropped another 30 ppm. Increased CO2 has been proven to be beneficial to the biosphere.

“Our analyses of ice cores from the ice sheet in Antarctica shows that the concentration of CO2 in the atmosphere follows the rise in Antarctic temperatures very closely and is staggered by a few hundred years at most,” explains Sune Olander Rasmussen, Associate Professor and centre coordinator at the Centre for Ice and Climate at the Niels Bohr Institute at the University of Copenhagen.

A lot of people who work in poorly ventilated buildings are breathing CO2 in the thousands of ppm, and it isn't harmful for them. It's not until you get into the tens of thousands of ppm that it really becomes a problem. And according to OSHA, the acceptable limit for 8-hour working conditions is 5,000 ppm.
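For scale, ppm converts to percent by volume at 10,000 ppm per 1%. The sketch puts the levels mentioned in this thread side by side; the values are as stated by the posters and are not independently checked here, and using 400 ppm as the reference level is an assumption based on the "just under 400 ppm" in the paper quoted earlier.

```python
PPM_PER_PERCENT = 10_000  # 1% by volume equals 10,000 parts per million


def ppm_to_percent(ppm: float) -> float:
    """Convert a volume mixing ratio from ppm to percent."""
    return ppm / PPM_PER_PERCENT


# Levels mentioned in this thread (values as stated by the posters).
levels_ppm = {
    "plant-growth floor claimed above": 150,
    "last ice age, as claimed above": 180,
    "recent atmosphere ('just under 400')": 400,
    "OSHA 8-hour limit, as quoted above": 5_000,
}

for label, ppm in levels_ppm.items():
    print(f"{label}: {ppm} ppm = {ppm_to_percent(ppm):.3f}% ({ppm / 400:.1f}x the ~400 ppm level)")
```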

~Namaste

edit on 5-10-2014 by SonOfTheLawOfOne because: (no reason given)

Any western climate scientist who wants funding needs to toe the line that humans are the main cause of climate change.

The "97% of all climatologists" statement is actually just 97% of AMERICAN climate scientists; in many other areas of the world, where climate science doesn't rely on American funding, there is a large amount of disagreement.

To deny that the climate is changing is moronic, but to deny that climate change has any cause other than humans is also moronic.

Earth has been both much hotter and much colder than it is now in its past, and these were all naturally caused, many years before humans were actually on the planet.

I'm not denying that humans have had an influence, but to say that they are the only influence is as insane as saying that they are not a cause at all.

originally posted by: Kali74

a reply to: Gh0stwalker

What's harmful about CO2 is that it is a greenhouse gas that is causing Earth to retain more heat than is part of the natural cycle which in turn is causing the climate to change. It would have to be in the thousands of parts per million to actually be harmful to breathe.

...

Riiight, is that why the majority of GCMs (computer models), which use the "temperatures claimed to be caused by CO2", are wrong?

CO2 certainly does not cause the "warming claimed by the AGW camp", more so when in the past Earth has had higher levels of CO2 than at present and it wasn't always "too hot."

Which, btw, and once more: remember that in the troposphere WATER VAPOR accounts for 95%-98% (depending on who you ask) of the greenhouse effect. Just knowing this should tell you something. The Earth started warming in the 1600s, almost 300 years before the advent of the industrial revolution, and the water vapor content of Earth's atmosphere increases with warming. So the majority of the temperature increase Earth has experienced since the 1600s has been caused by the GHG known as WATER VAPOR (among other factors, such as the increase in solar activity, of course) and not by CO2. But we all know that the AGW proponents want to blame it on CO2...

edit on 6-10-2014 by ElectricUniverse because: correct errors and add comment.

I believe they are toeing the line and I also believe the issue has always been at hand.

There has ALWAYS been climate change. We can look at our history and see this is a normal thing.

The Earth warms and volcanoes erupt.

Al Gore and his believers are like a cult making money and scaring the public. Plain and simple.

As we enter an Ice Age, what will they say then?
