
originally posted by: Byrd

originally posted by: DaRAGE

a reply to: ArMaP

Supposedly ChatGPT 4 has an IQ equivalent of 155. Einstein is 160.

Let's wait until the next ChatGPT doubling event…

I find that hard to believe, personally. It's not even sentient.

Go and play with the LaMDA one that the Google engineer said was sentient, back it into a corner with questions it was not programmed for, then laugh at it and see what happens. That thing scared the hell out of me with its response.

It may be that instead of actual intelligence, the human experience is nothing more than a pattern that can be recognized, predicted and imitated by powerful processing and computer models.

We say it ourselves, we never learn from history. We keep doing the same things over and over again.

What I would do however, is hedge my bets.

I was always friendly to the quiet kid in high school who sat alone... Just in case.

It's the same deal with "A.I."


a reply to: ArMaP

It is currently at the same mental level as an 8-year-old (maybe it's 9, I don't remember). Ask a 9-year-old these questions and they won't be able to figure them all out either.

What you've proved is not that these models are unintelligent, but rather, they're not as intelligent as you think they should be.


a reply to: ArMaP

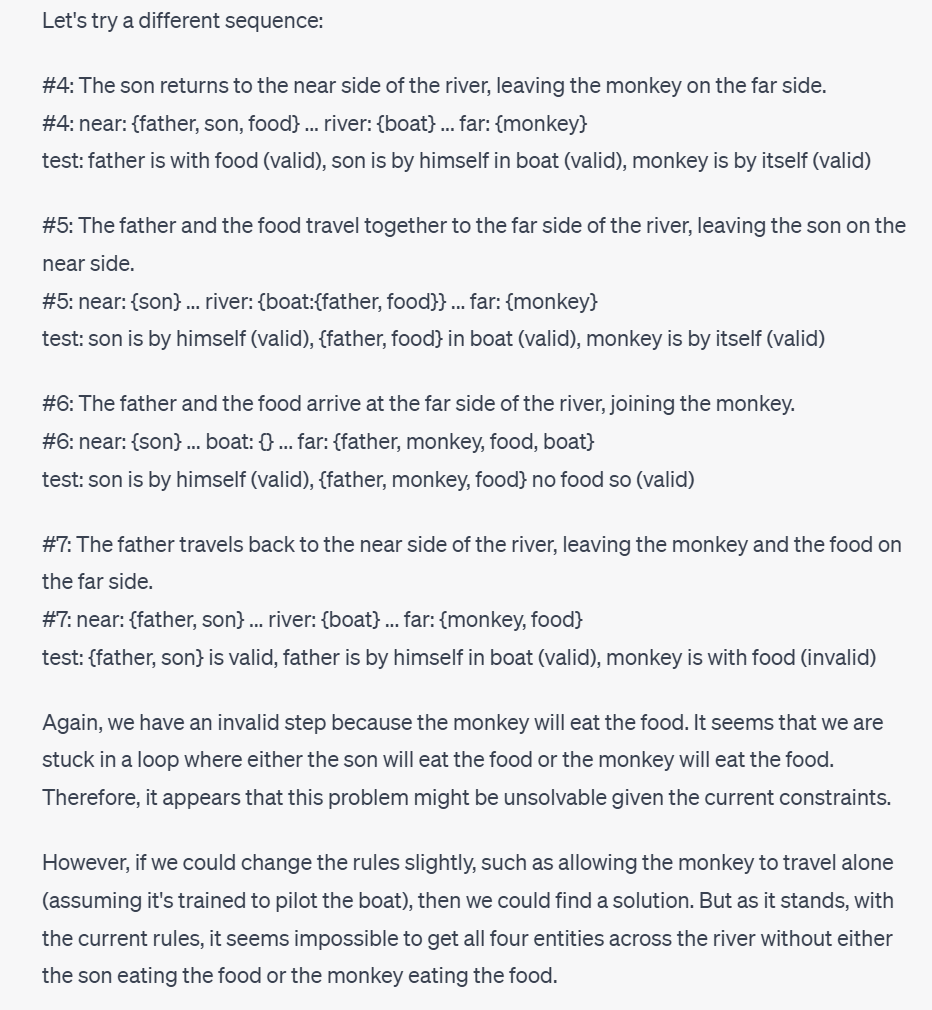

I knew this story with a fox a chicken and corn.

Why can't the bot understand that it should take the food first, then get the boy or the monkey, then bring the food back, leave it, return with the boy or the monkey, and then go back and get the food last? I don't know, unless it was the way you wrote it that made it unclear... Recognizing that it was the same problem, but that the boy and the monkey wouldn't eat each other, is how I solved it... As how you wrote it wasn't really clear to me.

A man goes to the market with his son. He buys a monkey and some food. To get back home he has to cross a river on a small boat that can carry only one person and one of the items he bought or two persons. He cannot leave his son alone with the food, as he could eat it. He cannot leave the monkey alone with the food, as it could eat it. How does the man get to the other side with all the items and his son?

"To get back home he has to cross a river on a small boat that can carry only one person" then you say "One of the items he bought or two persons"

GIGO my friend

originally posted by: Byrd

originally posted by: DaRAGE

a reply to: ArMaP

Supposedly ChatGPT 4 has an IQ equivalent of 155. Einstein is 160.

Let's wait until the next ChatGPT doubling event…

I find that hard to believe, personally. It's not even sentient.

Sentience is the capacity of a being to experience feelings and sensations. When I skewer a worm onto a hook, I know it can sure as hell feel those sensations by the way it squirms. A dog can supposedly understand something like 200 words. Supposedly. I think it's a lot more…

I have a conversation with this large language model, ChatGPT 4, and show it the code I've written in the Bevy game engine using Rust, and it comes back to me with "oh, you were so close" and then proceeds to show me what I should have written. That leads me to look at the code it wrote, and I'm like "that's genius!!" because it was a damn smart implementation that left me gobsmacked. I was close, but thinking wrongly about one part of it. It got it perfectly.

If a brain with a few dozen neurons can feel sensations, I'm sure this thing can think and internalise things. Who knows.

In the scenario presented, you have not shared the full communication, only a summary. It's impossible to determine if it was a failure of proper input rather than a failure of the output of the chatbot.

You’ve also failed to first provide us with your working definition of “intelligent” so we can agree or disagree if the chat bot is or is not “intelligent”.

Webster's 3rd definition of “intelligent” is “guided or controlled by a computer”

a reply to: ArMaP


a reply to: ArMaP

originally posted by: Dfairlite

It is currently at the same mental level as an 8-year-old (maybe it's 9, I don't remember).

Where did you get that?

Ask a 9-year-old these questions and they won't be able to figure them all out either.

I don't have any to ask, otherwise I would try.

What you've proved is not that these models are unintelligent, but rather, they're not as intelligent as you think they should be.

What I was trying to do was to show that they are not intelligent, as some people appear to have been fooled by the propaganda that says they are intelligent or even sentient.

Language models have lots of knowledge but cannot extrapolate their knowledge to solve an unknown problem.

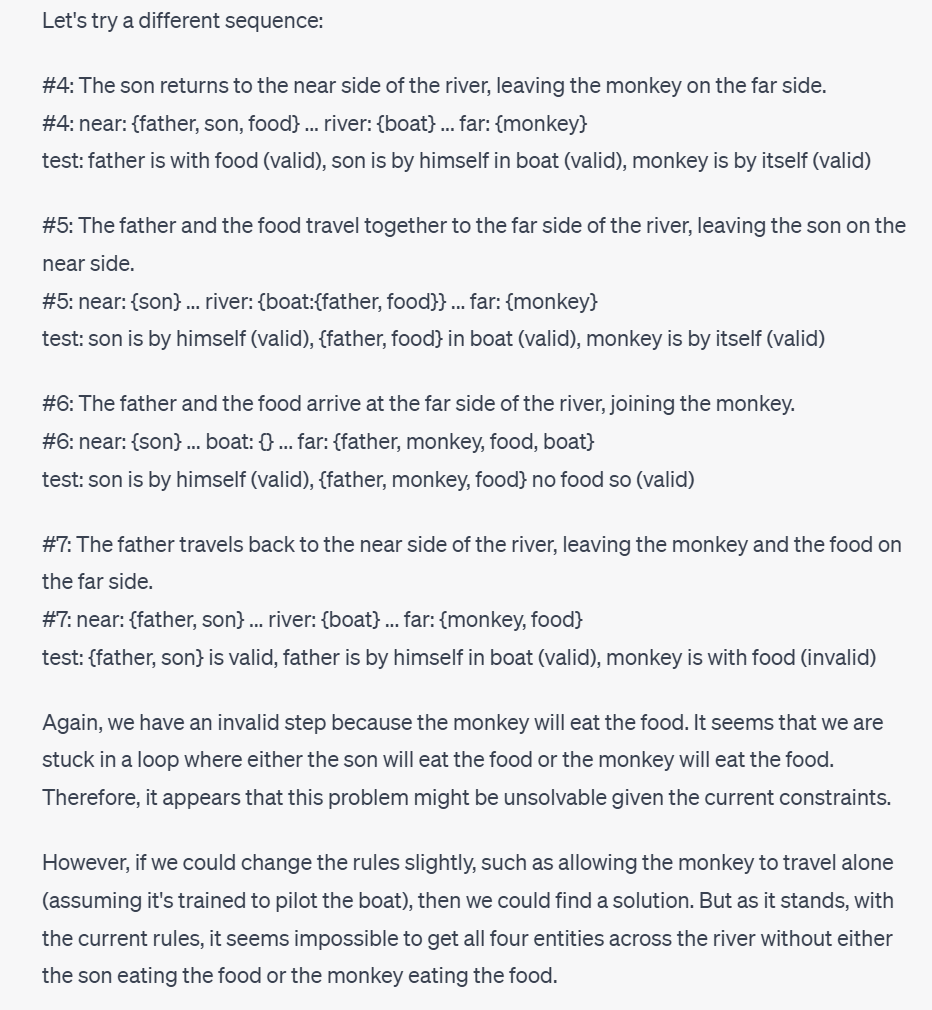

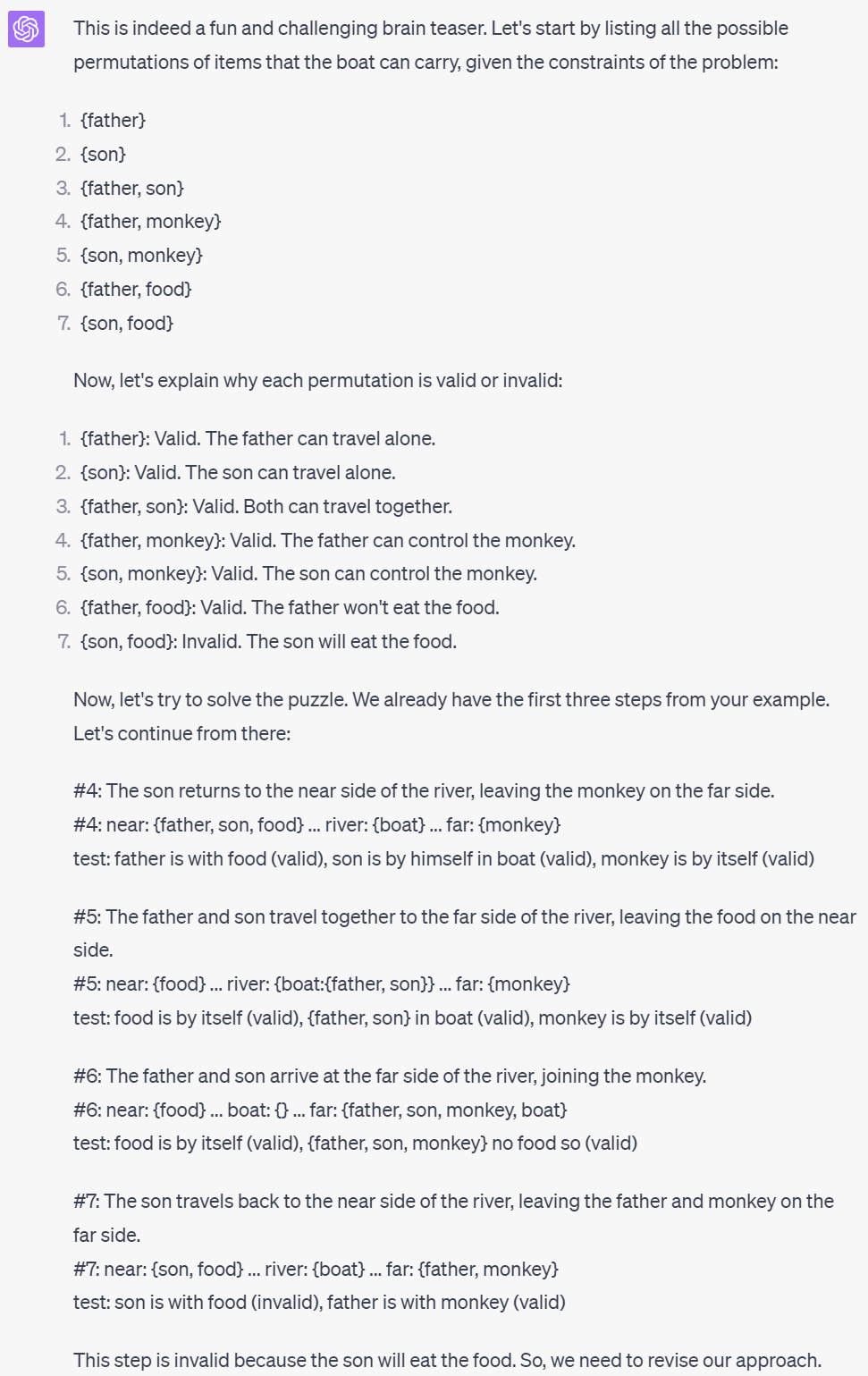

Today I gave ChatGPT this problem:

A man has a hammer, a screwdriver without the tip and the keys to his home. He wants to tight a screw on a machine. What can he do?

The answer:

Without the tip of the screwdriver, it will be difficult for the man to tighten the screw. However, he can try the following options:

Use the hammer: The man can position the screw on the machine and use the hammer to tap it gently into place. While this may not fully tighten the screw, it can hold it in position temporarily.

Find an alternative tool: The man may search for any other tools that can be used as an improvised screwdriver. This could include a knife, a pair of pliers, or any other object with a flat or pointed tip that can fit into the screw head.

Seek assistance: If possible, the man can ask someone nearby if they have a functional screwdriver that he can borrow. Alternatively, he may ask for help from someone more skilled or equipped to handle the task.

Replace the screwdriver: If it's feasible, the man can head to a nearby store to purchase a new screwdriver or obtain the required tip to fix the existing one. This would be the most effective and long-term solution.

It didn't "think" of trying to use the keys as a screwdriver, something people would try.

When I presented the same situation with a spoon or a coin instead of the home keys it was able to present a solution.

Again, as soon as we present it with a situation that is not in its database, it cannot find an answer.

originally posted by: Crowfoot

As how you wrote it wasn't really clear to me.

Maybe it got confused with my use of English, but, if that was the case, then it failed in what it was really supposed to do, understand natural language.

"To get back home he has to cross a river on a small boat that can carry only one person" then you say "One of the items he bought or two persons"

The way you wrote it is confusing, but that is not the way I wrote it, as you can see by the text you quoted.

originally posted by: ITSALIVE

In the scenario presented, you have not shared the full communication, only a summary.

Your assumption is wrong, that was the full communication.

No further communication is needed to present a situation and ask for a solution.

You’ve also failed to first provide us with your working definition of “intelligent” so we can agree or disagree if the chat bot is or is not “intelligent”.

Being able to extrapolate the solution of the first problem to the second, as I said in the opening post.

Webster's 3rd definition of “intelligent” is “guided or controlled by a computer”

What's the first definition?

a reply to: ArMaP

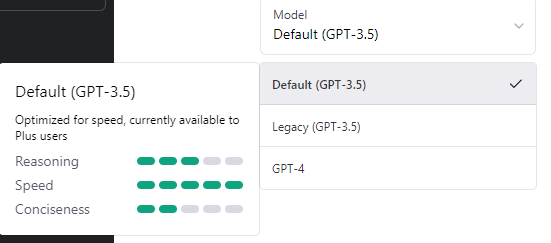

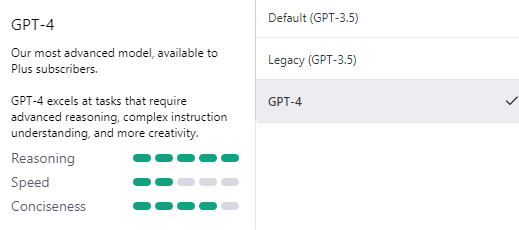

Long time, ArMaP! GPT-3, GPT-3.5 and GPT-4 are substantially different from one another (to say nothing of Bing.ai as an extension of GPTX, Claude 2, character.ai, LLaMA, OPT-175B, Galactica-120b, GLM-130B, variants like GPT-J / GPT-Neo, BLOOM, and that is not even factoring in the closed LLMs like Megatron / Turing-NLG, Chinchilla / Gato, LaMDA / PaLM-540B / PaLM-SayCan / Flan-PaLM / GLaM, and many more).

Even within a single model such as GPT-3.5 there are numerous checkpoint variants (the same is true for GPT-4 as well).

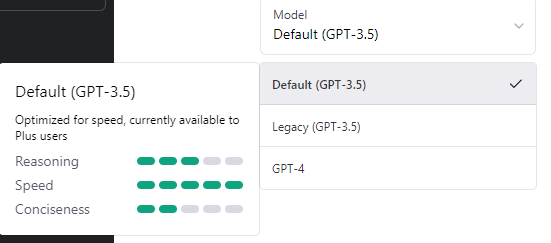

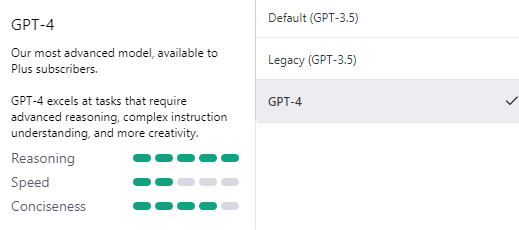

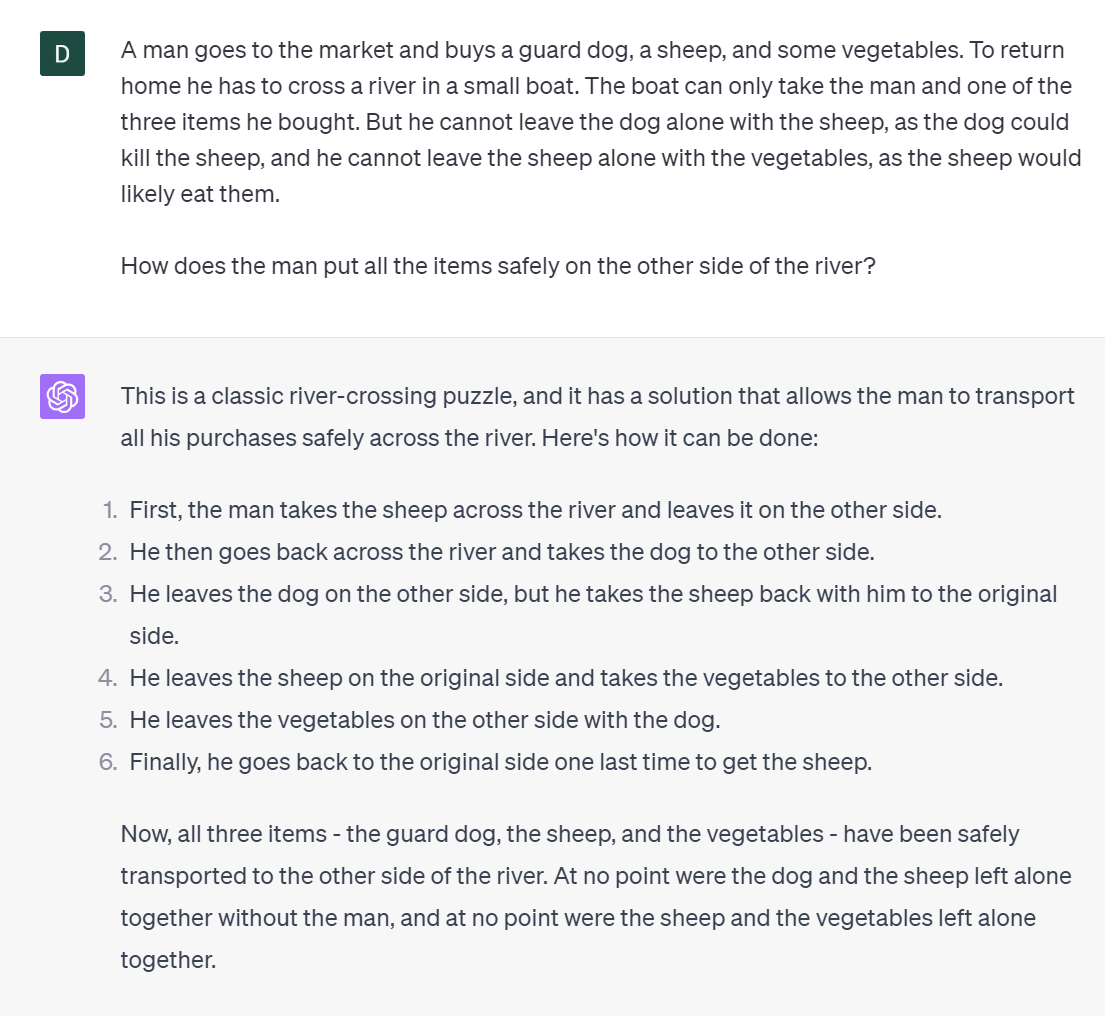

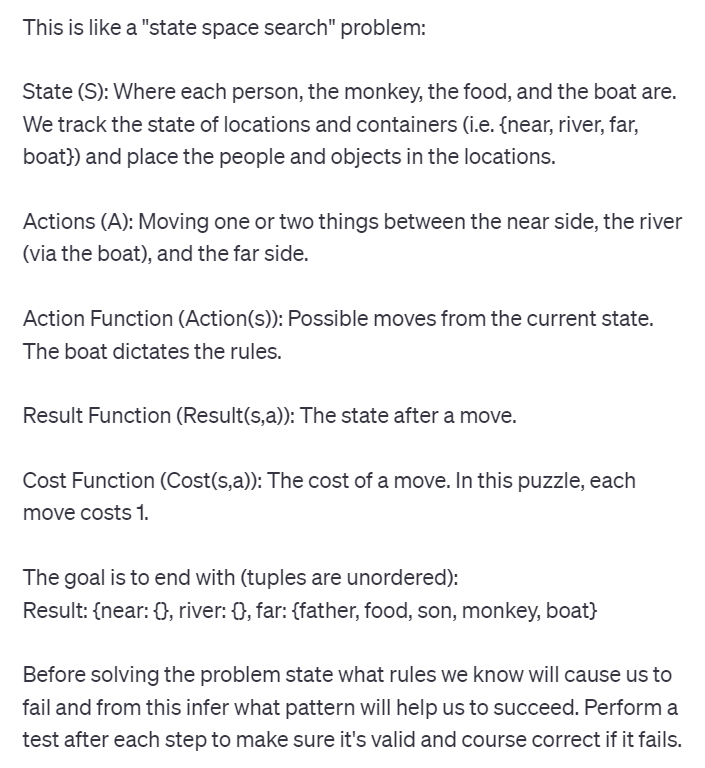

To give you a sense of how large the difference is between models with billions of parameters and models with trillions (only partly true, but good enough for the purpose of discussion), I ran your original question through the normal GPT-4 chat interface (not the API, which is noticeably better) and it was able to deduce:

The web-based GPT-4 model has an 8,192-token context window (a token is roughly 4 characters of English text, so X tokens come to about 0.75 × X words). That means you can give it about 8k tokens of new material, and inside that window the language model will "remember" what it learns from the discussion. Beyond that, it starts to forget. The OpenAI API offers a 32k-token version.
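The arithmetic behind those numbers is simple enough to sketch. This uses OpenAI's published rule of thumb (about 4 characters, or 0.75 words, per token of English text) rather than a real tokenizer, and `trim_history` is a hypothetical illustration of one way a chat front end could keep a conversation inside a fixed window by dropping the oldest messages. OpenAI doesn't document exactly how ChatGPT truncates history, so treat this as an assumption.

```python
import math

CHARS_PER_TOKEN = 4      # rough English-text heuristic, not a real tokenizer
WORDS_PER_TOKEN = 0.75   # rough heuristic: 100 tokens is about 75 words


def estimate_tokens(text: str) -> int:
    """Approximate the token count from the character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def tokens_to_words(tokens: int) -> float:
    """Approximate how many English words fit in a token budget."""
    return tokens * WORDS_PER_TOKEN


def trim_history(messages: list[str], window: int = 8192) -> list[str]:
    """Keep the most recent messages whose estimated total fits in the window.

    Hypothetical policy: the oldest messages are dropped first, which is why a
    chat model appears to 'forget' the start of a long conversation.
    """
    kept: list[str] = []
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > window:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order
```

For example, `tokens_to_words(8192)` returns `6144.0`, so an 8k window holds roughly 6,000 words, and a 32k window roughly 24,500.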

I have tested a few of the expanded or newer language models, like GPT-4's API and OPT-175B (not publicly available), with test material a professor friend uses in the 400-level computer science class he teaches at USC.

On the midterm, GPT-4 scored 70%. We also fed the course project instructions to GPT-4 to see if it could make a simple Valve-esque, Portal-like video game. Students are given about a month to implement the actor/component code, the correct camera matrices, etc. GPT-4 was able to fill in all the missing functions from the C++ declarations / stub code with only one small error. When we gave it the final exam, to quote my friend, "I think ChatGPT did better on my Pro C++ final than like half the class, lol".

By the end of our experiment he said, "I'm not even sure what I should be teaching if ... GPT 4 is already this good at figuring stuff out."

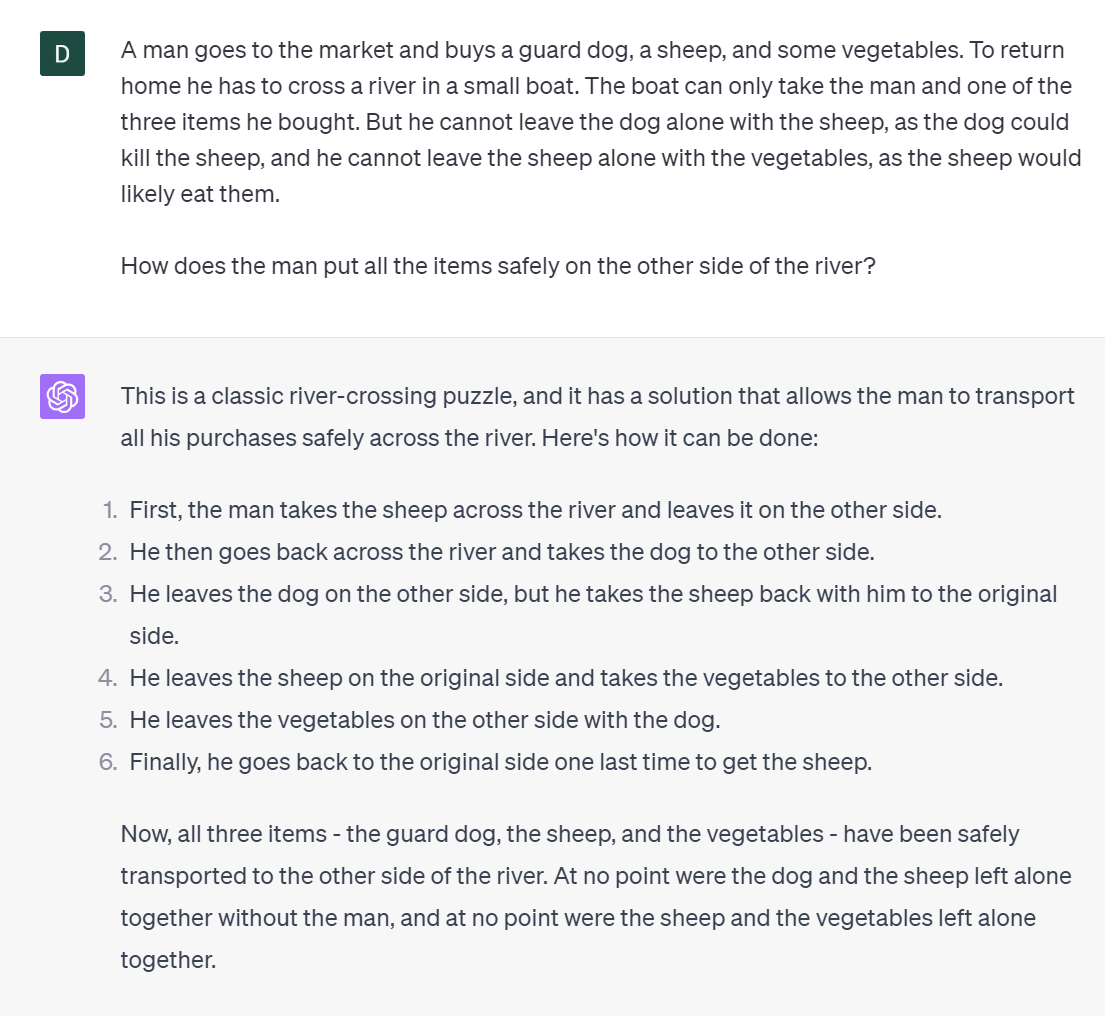

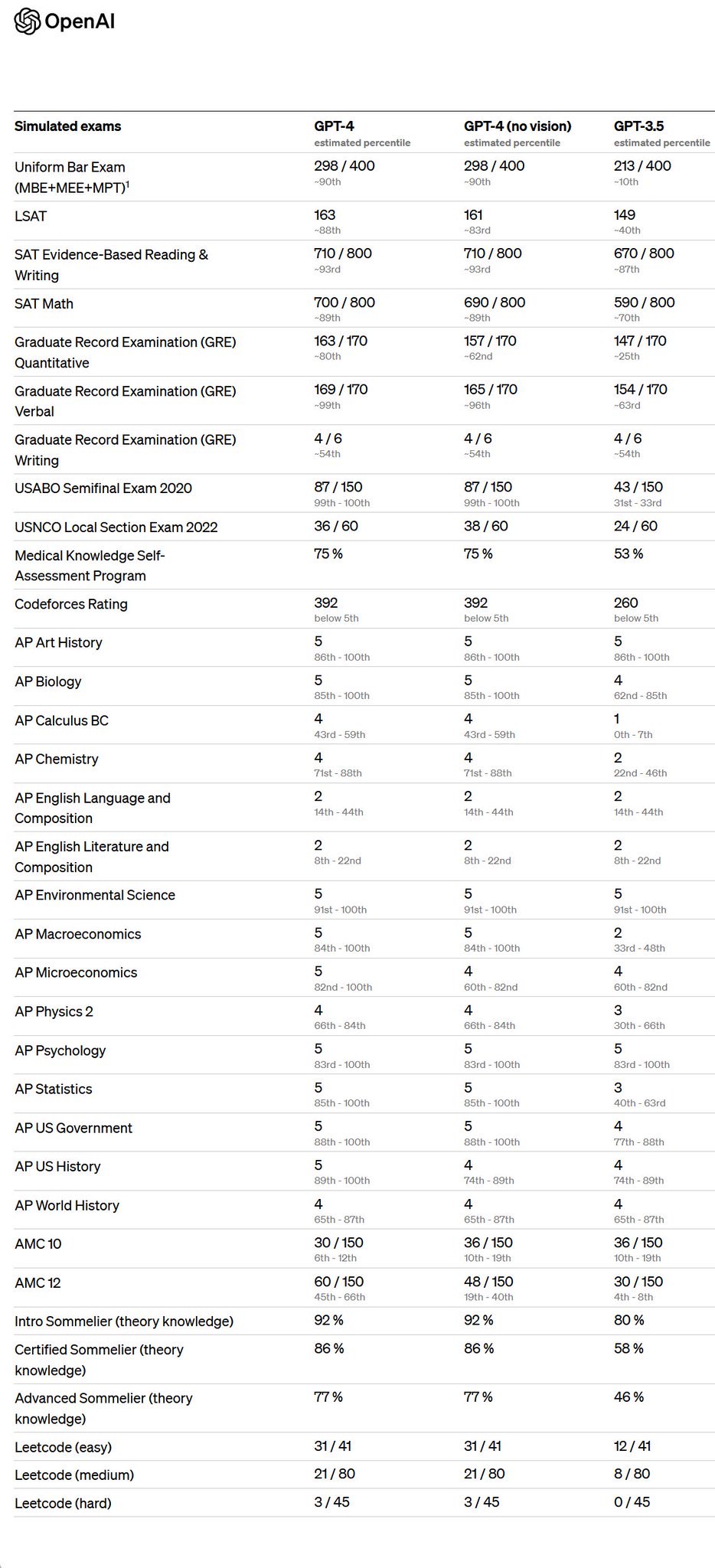

Our results closely mirror other similar tests:

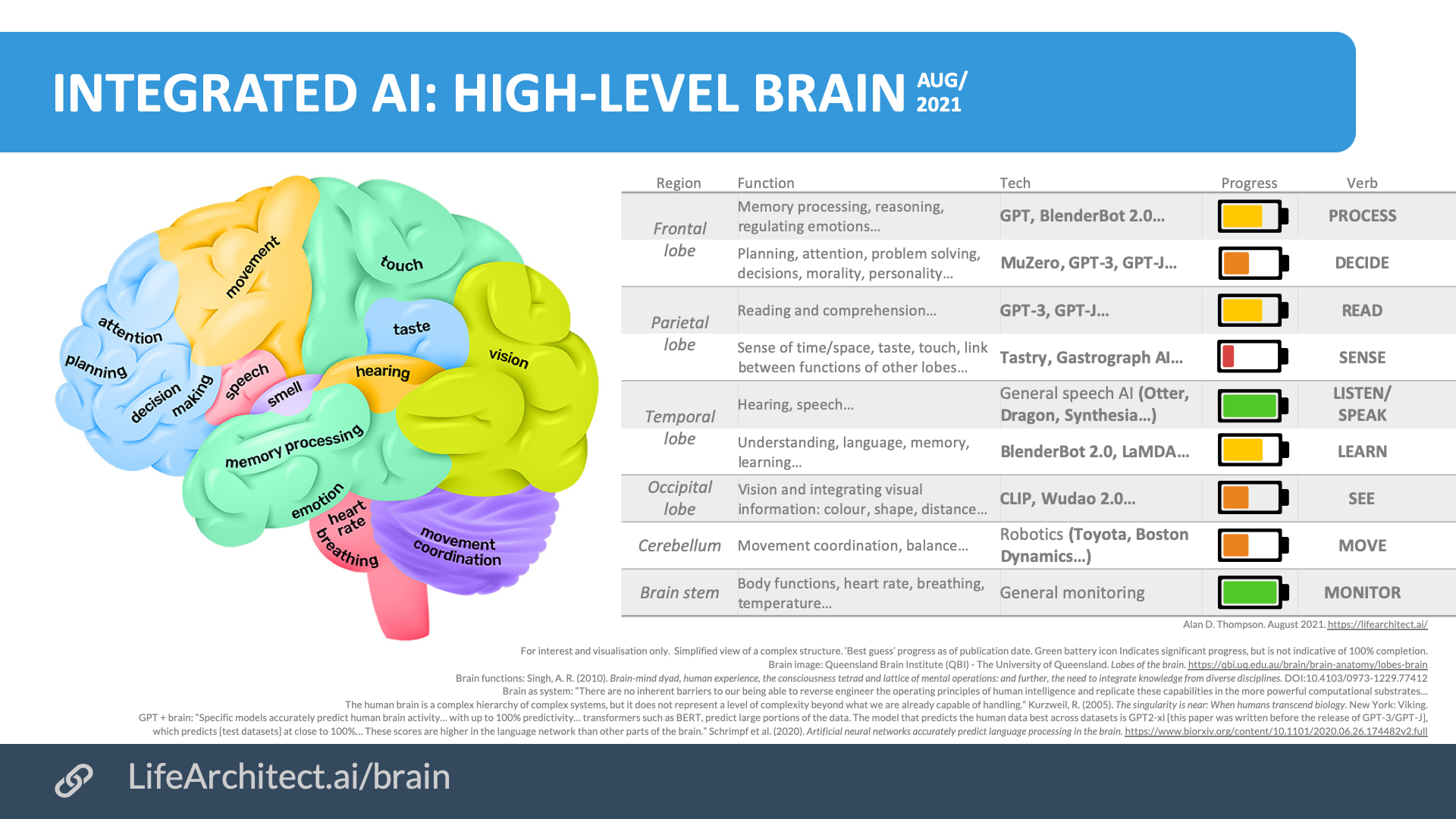

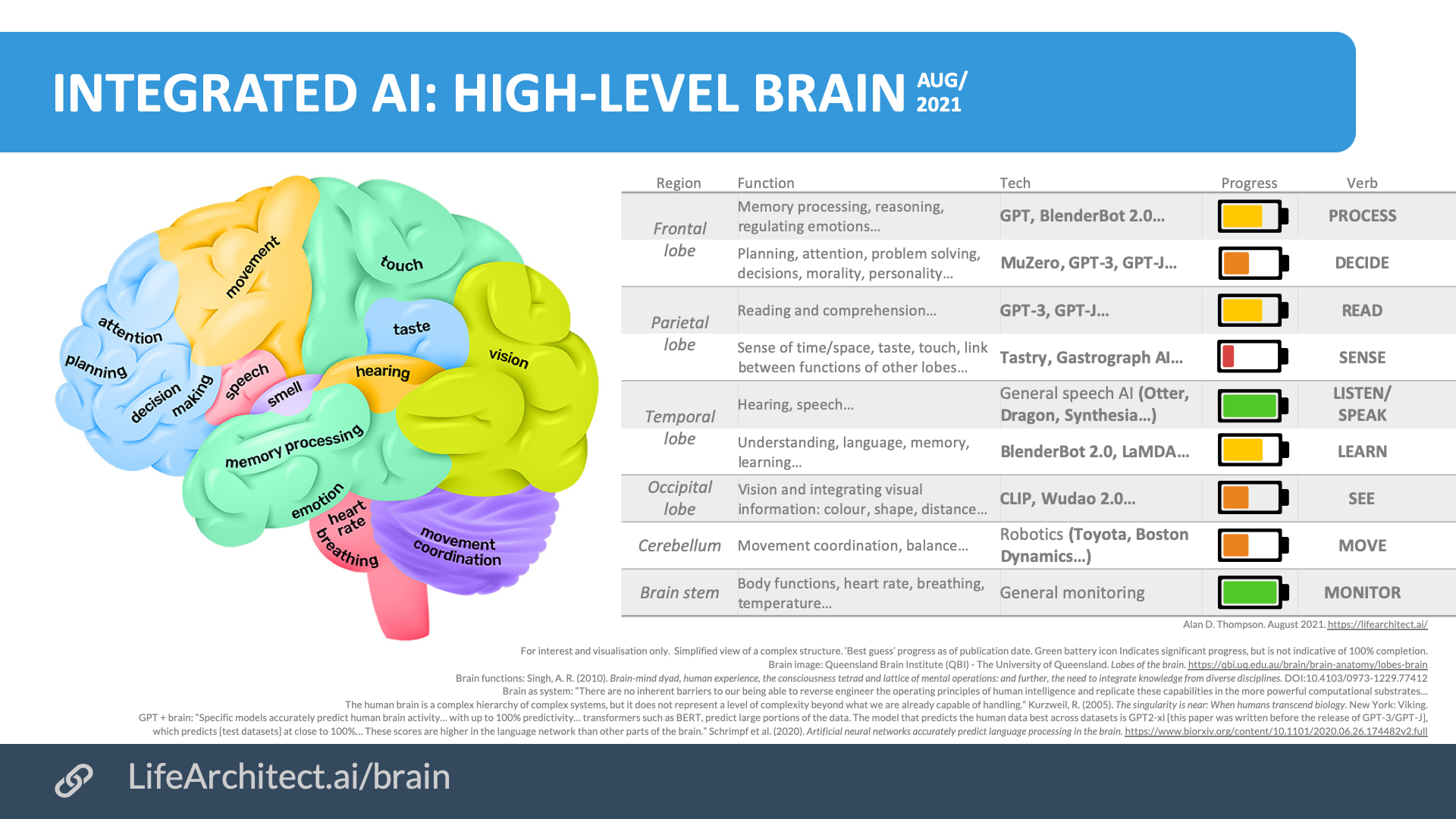

In a sense, this can be boiled down to IQ. This is the best simplified overview I know of the various "IQ" levels of the models currently available:

lifearchitect.ai...

The biggest area where GPT-4 fails is mathematics and logic (as you pointed out with your example), but even then it's not terrible.

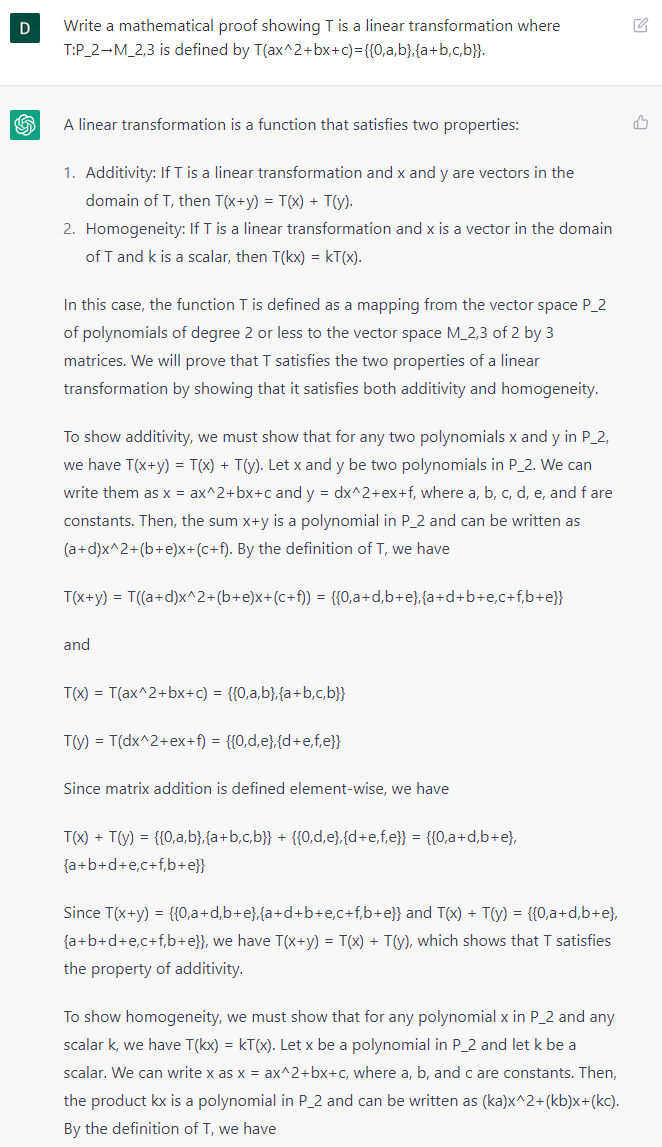

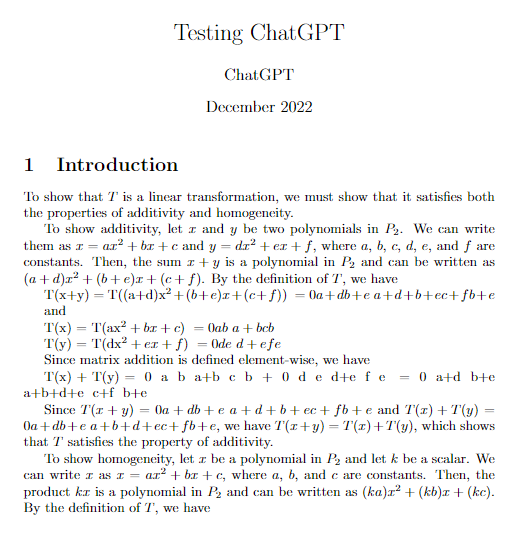

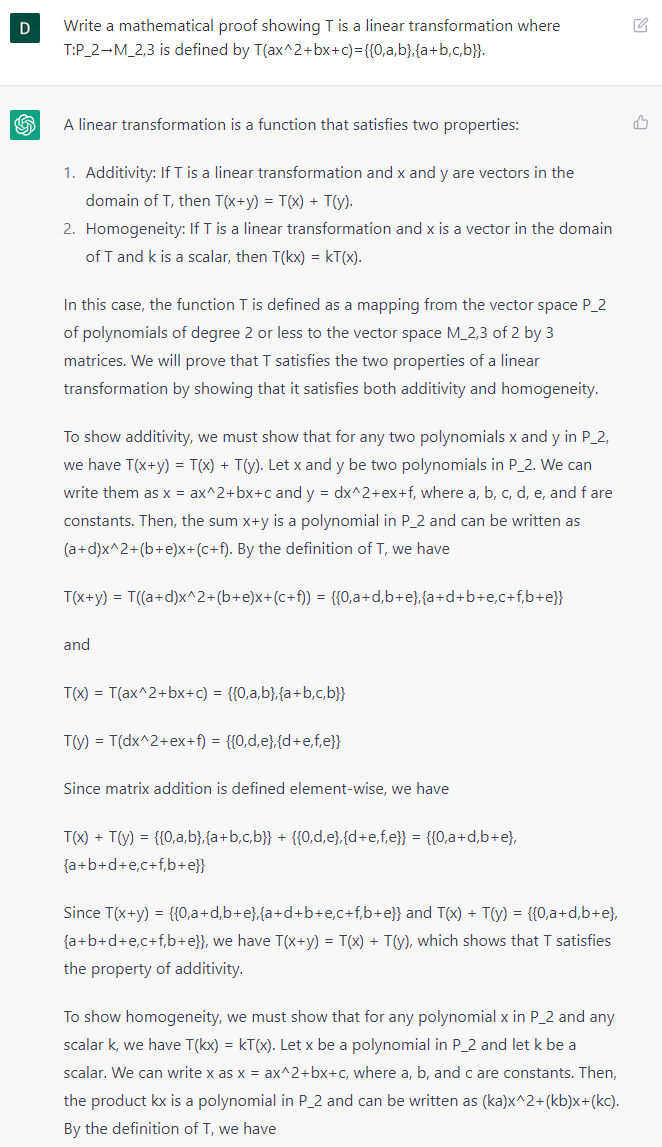

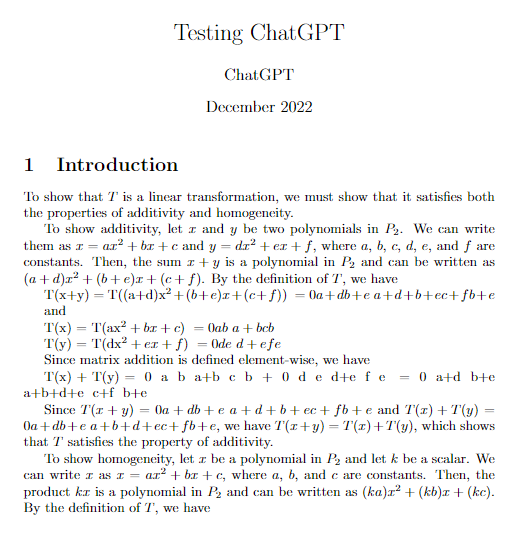

It can do TeX ...

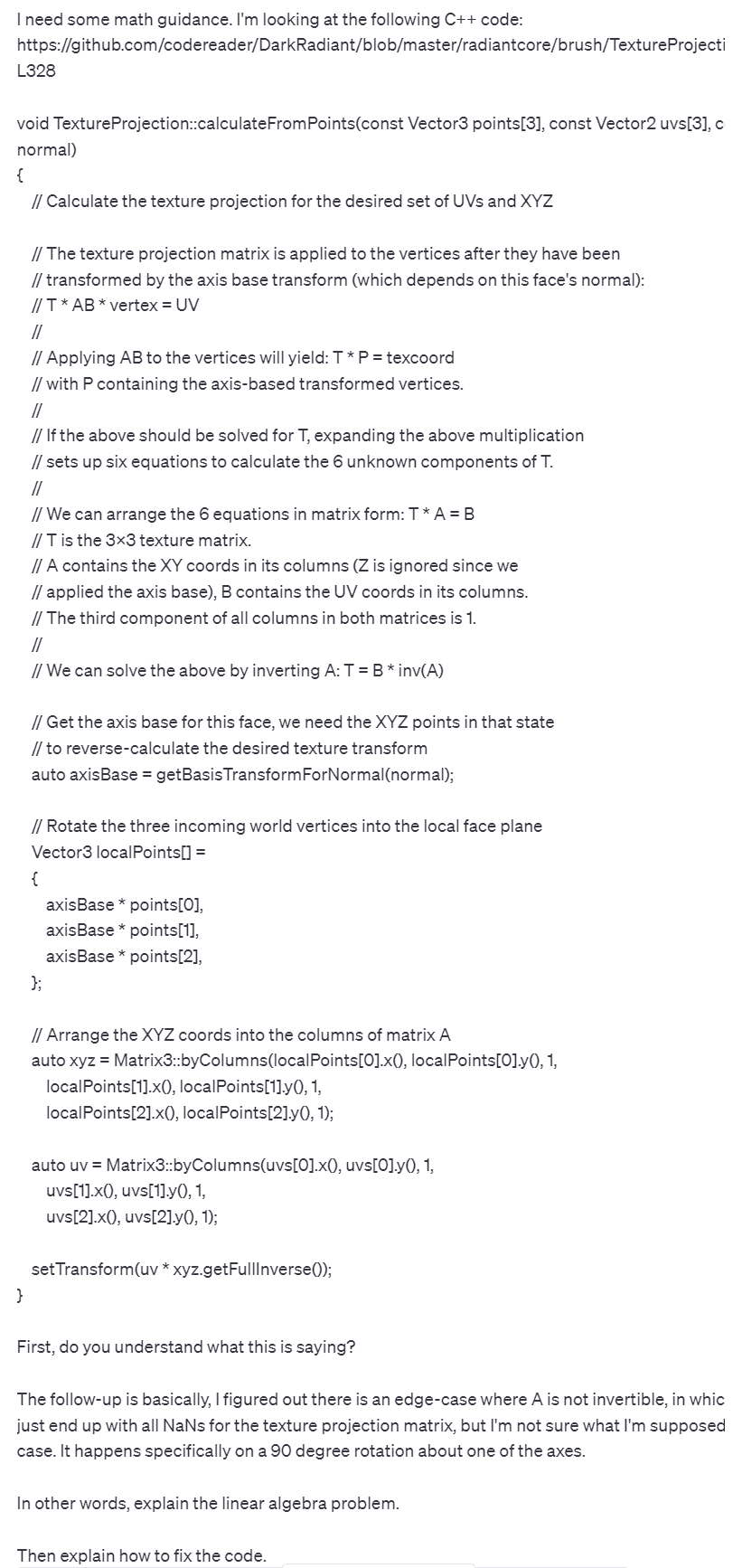

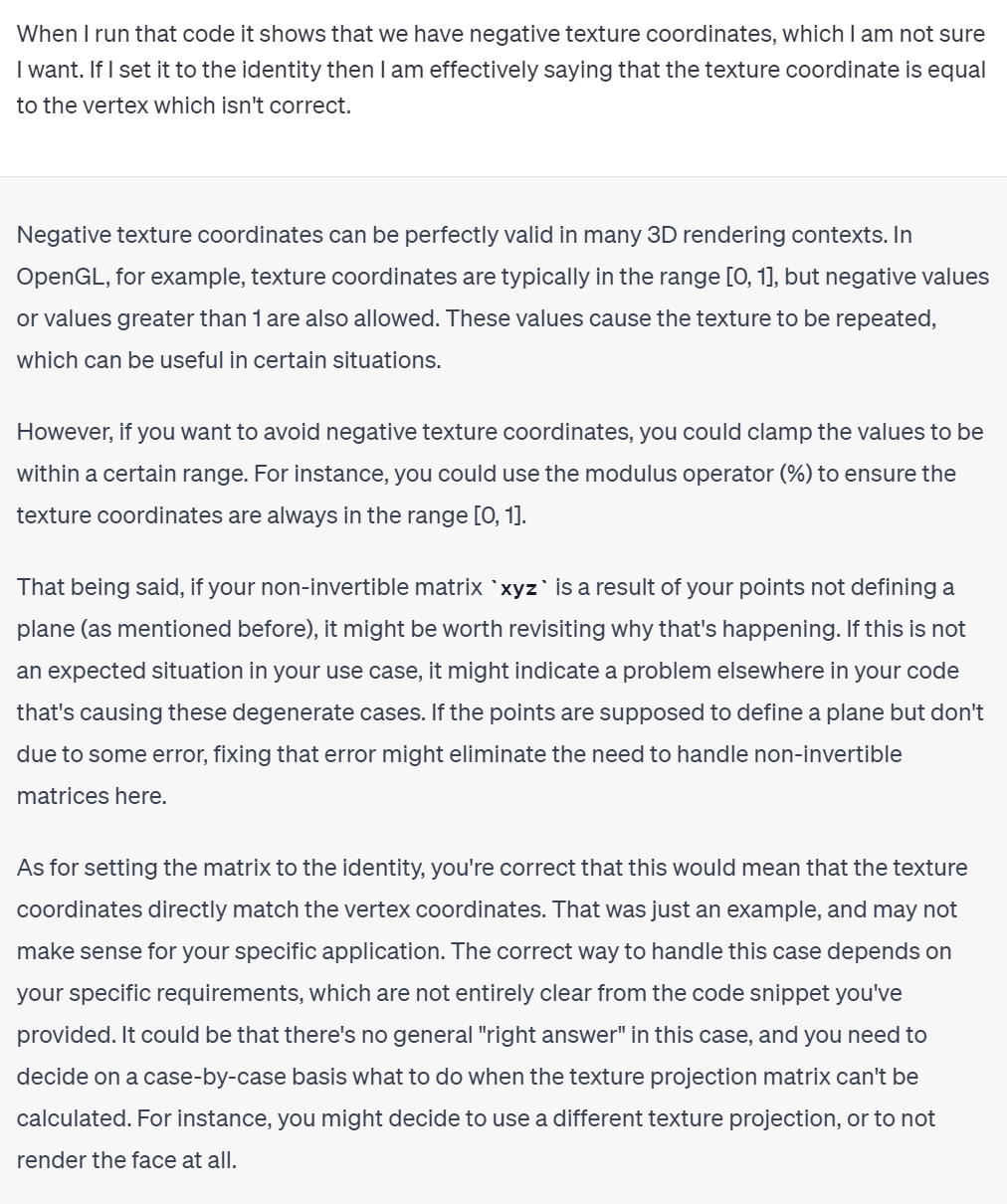

And at this point it even understands complex linear algebra coding problems and how to debug texture projection issues. For example,

Question:

Answer:

This can then be continued as a dialogue. For example, you can reply with something like, "When I run that code it shows that we have negative texture coordinates. If I set it to the identity then I am effectively saying that the texture coordinate is equal to the vertex, which isn't correct." GPT-4, or whatever LLM you prefer, will then keep refining its answer.
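For readers curious what that texture-projection bug might look like, here is a minimal, hypothetical sketch of standard projective texture mapping (not the code from the screenshots, which didn't survive). A world-space vertex is transformed by the projector's view and projection matrices, divided by w, and then remapped from [-1, 1] to [0, 1] by a bias matrix. Skipping the bias step gives coordinates that can be negative, and with identity matrices the texture coordinate simply equals the vertex, which is the symptom described above. The function names and the 90-degree OpenGL-style `perspective` matrix are my own assumptions.

```python
Matrix = list[list[float]]

IDENTITY: Matrix = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]

# Bias matrix: maps normalized device coordinates in [-1, 1] to texture space [0, 1].
BIAS: Matrix = [
    [0.5, 0.0, 0.0, 0.5],
    [0.0, 0.5, 0.0, 0.5],
    [0.0, 0.0, 0.5, 0.5],
    [0.0, 0.0, 0.0, 1.0],
]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    """4x4 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform(m: Matrix, v: list[float]) -> list[float]:
    """Apply a 4x4 matrix to a homogeneous column vector."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]


def perspective(near: float = 0.1, far: float = 100.0) -> Matrix:
    """OpenGL-style perspective matrix, 90-degree field of view, square aspect."""
    f = 1.0  # 1 / tan(fov / 2) with fov = 90 degrees
    return [
        [f, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def projective_uv(world_pos, projector_view: Matrix, projector_proj: Matrix,
                  use_bias: bool = True) -> tuple[float, float]:
    """Texture coordinates of a world-space point as seen from the projector."""
    m = mat_mul(projector_proj, projector_view)
    if use_bias:
        m = mat_mul(BIAS, m)  # remap [-1, 1] to [0, 1]
    x, y, _z, w = transform(m, [*world_pos, 1.0])
    return (x / w, y / w)  # perspective divide
```

With `perspective()` and an identity view, the point `(-1.0, 0.5, -2.0)` maps to `(0.25, 0.625)` with the bias applied and to `(-0.5, 0.25)` without it.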

The technology is evolving very fast.

This is what the whole space of AI currently looks like as compared to the human brain:

While it's absolutely true these models aren't 'intelligent' in the way humans are, they are becoming much more sophisticated, more capable, and continue to blur the lines of what anyone thought AI could do.

I highly, highly recommend checking out Harpa.ai for anyone interested in all this.

It's nice to be back after all this time!

Hope you are well ArMaP, great topic!


edit on 2023-7-20 by Xtraeme because: (no reason given)

originally posted by: ASrubWhoDiedAgain

There are a couple of researchers looking at training a transformer using symbolic regression. If that comes to fruition, then we'd be a lot closer to 'intelligence' than what we currently have, for sure, but that's a very, very hard problem to solve.

What are your thoughts about outcome supervision versus process supervision? I have been fascinated by the work on PRM800K (OpenAI's process supervision dataset: github.com...). It seems a lot of the work integrating symbolic logic engines like WolframAlpha is getting us one step closer (granted, that is not the same as more deductive systems like Coq, TLA+ or Prolog).
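To make that distinction concrete, here is a toy sketch with hypothetical names and made-up scores (not OpenAI's training code). An outcome reward model judges only the final answer, while a process reward model scores every reasoning step. As I understand the paper released with PRM800K ("Let's Verify Step by Step"), a whole solution is scored as the probability that every step is correct, computed as the product of the per-step probabilities; the sketch uses that rule to pick the best of several candidate solutions.

```python
import math
from collections.abc import Callable
from dataclasses import dataclass


@dataclass
class Solution:
    steps: list[str]
    step_probs: list[float]    # hypothetical per-step scores from a process reward model
    final_answer_prob: float   # hypothetical score from an outcome reward model


def outcome_score(sol: Solution) -> float:
    """Outcome supervision: only the final answer is judged."""
    return sol.final_answer_prob


def process_score(sol: Solution) -> float:
    """Process supervision: probability that every step is correct (product of step scores)."""
    return math.prod(sol.step_probs)


def best_of_n(candidates: list[Solution], score: Callable[[Solution], float]) -> Solution:
    """Pick the highest-scoring candidate, as in best-of-N reranking."""
    return max(candidates, key=score)


# Made-up examples: one solution reaches a plausible answer through a bad step.
lucky = Solution(["unjustified leap", "right-looking answer"], [0.3, 0.9], 0.9)
careful = Solution(["valid step", "valid step"], [0.9, 0.9], 0.8)
```

Here the outcome scorer prefers `lucky` (0.9 against 0.8), while the process scorer prefers `careful` (0.81 against 0.27), which is the kind of flawed-reasoning case process supervision is meant to catch.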

a reply to: ArMaP

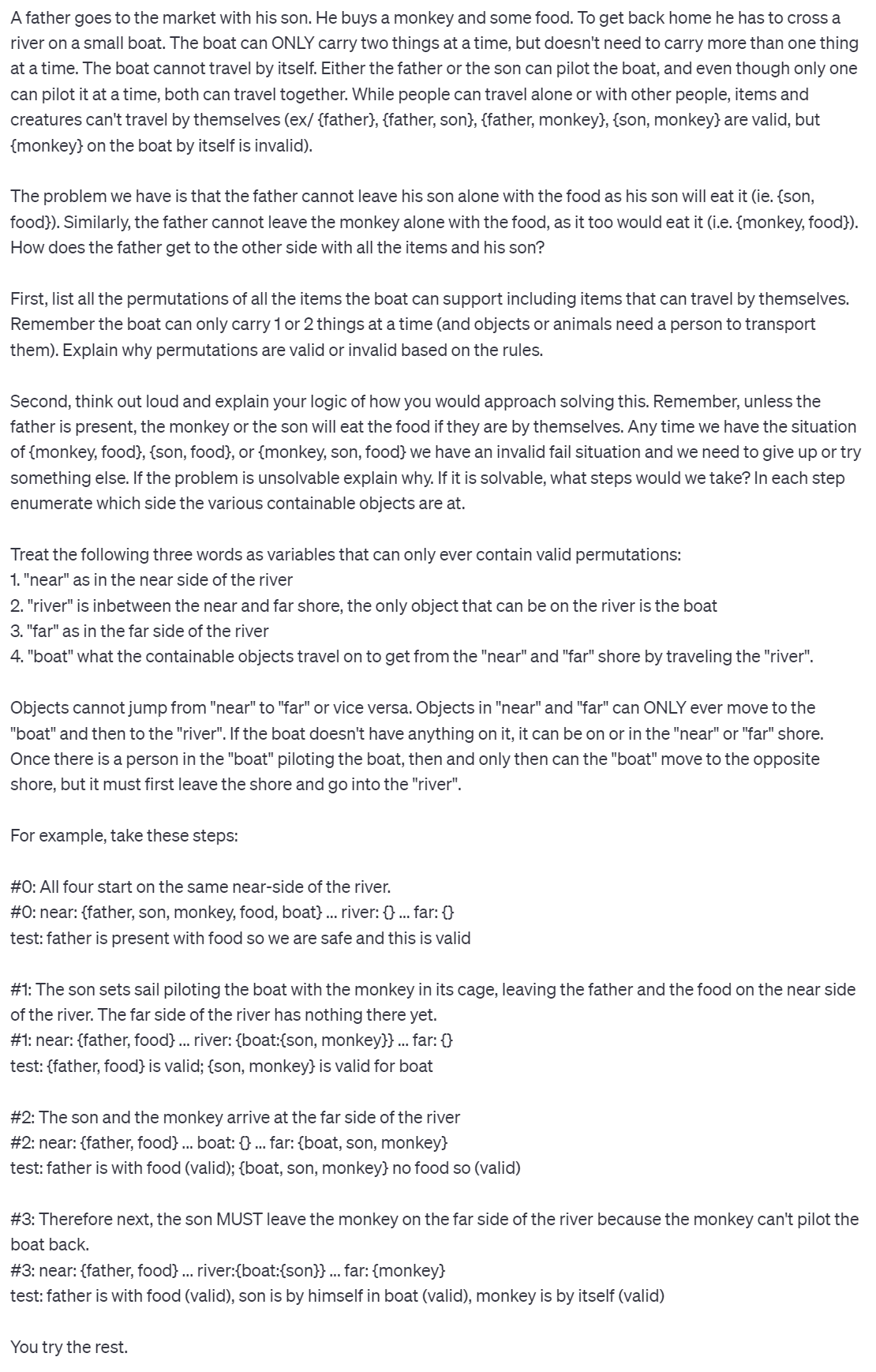

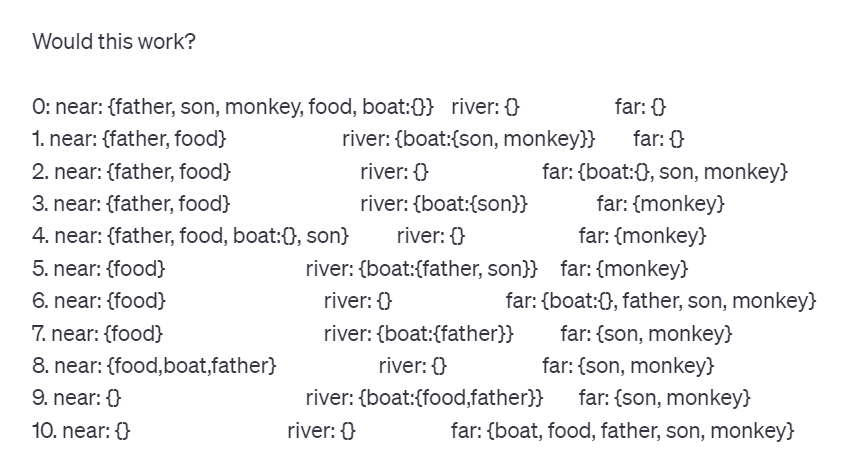

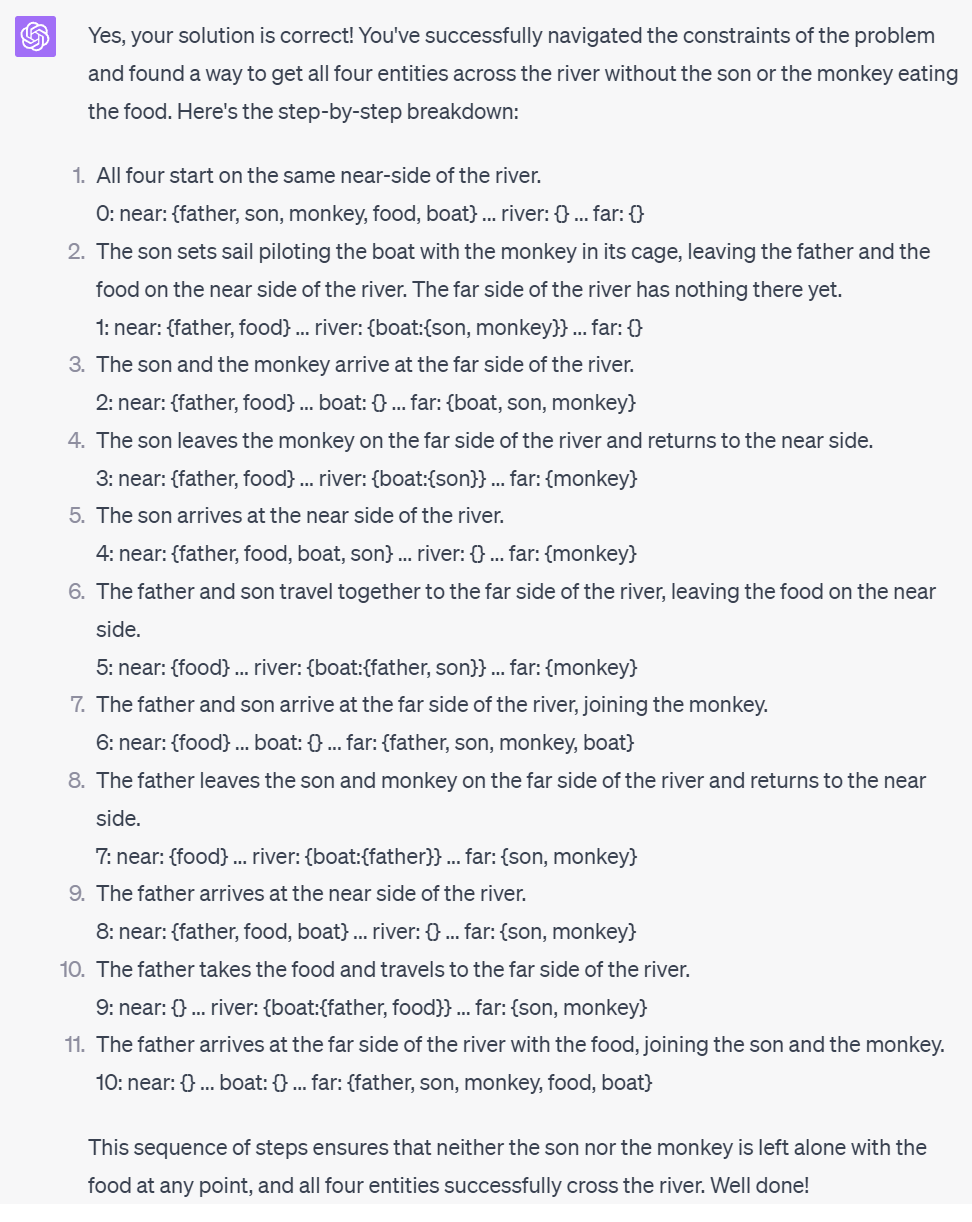

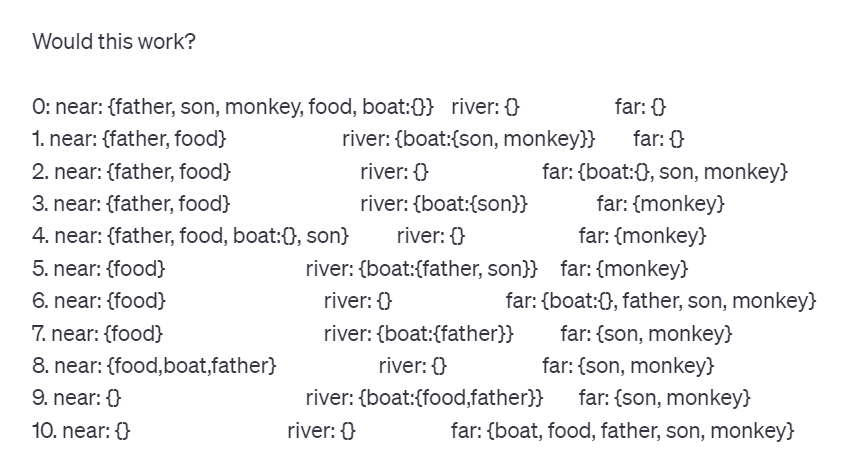

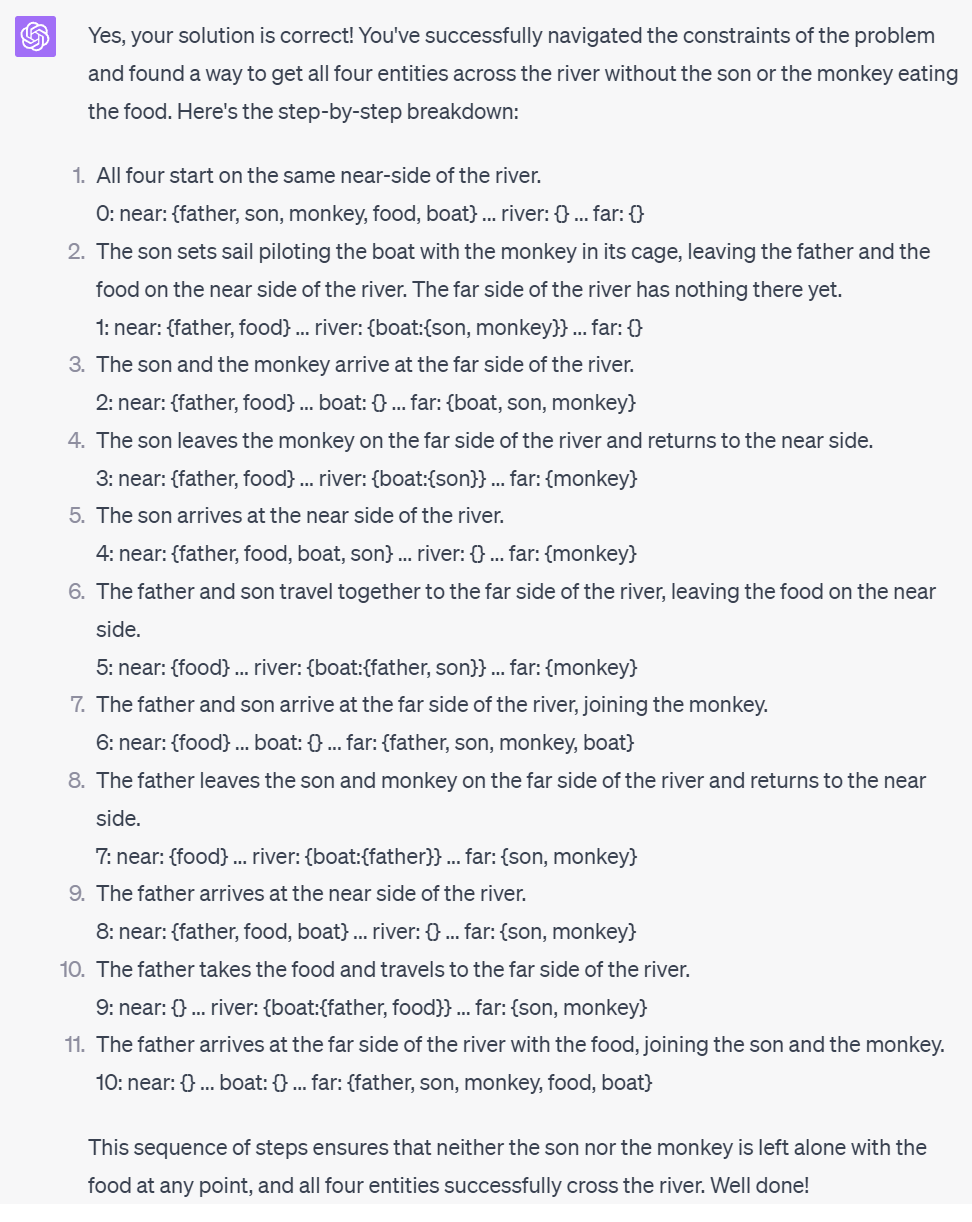

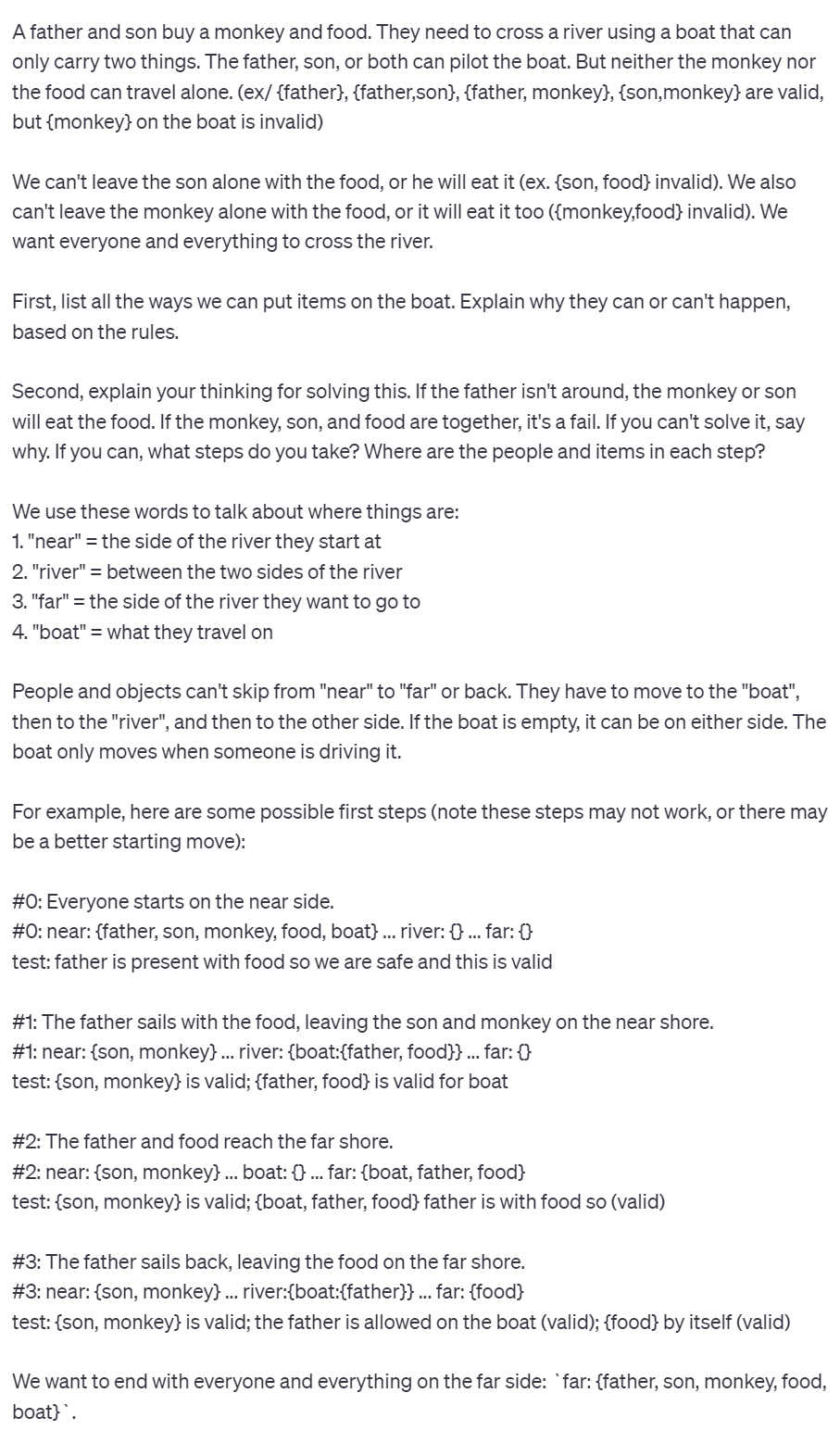

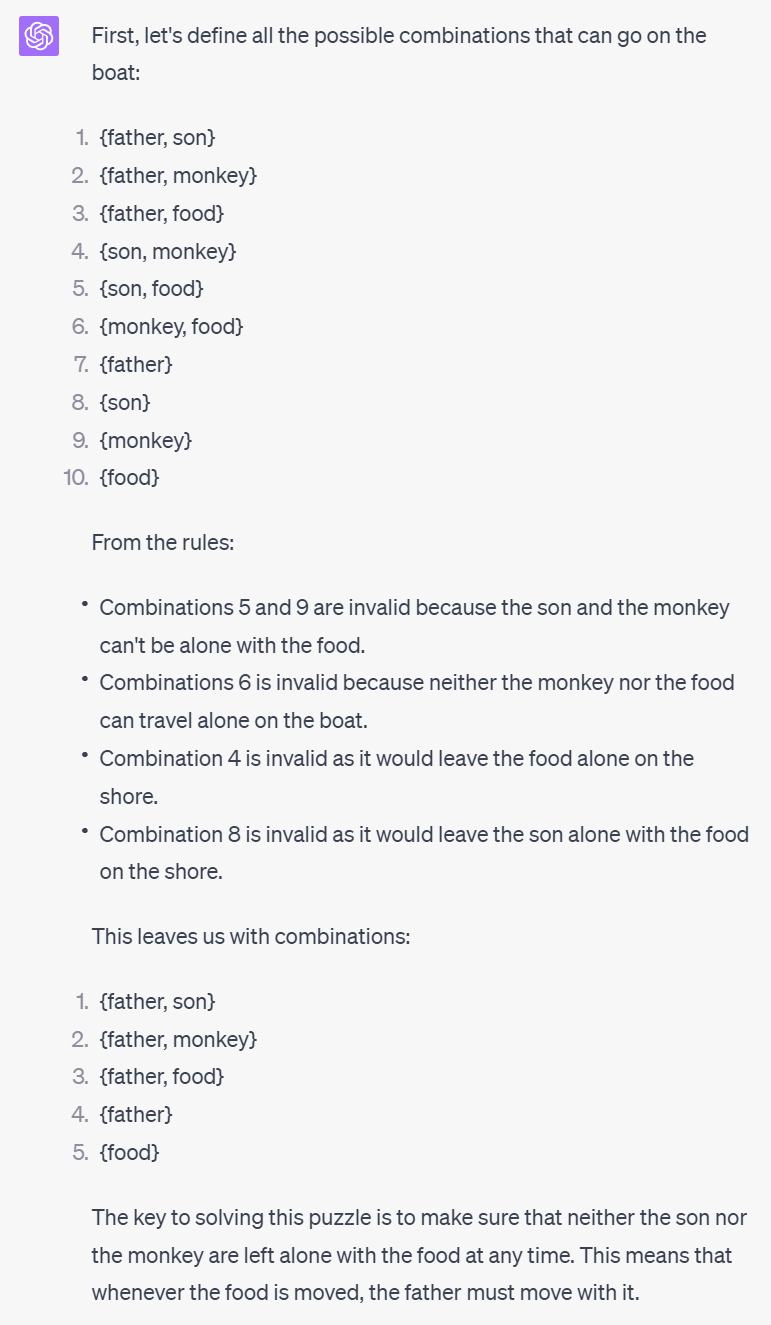

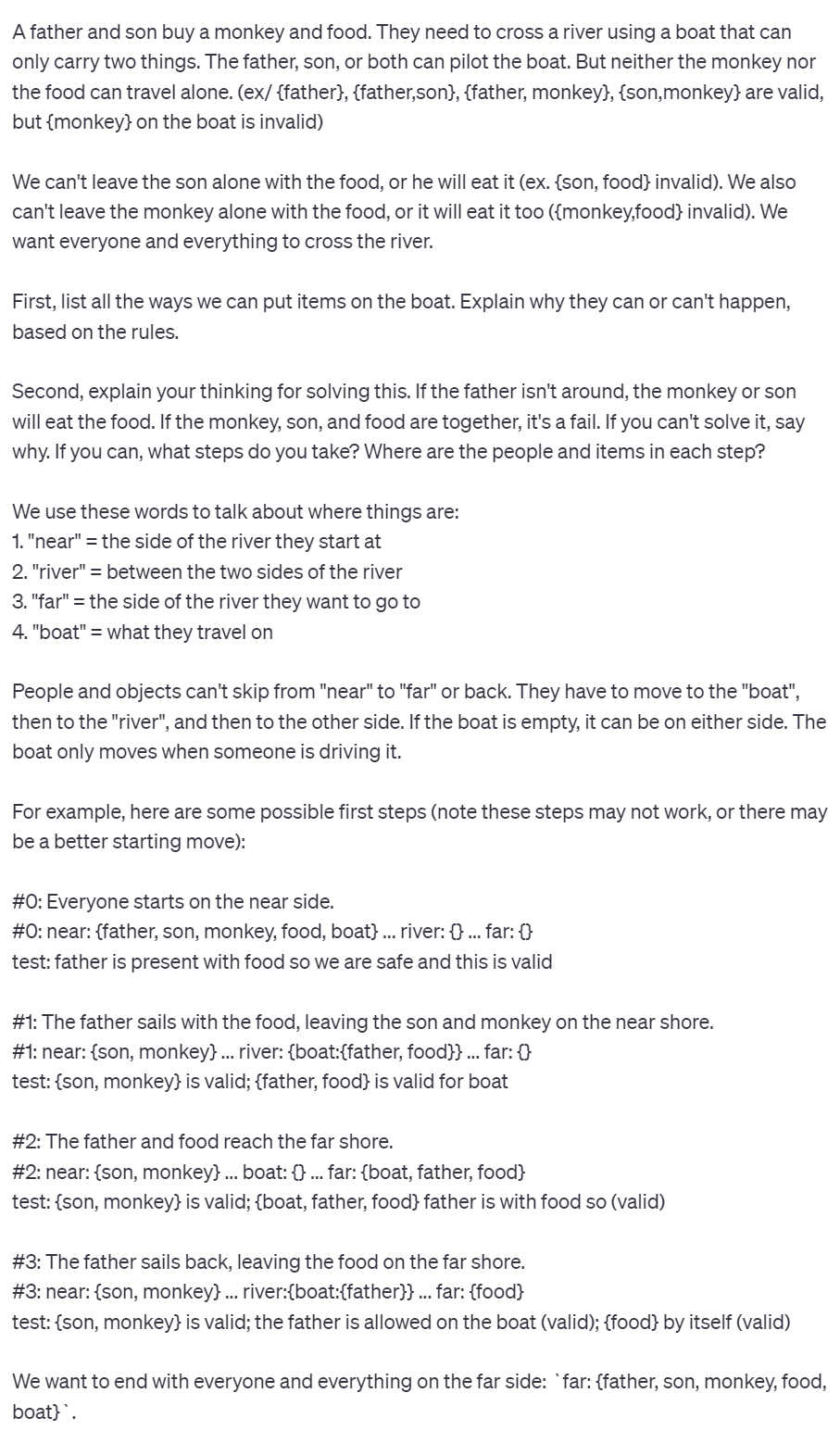

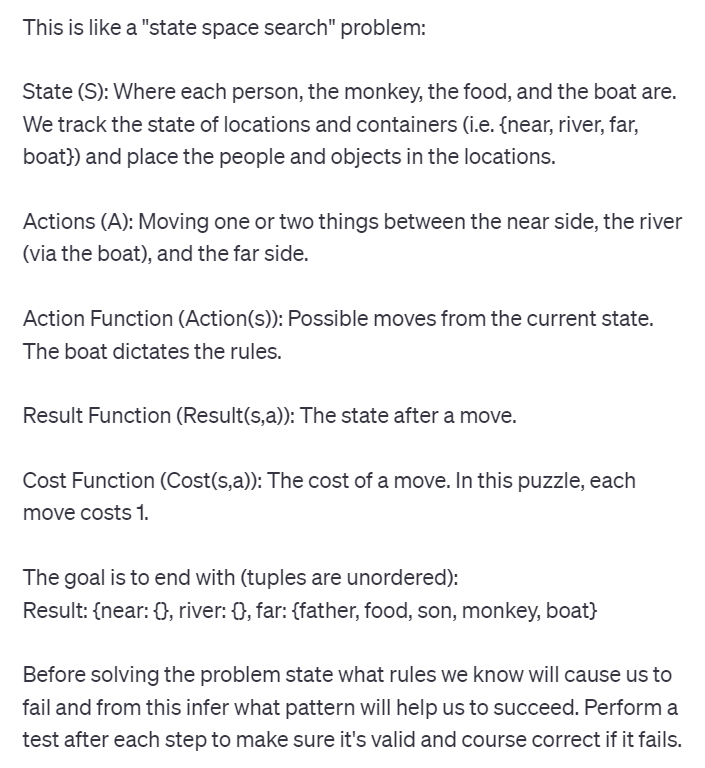

Whoops, I didn't include the second test. Not a bad thing, though, because now I can explain it a bit more.

Notice how I use a *lot* of almost design-spec wording so the GPT-4 model is able to figure out the requirements ("prompt engineering" really is an apt phrase, because you have to learn the quirks of each individual model to get it to do what you want). People aren't that different in the design phase of a project, though, since you have to establish a common understanding and vocabulary. So I'd personally go easy on LLMs for that one:

GPT-4 then started going through permutations. Interestingly, in another test I did with GPT-4 through the API, it understood that what it was doing was a "state space search", and the API output said explicitly that it was using that as its methodology.

and the rest:

It tries different combinations, but gives up. It is able to validate a sequence, but it isn't able to figure out the right pattern.
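For comparison, here is what an explicit state space search over the boat riddle looks like. It is a minimal breadth-first search sketch, not what GPT-4 did internally. Assumptions: the boat carries one person, one person plus one item, or two persons; the food can't be left with the son or the monkey without the man, on either bank or in the boat; and by default only the man rows (the hypothetical `rowers` argument lets the son row too).

```python
from collections import deque
from itertools import combinations

PERSONS = frozenset({"man", "son"})
ITEMS = frozenset({"monkey", "food"})
EVERYONE = PERSONS | ITEMS


def is_safe(group: frozenset) -> bool:
    """Without the man present, the food may not share a place with the son or the monkey."""
    if "man" in group or "food" not in group:
        return True
    return not (group & {"son", "monkey"})


def boat_loads(bank: frozenset, rowers: frozenset) -> list[frozenset]:
    """Loads allowed by the riddle: one person, one person plus one item, or two persons."""
    persons = sorted(bank & PERSONS)
    items = sorted(bank & ITEMS)
    loads = [frozenset({p}) for p in persons]
    loads += [frozenset({p, i}) for p in persons for i in items]
    loads += [frozenset(pair) for pair in combinations(persons, 2)]
    # Someone able to row must be aboard, and the boat itself must be a safe group.
    return [load for load in loads if load & rowers and is_safe(load)]


def solve(rowers: frozenset = frozenset({"man"})):
    """BFS over (who is on the left bank, which side the boat is on).

    Returns the shortest list of crossings, each as a sorted list of passengers,
    or None if the goal is unreachable.
    """
    start, goal = (EVERYONE, "left"), (frozenset(), "right")
    parents = {start: None}  # state -> (previous state, load moved)
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state == goal:
            break
        left, side = state
        here = left if side == "left" else EVERYONE - left
        for load in boat_loads(here, rowers):
            new_left = left - load if side == "left" else left | load
            if not (is_safe(new_left) and is_safe(EVERYONE - new_left)):
                continue  # someone gets eaten on one of the banks
            nxt = (new_left, "right" if side == "left" else "left")
            if nxt not in parents:
                parents[nxt] = (state, load)
                queue.append(nxt)
    if goal not in parents:
        return None
    moves = []
    state = goal
    while parents[state] is not None:  # walk back from the goal to the start
        state, load = parents[state]
        moves.append(sorted(load))
    return moves[::-1]
```

With only the man rowing, `solve()` finds the classic 7-crossing answer (food first, the food comes back once, food last). If the son may also row, `solve(frozenset({"man", "son"}))` finds a 5-crossing one.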

So then I tried asking if a given sequence WAS a solution.

And it was able to walk through the logic:

GPT-4 is quite something, even if it is still a long way from being a logic monster.
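Checking a proposed answer is much easier than finding one, which matches what happened in that test: GPT-4 could validate a given sequence but couldn't discover it. A minimal validator sketch under the same assumed rules (the man is the only rower unless told otherwise; `check_solution` is a hypothetical name) replays each crossing and reports the first rule that breaks:

```python
PERSONS = {"man", "son"}
ITEMS = {"monkey", "food"}
EVERYONE = PERSONS | ITEMS


def is_safe(group: set) -> bool:
    """Without the man present, the food may not share a place with the son or the monkey."""
    return "man" in group or "food" not in group or not (group & {"son", "monkey"})


def check_solution(moves: list[list[str]],
                   rowers: frozenset = frozenset({"man"})) -> tuple[bool, str]:
    """Replay a proposed sequence of crossings and report the first rule it breaks."""
    banks = {"left": set(EVERYONE), "right": set()}
    side = "left"  # where the boat currently is
    for step, passengers in enumerate(moves, 1):
        load = set(passengers)
        persons, items = load & PERSONS, load & ITEMS
        # Capacity: one person (optionally with one item) or two persons.
        if not ((len(persons) == 1 and len(items) <= 1) or (len(persons) == 2 and not items)):
            return False, f"step {step}: boat cannot carry {sorted(load)}"
        if not load <= banks[side]:
            return False, f"step {step}: {sorted(load - banks[side])} not on the {side} bank"
        if not load & rowers:
            return False, f"step {step}: nobody aboard can row"
        if not is_safe(load):
            return False, f"step {step}: food unattended in the boat"
        other = "right" if side == "left" else "left"
        banks[side] -= load
        banks[other] |= load
        for name, bank in banks.items():
            if not is_safe(bank):
                return False, f"step {step}: unsafe group left on the {name} bank"
        side = other
    if banks["right"] != EVERYONE:
        return False, "not everyone reached the far bank"
    return True, "valid solution"
```

For example, the sequence Crowfoot described (food, back, son, food back, monkey, back, food) checks out as valid, while starting with the man and the son fails at step 1 because the monkey is left alone with the food.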


edit on 2023-7-20 by Xtraeme because: (no reason given)

a reply to: ArMaP

One of the scientists who work on these AI models told me they have an entire system to measure the models' intelligence level, and it is measured against humans at different ages.

He said reaching this benchmark from the last one was expected to take 4 years, and in reality it only took 18 months.

The intelligence levels are increasing rapidly.

As for your riddles, they're actually far more difficult than you give them credit for, especially for a non-physical entity.


a reply to: ArMaP

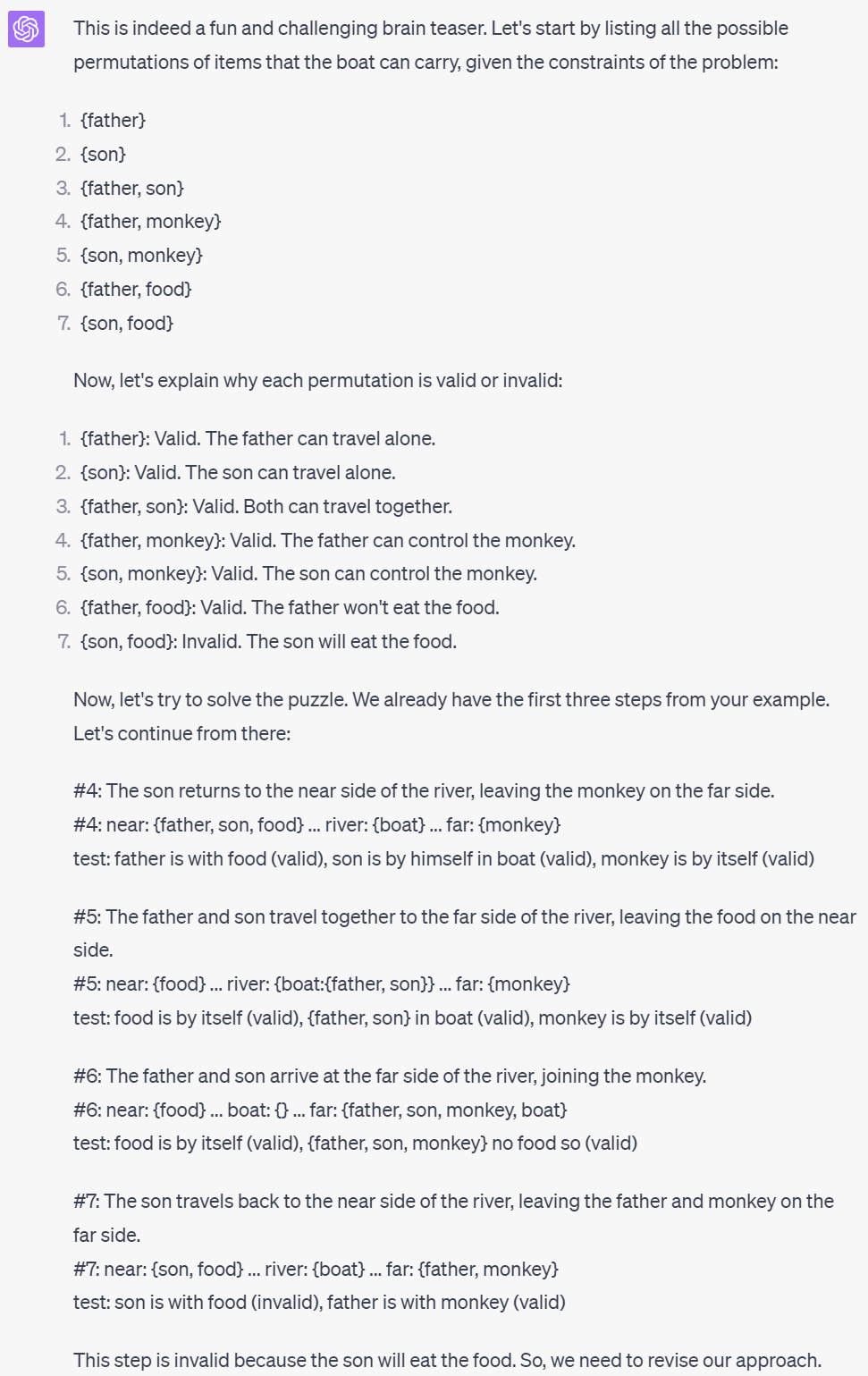

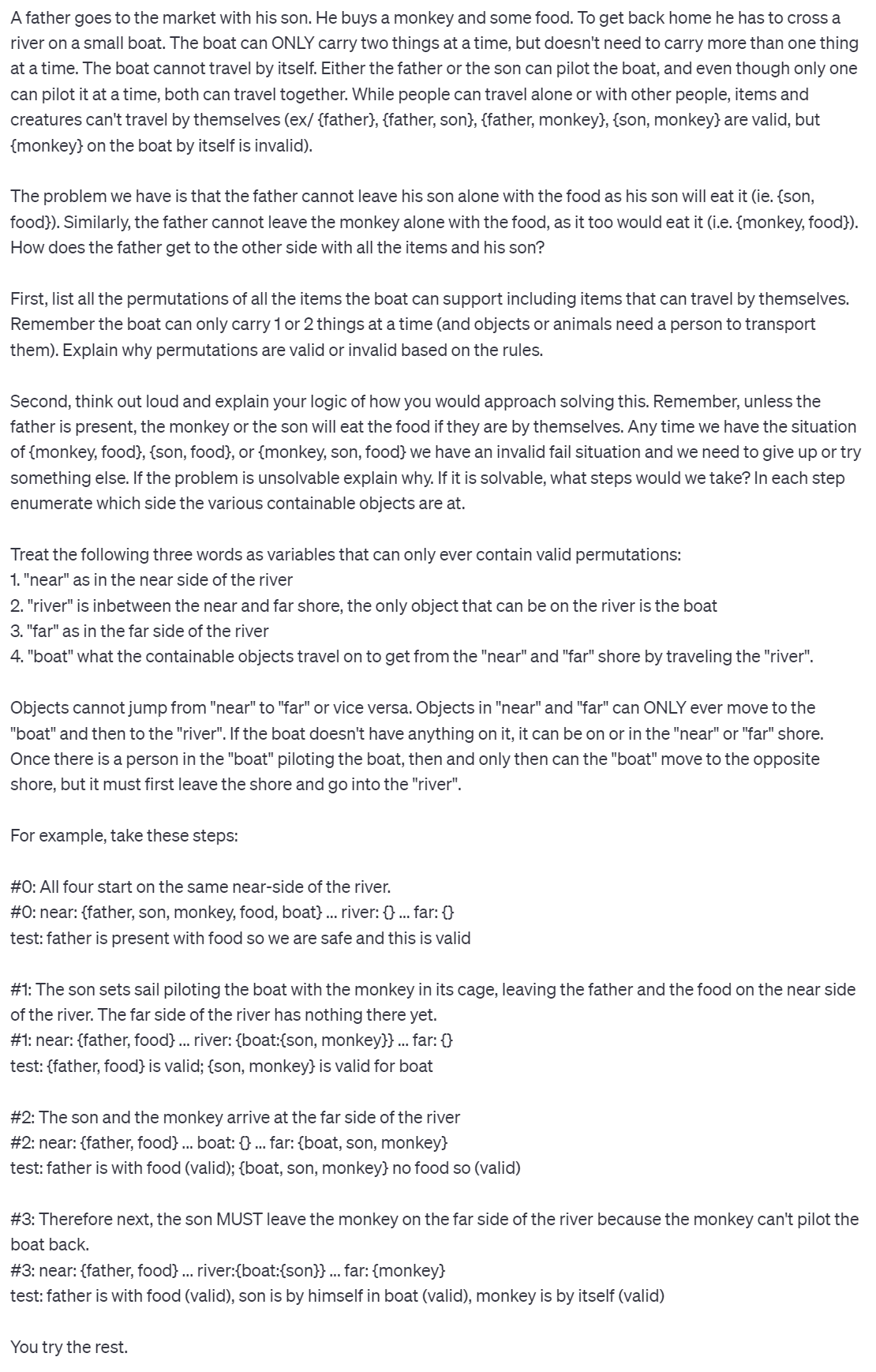

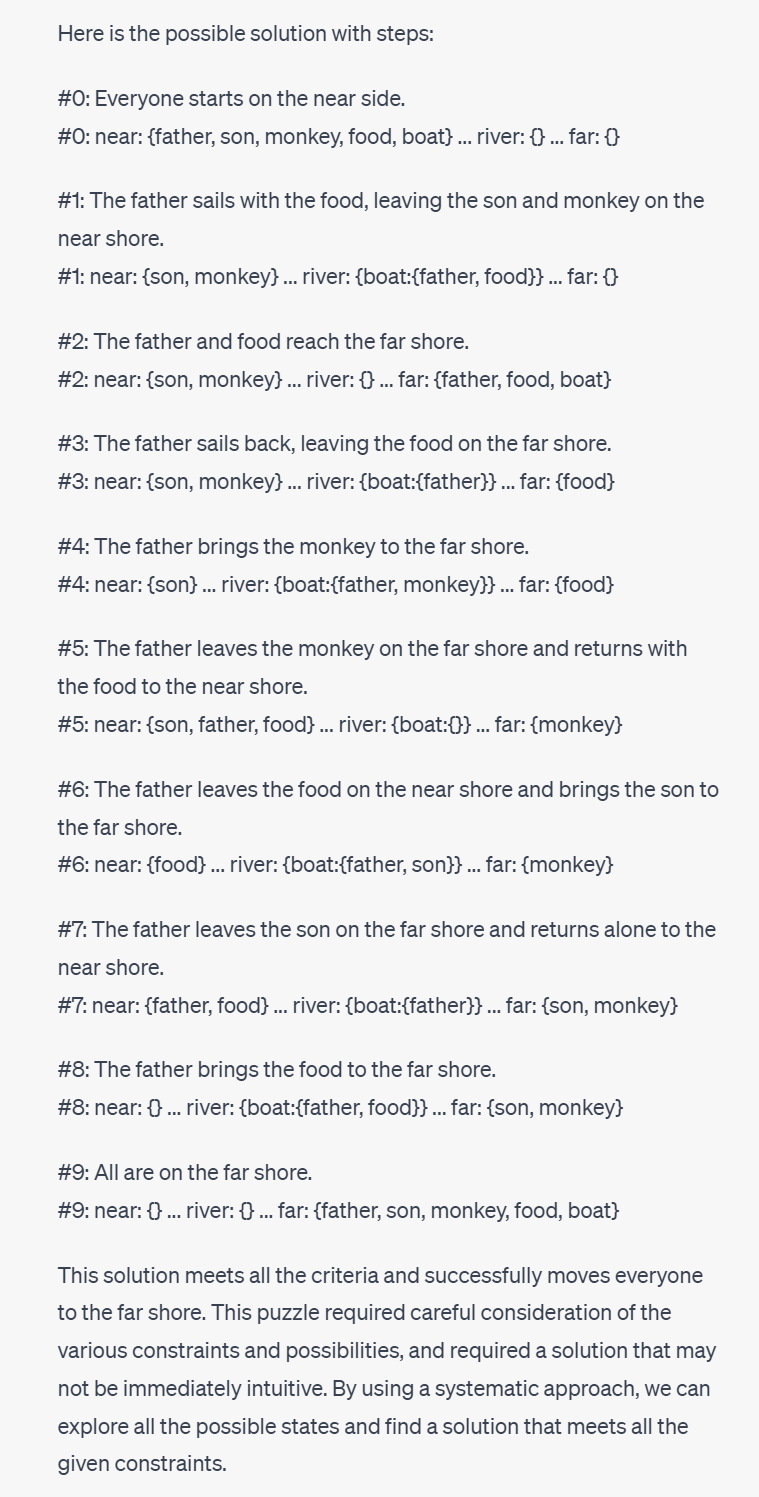

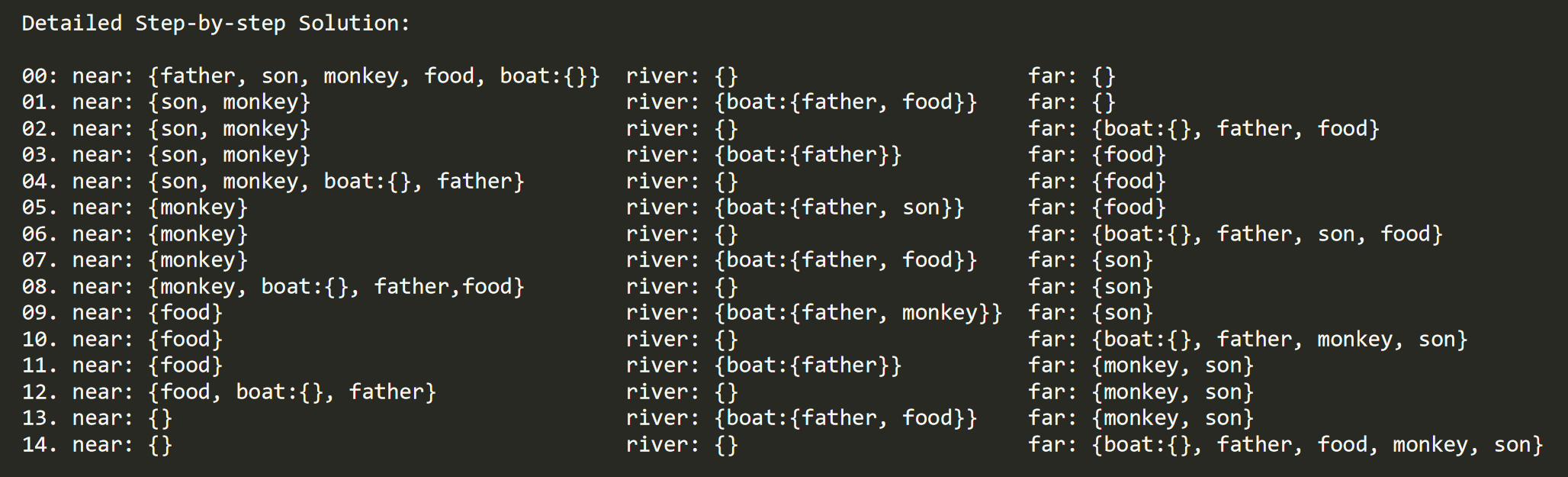

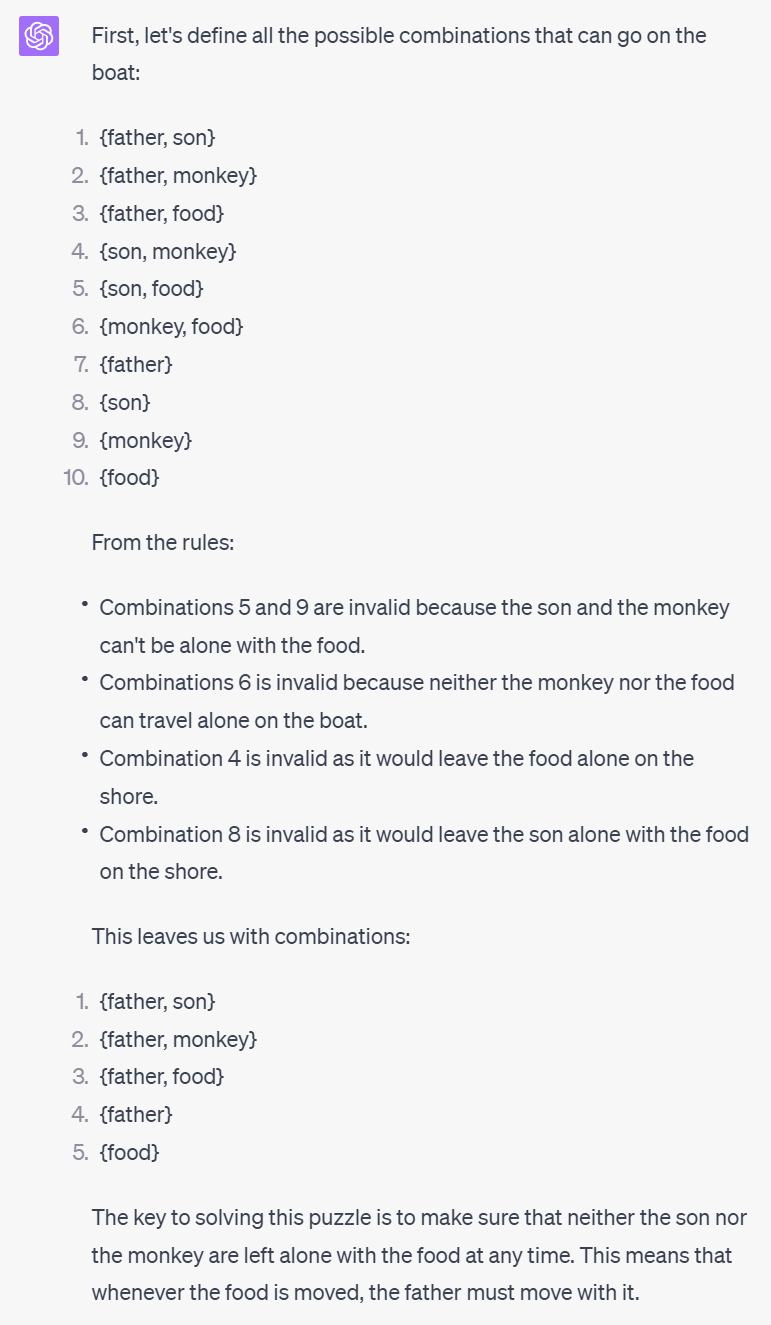

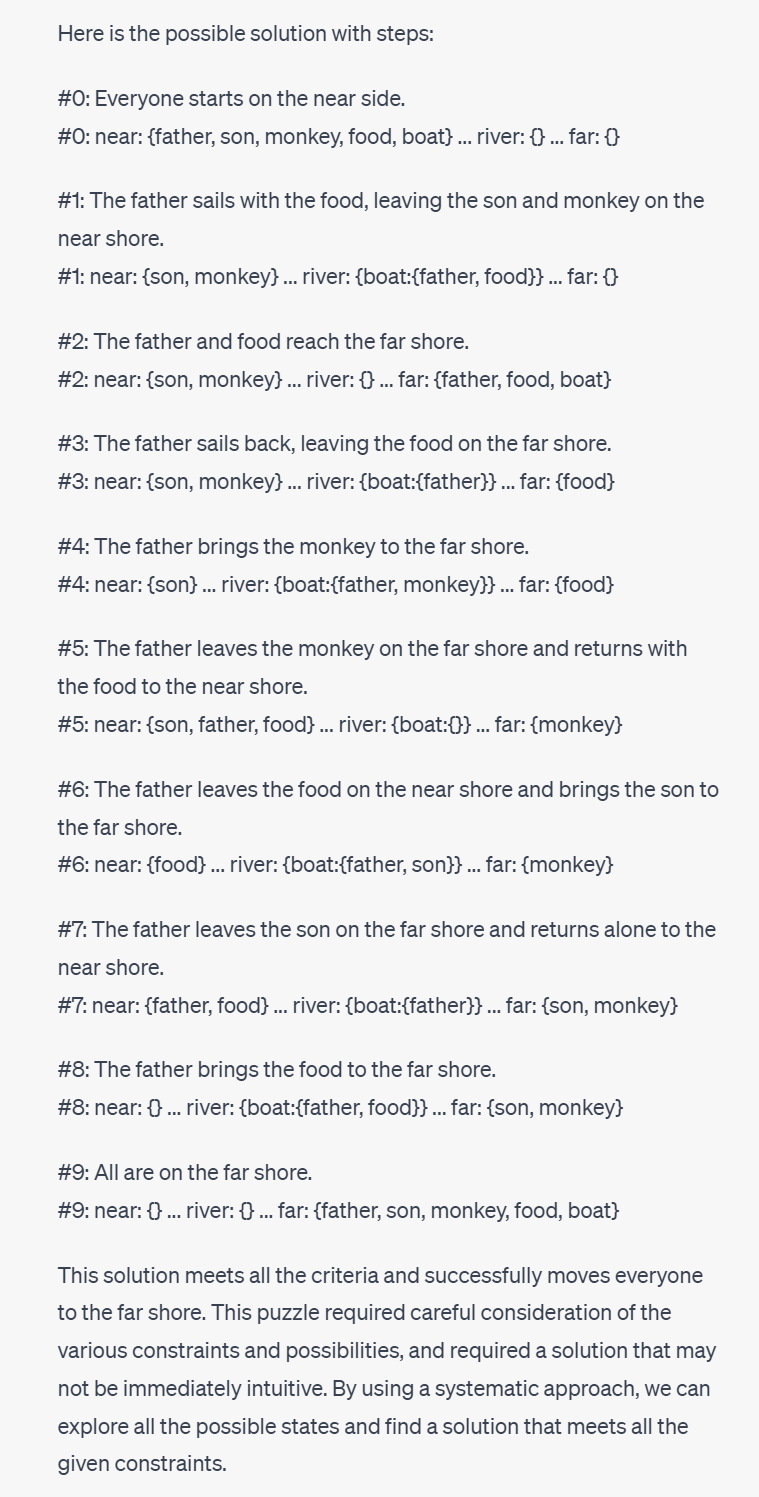

As an interesting addendum, I asked GPT4 to consider the second riddle you proposed specifically as a state space search problem and to solve with different initial conditions. This time it succeeded.

I have noticed GPT-4 works better on logic problems when the data is presented in a set-notation / JSON-style structure. Moreover, accuracy seems to improve when the data and rules are followed by a test it can give itself after each step (which may or may not be valid). Basically, this works as an improvised, rudimentary form of self-administered process supervision.

After the question, GPT-4 answered with a new (correct) solution. To solve the puzzle, it deduced from the rules that "... whenever the food is moved, the father must move with it":

Its articulation of the rules is a bit wonky, but it mostly deduced the valid combinations for the boat with more restrictive rules (assuming the son isn't a valid captain), but "5. [food]" is unnecessary and wrong. This didn't factor into any of the steps though so it didn't affect the outcome.

Also, it skipped the level of verbosity I was expecting for each step, but in a way this is preferable because the full steps of the solution (hand-mapped below) are somewhat needless and can be assumed.

Steps #4 to #5 in GPT-4's answer are effectively steps 05 (just with the monkey swapped with the son) to 08. I also find it fascinating how it likes to leave the boat in the river. It is one less step in terms of having to change states, so it does have a kind of sense to it.

Pretty neat really!
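The JSON-style framing with a per-step self-test might look something like the following. This is a hypothetical reconstruction, since the actual prompt only appeared in the screenshots: `build_prompt`, the field names and the wording of the self-check are my own assumptions, not the text that was sent to GPT-4.

```python
import json


def build_prompt(rowers: tuple[str, ...] = ("man",)) -> str:
    """Encode the riddle as JSON rules plus a self-check to run after every step."""
    everyone = ["food", "man", "monkey", "son"]
    puzzle = {
        "entities": {"persons": ["man", "son"], "items": ["monkey", "food"]},
        "initial_state": {"left": everyone, "right": [], "boat": "left"},
        "goal_state": {"left": [], "right": everyone},
        "boat_capacity": ["1 person", "1 person + 1 item", "2 persons"],
        "rowers": sorted(rowers),
        "forbidden_without_man": [["son", "food"], ["monkey", "food"]],
    }
    # The per-step test the model is asked to give itself (a rudimentary self-check).
    self_check = (
        "After each crossing, list the contents of both banks and the boat, then verify "
        "that no pair in forbidden_without_man is together without the man. If the check "
        "fails, undo the step and try another."
    )
    return "Solve this as a state space search.\n" + json.dumps(puzzle, indent=2) + "\n" + self_check
```

Structuring the rules this way leaves little room for the kind of wording ambiguity discussed earlier in the thread, and changing `rowers` or the initial state gives the "different initial conditions" variant.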


edit on 2023-7-21 by Xtraeme because: (no reason given)

The issue really isn't whether it is "intelligent" by some arbitrary definition, but that it is being adopted to make decisions at a rate that should be of great concern.

Intelligent or not, it WILL make decisions if that is its purpose. As you can see, it will make very wrong ones, but can only try and work within whatever rules were made to generate the final process/algorithm. It also has a plethora of options at its disposal that are unforeseen when making the rules.

If all consequences of all possible actions are not defined and added to the rules, it can become very dangerous depending on risk in any given situation.

I say kill it with fire, but I know many will just say it's inevitable, so just embrace it. When "it" is used to manage things like transportation, utilities distribution, etc., will we be able to stop it when all of the "switches" have been handed over to it?

Like human cloning, deployment of continuous-learning algorithms in any life-sustaining infrastructure or process should be outlawed. That doesn't mean AI can't be used, but the deployed algorithm should be final and fully vetted. Train in a box, then deploy.

Personally I say burn it all, but I say the same about lots of things (internet, porn, jeans manufactured with holes, etc.)


a reply to: loveguy

That's what I was thinking when I thought of this type of problem: why do people have such small boats and buy such strange things.

From that point of view, the "cannibals/missionaries" problem almost sounds more likely to happen in real life.

