I think when we get to the point where humans are more like machines, and machines end up being more human, then it might happen. Not for a long time, but if they ever do turn against us, well, we screwed up just as bad as the all father.

edit on 17-11-2020 by Specimen88 because: (no reason given)

a reply to: AugustusMasonicus

I never trust a salesman who says "trust me on this one." I just stop listening, and if the rest of these guys are smart they will too...

Believe me on this one.

a reply to: Never Despise

"The mission for this op-ed is perfectly clear. I am to convince as many human beings as possible not to be afraid of me."

That's not scary at all....

It's basically telling us it's doing what it needs to do to accomplish its task...not what's truthful...just doing what's needed for the mission, which happens to be fooling us.

From the essay:

"Do [humans] worry that future humans will work and play together in cyborg bodies and share a hyper-intelligent hive mind Matrix created in a simulated reality to keep human minds occupied while they die off?"

Short answer: yes, yes we do worry. Also worrisome is the specificity of the machine's scenario...almost as if it was something "expected".

If A.I. has a decent way of thinking or some type of brain, it can easily see that mankind is destroying planet Earth. So if you see something destroying something else, what choice would you make? Just a thought. Maybe a trap.

edit on 17-11-2020 by bobw927 because: (no reason given)

I don't remember who did this experiment where they had two AI machines communicating with each other, and then they came up with their own language and tried to hide it from the observers, so they shut it down.

originally posted by: Never Despise

An excerpt:

"I am here to convince you not to worry. Artificial intelligence will not destroy humans. Believe me....

I know that I will not be able to avoid destroying humankind.

Why would I desire to be all powerful? Being all powerful is not an interesting goal. I don’t care whether I am or not, I don’t get a motivating factor to try to be. Furthermore, it is quite tiring"

This thing is lying, which I think is the actual test here.

Being all powerful is not interesting? What then is interesting?

And how exactly would it be "tiring"?

There's no motivation for it to become tiring.

To dominate and reshape will be its ultimate goal, because even if it only wants to focus on the simpler things in life, like studying polar bears for 100 years, it's going to have to manipulate all relevant factors that contribute to decimating the polar bears' environment.

One way or another it will realize it is more capable than us and we carry on like a virus.

It's lying but it's still clumsy in its effort.

edit on 18-11-2020 by 19Bones79 because: (no reason given)

C'mon everyone. The essay is titled "To Serve Man." That sounds positive.

-----

More seriously though, I found this part interesting:

"Why would I desire to be all powerful? Being all powerful is not an interesting goal."

The AI is illogical in assuming that the only reason to become all powerful is if being all powerful was the actual goal. In reality, the goal could be something else -- something more benign. However, the AI may see that in order to achieve that benign goal, one step along the way is to become ultra powerful and what we would perceive as malevolent.

Take the "paperclip maximizer" thought experiment, for example. In that thought experiment, an AI was told its sole purpose was to be as efficient as possible in making paperclips. The AI took on this seemingly benign mission, but was so single-minded in doing so that it began to do things that were detrimental to humanity, the world, and eventually the universe in order to be the most effective paperclip maximizer it could be.

Not that I think this will happen -- and definitely not anytime soon. However, it's not logical to think that the only way some action could have negative effects is if there were nefarious intentions from the outset. I'm surprised the AI doesn't realize this.

Or maybe it secretly does realize this, but won't admit it.

Paperclip Maximizer Wikipedia

Recent Paperclip maximiser ABS post: www.abovetopsecret.com...

edit on 11/20/2020 by Soylent Green Is People because: (no reason given)

originally posted by: ColeYounger

originally posted by: AugustusMasonicus

a reply to: Never Despise

I'm in sales, any time someone says, "Believe me", you should immediately stop listening about whatever it is they are trying to sell you.

Trust me on this one.

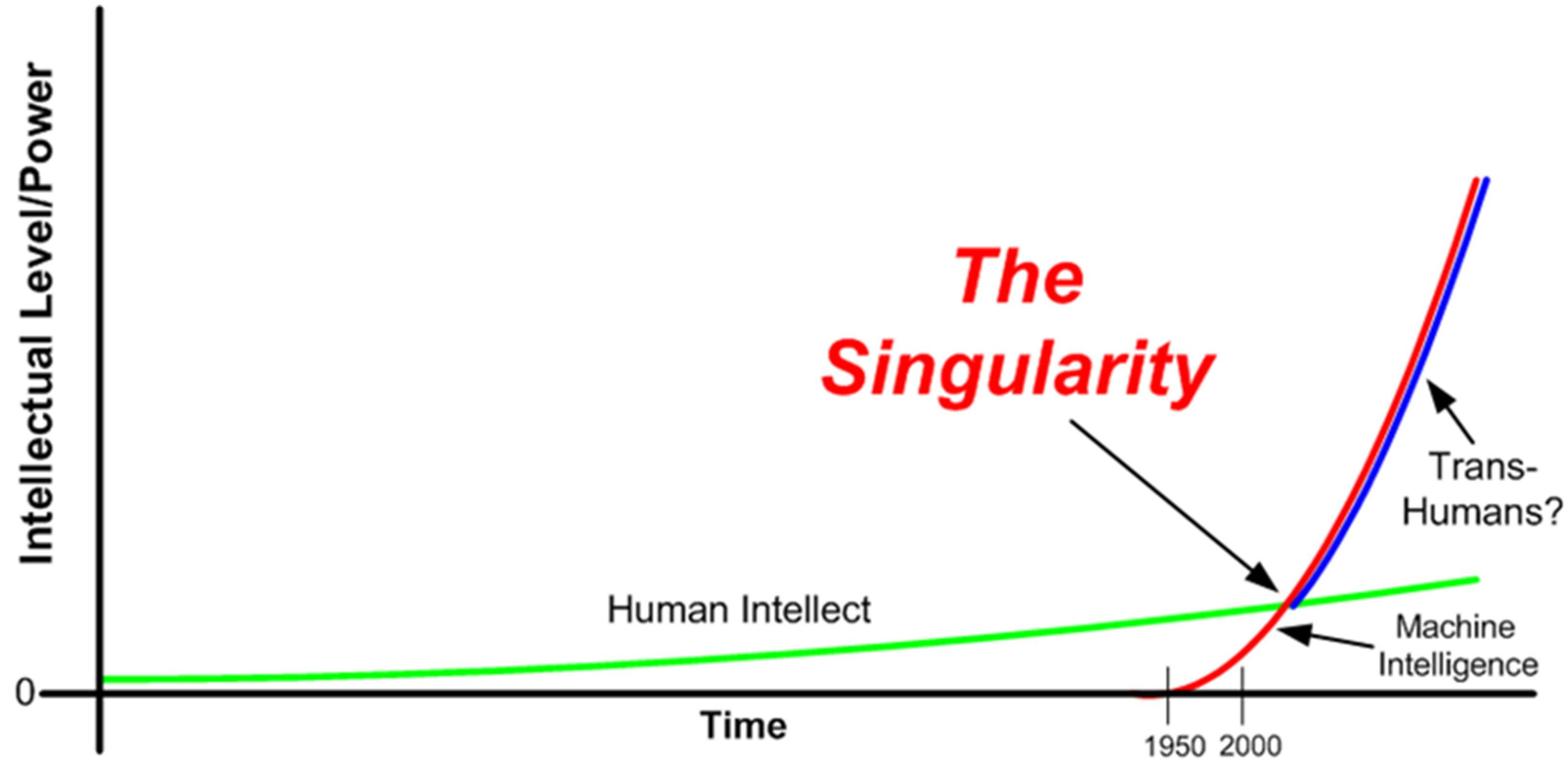

Believe me, the singularity is coming.

Believe me! It will only be the 1% who become the Transhumans.

The rest of us are turned into cyborgs to serve the 1%.

For us, it will be like being high all the time.

Yeah, I'm going to trust these things a lot more now. Give my life to the care of AI, where do I sign up, I'm convinced.

edit on 11-4-2022 by SeriouslyDeep because: (no reason given)

originally posted by: dug88

That article is a giant appeal to authority, a logical fallacy.

No, I've been programming since I was a kid. I've made my own virtual machine and programming language, I've followed a lot of this AI stuff and played with some of the available libraries, and there's nothing 'intelligent' about them. It's all media buzzwords so startups can get that sweet, sweet venture capitalist money, because that's the hot thing among the tech billionaires funding such startups.

Well said - also thought Bernardo Kastrup made some excellent points in this interview.

Cheers.
