I did a search here for any posts about this and only found one that seemed to be going down the same road I have. This is a conspiracy I am not sure I believe. I don't exactly believe the official 9/11 story, but it just seems like so much is going on here, maybe on purpose, to muddy the waters and get people going in many different directions. So rather than resurrect an old post from 2008, I am just going to make a new post about it, with more information and some extra tidbits I found. Just read through this and tell me what you guys think. Is it all a coincidence? Are there other posts about this here that I didn't find?

Daniel Lewin was the first victim of 9/11. Seated in 9B on American Airlines flight 11, he was stabbed by the hijacker sitting behind him. He was the passenger stabbed in business class that Betty Ong talks about in her infamous call from the plane. He was born May 14, 1970 in Denver, CO and moved to Israel with his parents at age 14, where they stayed for the rest of his childhood. All of the information I am providing in this section about Mr. Lewin is from his Wikipedia page here en.wikipedia.org... He served for four years in the IDF and was an officer in Sayeret Matkal. Now I will give some information about this specific armed forces group and its notable members.

General Staff Reconnaissance Unit (formerly Unit 269 or Unit 262), more commonly known as Sayeret Matkal (Hebrew: סיירת מטכ״ל), is the special reconnaissance unit (sayeret) of Israel's General Staff (matkal). It is considered one of the premier special forces units of Israel.

First and foremost a field intelligence-gathering unit, conducting deep reconnaissance behind enemy lines to obtain strategic intelligence, Sayeret Matkal is also tasked with a wide variety of special operations, including black operations; as well as combat search and rescue, counterterrorism, hostage rescue, HUMINT, irregular warfare, long-range penetration, conducting manhunts, and reconnaissance beyond Israel's borders. The unit is modeled after the British Army's Special Air Service (SAS), taking the unit's motto "Who Dares Wins". The unit is the Israeli equivalent of the SAS. It is directly subordinate to the Special Operations Division of the IDF's Military Intelligence Directorate.

Of course, Benjamin Netanyahu was part of this group. It seems many of the former members have gone on to high-ranking positions.

Sayeret Matkal veterans have gone on to achieve high positions in Israel's military and political echelons. Several have become IDF Generals and members of the Knesset. Ehud Barak's career is an example: a draftee in 1959, he later succeeded Unit 101 commando Lt. Meir Har-Zion in becoming Israel's most decorated soldier. While with Sayeret Matkal, Barak led operations Isotope in 1972 and Spring of Youth in 1973. He later advanced in his military career to become the IDF Chief of Staff between 1991 and 1995. In 1999 he became the 10th Prime Minister of Israel.

And yes, Mr. Lewin is also listed as a notable member on the Wikipedia page for this group: en.wikipedia.org...

I am not alleging any connection between service in this group and attaining high positions within the government of Israel. I DO NOT KNOW what goes on in Israel, and for all I know some other group has more former members in high positions than this one and this is a nothingburger. I am just presenting what I have found.

Now I am going to return to more background on Mr. Lewin.

He attended the Technion – Israel Institute of Technology in Haifa while simultaneously working at IBM's research laboratory in the city. While at IBM, he was responsible for developing the Genesys system, a processor verification tool that is used widely within IBM and in other companies such as Advanced Micro Devices and SGS-Thomson.

Upon receiving a Bachelor of Arts and a Bachelor of Science, summa cum laude, in 1995, he traveled to Cambridge, Massachusetts, to begin graduate studies toward a Ph.D at the Massachusetts Institute of Technology (MIT) in 1996. While there, he and his advisor, Professor F. Thomson Leighton, came up with consistent hashing, an innovative algorithm for optimizing Internet traffic. These algorithms became the basis for Akamai Technologies, which the two founded in 1998. Lewin was the company's chief technology officer and a board member, and achieved great wealth during the height of the Internet boom.

continuing in comment below

edit on 25-8-2024 by Shoshanna because: format

So what of Akamai? This article from August 1999 about Akamai has been removed from Wired's website, but thankfully it is archived on the Wayback Machine at the Internet Archive.

web.archive.org...

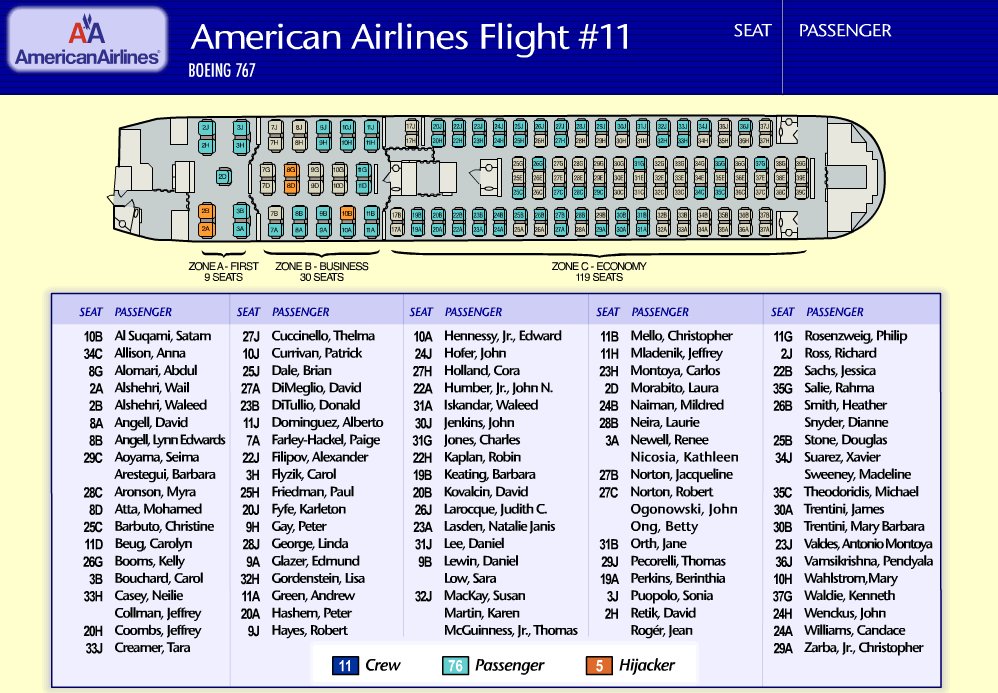

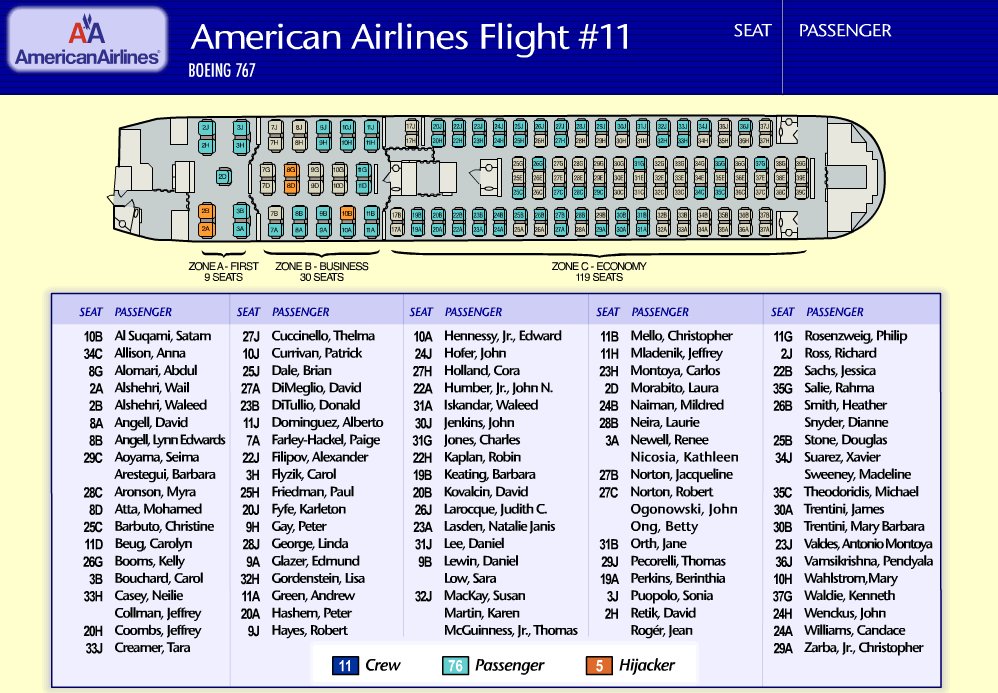

Paul Sagan says that Danny could leave the company to finish his PhD and publish his thesis, but then they'd have to kill him. Everyone else at Akamai is encouraged to complete their academic work, a slew of them at MIT, but Danny - him they'd have to off. He knows too much.

Danny Lewin is an algorithms guy, and at Akamai Technologies, algorithms rule. After years of research, he and his adviser, professor Tom Leighton, have designed a few that solve one of the direst problems holding back growth of the Internet. This spring, Tom and Danny's seven-month-old company launched a service built on these secret formulas.

What an eerie coincidence. Nothing more than that, I know.

In January, Akamai began running beta versions of FreeFlow, serving content for ESPN.com, Paramount Pictures, Apple, and other high-volume clients. (Akamai withholds the names of the others, but you can tell if a site is using the service by viewing the page source and looking for akamaitech.net in the URLs. A cursory test reveals "Akamaized" content at Yahoo! and GeoCities.)

ESPN.com and Paramount have been good beta testers - ESPN.com because it requires frequent updates and is sensitive to region as well as time, and Paramount because it delivers a lot of pipe-hogging video. On March 11, while ESPN was covering the first day of NCAA hoops' March Madness, Paramount's Entertainment Tonight Online posted the second Phantom Menace trailer. FreeFlow handled up to 3,000 hits per second for the two sites - 250 million in total, many of them 25-Mbyte downloads of the trailer. But the system never exceeded even 1 percent of its capacity. In fact, as the download frenzy overwhelmed other sites, Akamai picked up the slack. Before long, Akamai became the exclusive distributor of all Phantom Menace QuickTimes, serving both of the official sites, starwars.com and apple.com.

So how does it work? Companies sign up for Akamai's FreeFlow, agreeing to pay according to the amount of their traffic. Then they run a simple utility to modify tags, and the Akamai network takes over. Throughout the site, the system rewrites the URLs of files, changing the links into variables to break the connection between domain and location. On www.apple.com, for example, the link www.apple.com/home/media/menace_640qt4.mov, specifying the 640 x 288 Phantom Menace QuickTime trailer, might be rewritten as a941.akamai.com/7/941/51/256097340036aa/www.apple.com/home/media/menace_640qt4.mov. Under standard protocols, a941.akamaitech.net would refer to a particular machine. But with Akamai's system, the address can resolve to any one of hundreds of servers, depending on current conditions and where you are on the Net. And it can resolve a different way for someone else - or even for you, a few seconds later. (The /7/941/51/256097340036aa in the URL is a fingerprint string used for authentication.) This new method is more complicated, but like modern navigation, it opens new vistas of capacity and commerce.
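For anyone who, like me, is not a tech person, here is a rough sketch of the two ideas in that paragraph: rewriting a file's URL so the hostname no longer names the site's own machine, and letting that hostname resolve to a different server depending on where you are and how busy things are. This is only a toy under stated assumptions, not Akamai's actual method. The host and fingerprint string are copied from the article's example; the server names, regions, load numbers, and the "least loaded in your region" rule are all made up for illustration.

```python
from urllib.parse import urlsplit

# Host and fingerprint taken from the article's example URL.
# How the real fingerprint is computed is not described there.
EDGE_HOST = "a941.akamaitech.net"
FINGERPRINT = "7/941/51/256097340036aa"


def akamaize(url: str) -> str:
    """Rewrite an origin URL so it points at the edge hostname instead."""
    # urlsplit only recognizes the host if the URL has a scheme or starts with "//".
    parts = urlsplit(url if "://" in url else "//" + url)
    return f"{EDGE_HOST}/{FINGERPRINT}/{parts.netloc}{parts.path}"


# Hypothetical edge servers: name -> (region, current load from 0.0 to 1.0).
SERVERS = {
    "edge-boston": ("us-east", 0.70),
    "edge-newyork": ("us-east", 0.20),
    "edge-singapore": ("asia", 0.10),
}


def resolve(client_region: str) -> str:
    """Pick the least-loaded server in the client's region, else anywhere."""
    local = {name: load for name, (region, load) in SERVERS.items()
             if region == client_region}
    pool = local or {name: load for name, (_, load) in SERVERS.items()}
    return min(pool, key=pool.get)


rewritten = akamaize("www.apple.com/home/media/menace_640qt4.mov")
print(rewritten)
print(resolve("us-east"))  # same hostname, different machine per visitor
print(resolve("asia"))
```

The point of the sketch is only that the same address can be answered by different machines at different moments. The real system made that choice at much larger scale and with far more information about network conditions.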

Now, I do not understand what they are talking about in regard to the technology. Was this a big innovation? A huge breakthrough? I don't know, because I am not a tech person.

In some ways, sending information around the traditional Internet resembles human transport, pre-Phoenicia. The Net was originally designed like a series of roads connecting distinct sources of content. Different servers, physical hardware, specialized in their own individual data domains. As first conceived, an address like nasa.gov would always correspond to dedicated servers located at a NASA facility. When you visited www.ksc.nasa.gov to see a shuttle launch, you connected to NASA's servers at Kennedy Space Center, just as you traveled to Tivoli for travertine marble instead of picking it up at your local port. When you ran a site, your servers and only your servers delivered its content.

This routing system worked fine for years, but as users move to fatter pipes, like DSL and broadband cable, and as event-driven supersites emerge, the protocols tying information to location cause a bottleneck. Back when The Starr Report was posted, Congress' servers couldn't keep up with hungry surfers. When Victoria's Secret ran its Super Bowl ad last February, similar lusts went unsated. The Heaven's Gate site in 1997 quickly followed its cult members into oblivion. And when The Phantom Menace trailers hit the Web this spring, a couple of sites distributing them went down.

This is the "hot spot" problem: When too many people visit a site, the excessive load heats it up like an overloaded circuit and causes a meltdown. Just as something on the Net gets interesting, access to it fails.

For more time-critical applications, the stakes are higher. When the stock market lurches and online traders go berserk, brokerage sites can hardly afford to buckle. In retail, slow responses will send impatient customers clicking over to the competition. Users may have Pentium IIIs and ISDN lines, but when a site can't keep up with demand, they feel like they're on a slow dialup. And users on relatively remote parts of the network - even tech hubs like Singapore - often suffer slow responses, not just during peak traffic.

ISPs address this problem by adding connections, expanding capacity, and running server farms to host client sites on many machines, but this still leaves content clustered in one place on the network. Publishers can mirror sites at multiple hosting companies, helping to spread out traffic, but this means duplicating everything everywhere, even the files no one wants. A third remedy, caching, temporarily stores copies of popular files on servers closer to the user, but out of the original site's control. Naturally, site publishers don't like this - it delivers stale content, preserves errors, and skews usage stats. In other words, massive landlock.

So in 1998, with their new algorithms in hand, Tom Leighton and Danny Lewin found themselves facing a sort of manifest destiny. The Web's largest sites were straining to meet demand - and frequently failing. Most needed better traffic handling, a way to cool down hot spots and speed content delivery overall. And Tom and Danny had conceived a solution, a grand-scale alternative to the Net's routing system.

Anyway, I just thought it is interesting that Lewin is described as the first victim of 9/11 and was stabbed by Satam al-Suqami, who was seated in 10B, directly behind Mr. Lewin, according to this diagram of Flight 11.

I am wondering whether the hijacker thought he would be a threat and so got him out of the way first, or recognized him in some capacity from his prior service.

Free mind flow:

The IDF member boarding the plane was killed first, and here is why: combat training. He was a risk to the mission, like sky marshals.

They would need help from boarding personnel to get the seat behind the IDF man, the best position to strike fast without warning him by approaching.

a reply to: Naftalin

He was described by friends as a really in-shape guy who worked out a lot and had extensive combat training. His friends said they didn't think being stabbed would stop him, but if you stab the right spot, nobody is surviving that.

According to the recorded FAA information, when the hijackers attacked one of the flight attendants, Lewin rose to protect her and prevent the terrorists from entering the cockpit. After he was stabbed, he bled to death on the floor, and two other flight attendants and the captain were murdered. The hijackers took over the cockpit and diverted the plane on its murderous path to New York.

“I’m sure he acted out of pure instinct,” said Jonathan.

“To this day, those of us who knew him well can’t figure out how only five terrorists managed to overpower him,” said Leighton less than a year after the attack

www.jpost.com...

So I'm not sure whether they knew he was prior IDF, or whether he was just a large, well-built, in-shape man on the plane and they felt he was a threat, so they took him out first.

a reply to: Shoshanna

This is speculation I am throwing onto the playing field; I do not have evidence.

His friends say being stabbed would not stop him. With respect to the friends of the deceased, I do not think so. That is a romanticized idea of stabbing. A real stabbing is multiple strikes per second when it is a sneak attack and the intent is to kill. Nobody takes that and functions like before. A stab from behind the seat into the chest or stomach hits vital organs: lung, heart, internal organs.

Yes, they did not need to know the man was IDF and a combatant. It could be random that they picked the biggest threat.

a reply to: Shoshanna

his friends said they didn't think being stabbed would stop him

WIKI:

Flight attendants on the plane who contacted airline officials from the plane reported that Lewin's throat was slashed, probably by the terrorist sitting behind him

The truth of 911 will never be known by us peons.

My take on it: all the planes had IDF members on board to secure the situation. While the official flights were in mid-air doing their own thing unharnessed, the transponder signals got switched to a drone aircraft that went on to the towers. Too much was at stake to trust some novice pilots to hit the right spot. The planes with the passengers landed at a secure airport, where those on board either were in on the plan and got a new identity or got killed.
