This is a very important paper because it exposes the materialist mindset of many in the Science community who treat any discussion or evaluation of evidence outside of their blind materialism as sacrilegious.

They start with the a priori assumption that science has to be explained in a way that feeds their materialism, and it doesn't matter that materialism can't explain gaps littered throughout science.

This is strictly based on belief. There's no law that states all of science must be explained in a way that pleases blind materialists.

Is controversial research into telepathy and other seeming ‘super-powers’ of the mind starting to be more accepted by orthodox science? In its latest issue, American Psychologist – the official peer-reviewed academic journal of the American Psychological Association – has published a paper that reviews the research so far into parapsychological (‘psi’) abilities, and concludes that the “evidence provides cumulative support for the reality of psi, which cannot be readily explained away by the quality of the studies, fraud, selective reporting, experimental or analytical incompetence, or other frequent criticisms.”

The new paper – “The experimental evidence for parapsychological phenomena: a review“, by Etzel Cardeña of Lund University – also discusses recent theories from physics and psychology “that present psi phenomena as at least plausible”, and concludes with recommendations for further progress in the field.

www.dailygrail.com...

The article then hits at the heart of the matter.

The paper begins by noting the reason for presenting an overview and discussion of the topic: “Most psychologists could reasonably be described as uninformed skeptics — a minority could reasonably be described as prejudiced bigots — where the paranormal is concerned”. Indeed, it quotes one cognitive scientist as stating that the acceptance of psi phenomena would “send all of science as we know it crashing to the ground”.

www.dailygrail.com...

UNINFORMED SKEPTICS!! WOW!!

PREJUDICED BIGOTS in the area of Psi! Again, this is the journal of the American Psychological Association. It doesn't get more mainstream than this!

www.apa.org...

Psi Research has been around for years and has a HUGE accumulation of evidence. Sadly, the evidence gets ignored in favor of blind rejection because it goes against someone's beliefs, and that has nothing to do with science. Here's an older video of Dean Radin called The Taboo of Psi. He goes over a lot of evidence.

Some of the methods used in Psi Research were adopted by Psychology Research.

Cardeña also notes that, despite its current, controversial reputation, the field of psi research has a long history of introducing methods later integrated into psychology (e.g. the first use of randomization, along with systematic use of masking procedures; the first comprehensive use of meta-analysis; study preregistration; pioneering contributions to the psychology of hallucinations, eyewitness reports, and dissociative and hypnotic phenomena). And some of psychology’s most respected names, historically, have also shared an interest in parapsychology, including William James, Hans Berger (inventor of the EEG), Sigmund Freud, and former American Psychological Association (APA) president Gardner Murphy.

www.dailygrail.com...

The paper then goes over evidence for everything from Implicit cognition to Remote Viewing. It's a really good read, so check it out!

Here's the published paper.

The experimental evidence for parapsychological phenomena: A review.

www.ncbi.nlm.nih.gov...

edit on 29-6-2018 by neoholographic because: (no reason given)

a reply to: neoholographic

Excellent thread neo.

The points you've touched on are very important to consider, in the very least.

Outright denial and logical fallacies such as claiming that a lack of evidence is evidence of lack, etc. have no place in true empiricism. Needless to say, some of us are certain of various evidences.

I've been down this road. Put the most hardened skeptic, cookie cutter, by the books psych doctor around me for even a single, in person conversation, and they'll realize they were quite simply, short sighted. What can I say...I bleed high strangeness, as many ATS'rs do.

I really don't mean that in offense, I mean that as a blunt fact, and many many folks out there are right on that level of awareness.

edit on 6292018 by CreationBro because: (no reason given)

a reply to: neoholographic

Thanks for the info mate.

I do recall one farcical denial of Psi.

Some years ago an experiment was undertaken to try and influence a ball dropping through a vertical "pinball" maze. Individuals were asked to focus on getting the ball to land to the left or right.

The results were clear. The statistics clearly showed that people were able to influence the track of the ball by thought alone.

Unfortunately, mainstream scientists ignored the result because, and I'm paraphrasing, THERE WAS TOO MUCH DATA.

Lack of evidence is still lack of evidence though,

originally posted by: CreationBro

a reply to: neoholographic

Excellent thread neo.

The points you've touched on are very important to consider, in the very least.

Outright denial and logical fallacies such as claiming that a lack of evidence is evidence of lack, etc. have no place in true empiricism. Needless to say, some of us are certain of various evidences.

I've been down this road. Put the most hardened skeptic, cookie cutter, by the books psych doctor around me for even a single, in person conversation, and they'll realize they were quite simply, short sighted. What can I say...I bleed high strangeness, as many ATS'rs do.

I really don't mean that in offense, I mean that as a blunt fact, and many many folks out there are right on that level of awareness.

a reply to: neoholographic

It says that people who don’t believe are prejudiced bigots.

The paper begins by noting the reason for presenting an overview and discussion of the topic: “Most psychologists could reasonably be described as uninformed skeptics — a minority could reasonably be described as prejudiced bigots — where the paranormal is concerned”. Indeed, it quotes one cognitive scientist as stating that the acceptance of psi phenomena would “send all of science as we know it crashing to the ground”.

But we don’t believe because the “evidence” is not there

a reply to: Woodcarver

You, you, you evidencist you!

Psi seems to fit into the category of gods. Not really testable.

When an experiment is shown to be statistically equal to chance it's because the "vibes" got messed up by the experiment. How very quantum like. The thing is there is math behind quantum phenomena which predicts exactly that.

Where's the psi math?

originally posted by: CreationBro

a reply to: neoholographic

Excellent thread neo.

The points you've touched on are very important to consider, in the very least.

Outright denial and logical fallacies such as claiming that a lack of evidence is evidence of lack, etc. have no place in true empiricism. Needless to say, some of us are certain of various evidences.

I've been down this road. Put the most hardened skeptic, cookie cutter, by the books psych doctor around me for even a single, in person conversation, and they'll realize they were quite simply, short sighted. What can I say...I bleed high strangeness, as many ATS'rs do.

I really don't mean that in offense, I mean that as a blunt fact, and many many folks out there are right on that level of awareness.

Thanks and the evidence is overwhelming. Look at this.

Results of the GCP studies have been published on many occasions over the past 16 years, but never widely noted by the general media. Now may be the time to start paying attention.

Why? For one thing, the statistical certainty has mounted to the point that it's hard to ignore. Toward the end of 1998, the odds against chance started exceeding one in 20, an acceptable level in many disciplines. Then, with added studies, the level of certainty began to zoom. By the year 2000, the odds against chance exceeded one in 1,000; and in 2006, they broke through the one in a million level; they're now more than one in a trillion with no upper limit in sight.

This far exceeds the bar for statistical significance used in many fields, such as medicine and weather forecasting. Odds against chance ranging from 20-to-one to 100-to-one are commonly considered sufficient. The certainty level is set unusually high for the Higgs Boson; data for validating its existence are considered acceptable if they exceed one in 3.5 million. The GCP level of statistical certainty is now more than 285,000 times greater than that.

noosphere.princeton.edu...

WOW!

The certainty level for this signal, which shows thought can cause a random system to behave in a non random way, is 285,000 times greater than the certainty level set for the Higgs Boson. They even went up to a 5.9 Sigma level for the Higgs, which means there's roughly a 1 in 300 million chance of seeing a signal that strong if it was just background noise. With this signal, it's 1 IN A TRILLION.
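Here is a rough sketch of how a sigma level converts to odds against chance, assuming the test statistic follows a normal distribution. The function names are made up for illustration and don't come from the GCP or from CERN. Whether a one-sided or two-sided tail is quoted changes the figure by a factor of two.

```python
import math

def one_sided_p(sigma: float) -> float:
    """Upper-tail probability of a standard normal beyond `sigma`."""
    return 0.5 * math.erfc(sigma / math.sqrt(2))

def odds_against_chance(sigma: float, two_sided: bool = False) -> float:
    """Return N such that the chance result is '1 in N' at the given sigma."""
    p = one_sided_p(sigma)
    if two_sided:
        p *= 2  # count deviations in either direction
    return 1 / p

for sigma in (5.0, 5.9):
    print(
        f"{sigma} sigma: 1 in {odds_against_chance(sigma):,.0f} (one-sided), "
        f"1 in {odds_against_chance(sigma, two_sided=True):,.0f} (two-sided)"
    )
```

The one-sided figure at 5 sigma comes out close to the one in 3.5 million quoted for the Higgs, and the two-sided figure at 5.9 sigma is in the neighborhood of 1 in 300 million.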

Also this:

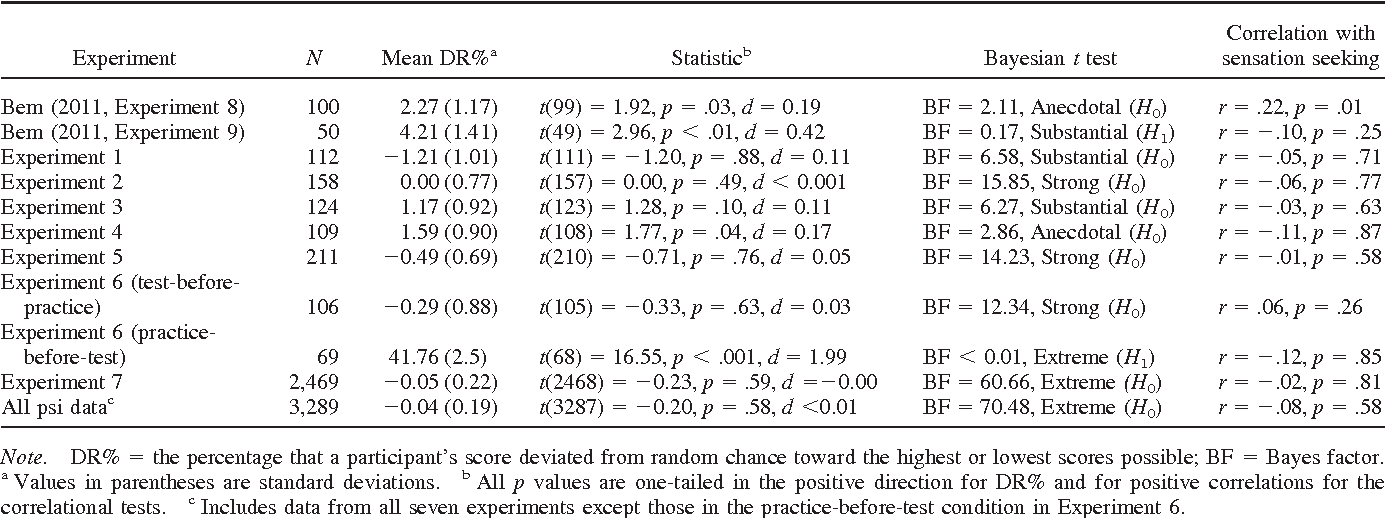

The effect size in the Retroactive Priming experiment of Bem was .25. This is small but significant. The p value was .006. Anything under .05 is considered significant.

This means the .25 will be spread out amongst the population and some people will exhibit stronger Psi effects and in others it will be much weaker.

An effect size of .5 up to .8 is medium, and .8 and up is large.

For comparison, the effect size of aspirin in relation to preventing heart attacks was .03. This is very small compared to Dr. Bem's test yet this result was significant enough for the FDA to say places like Bayer can use it in commercials and Doctors put patients who have had heart attacks on aspirin to prevent a second heart attack.
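To make the small/medium/large labels concrete, here's a rough sketch that computes Cohen's d for two groups and labels it with Cohen's usual rule-of-thumb cutoffs (0.2, 0.5, 0.8). The sample scores are invented and the function names are only illustrative. It assumes the effect size in question is a standardized mean difference.

```python
import math
from statistics import mean, variance

def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample variance."""
    na, nb = len(group_a), len(group_b)
    pooled_var = (
        (na - 1) * variance(group_a) + (nb - 1) * variance(group_b)
    ) / (na + nb - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)

def label_effect(d: float) -> str:
    """Cohen's rule-of-thumb labels, applied to the size of d."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below small"

# Invented scores for an experimental and a control group (illustration only).
experimental = [52, 55, 49, 61, 58, 54]
control = [50, 53, 47, 57, 55, 51]
d = cohens_d(experimental, control)
print(f"d = {d:.2f} ({label_effect(d)})")
```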

I have no math or evidence to back this up, but about ten years ago I saw a YouTube video of a psi wheel. Intrigued, I made one, cupped my hands around it and, after some time, I got it to move a little. With a little more practice I got it to spin.

People claimed the movement was caused by thermals coming from the hands so I took my hands away and I was still able to make it move.

After a few weeks of practicing, I was able to make it move even standing five feet away from it. I never had much control over it, like I couldn't make it spin on command or choose the direction, but I eliminated every variable and could absolutely make it move just by concentrating on it.

I never did anything more with it. I really just wanted to see for myself if it was real or not, but I can say that it is a completely real phenomenon.

I can only wonder what a lifetime of dedication to such a practice would yield. I don't have that kind of focus though.

a reply to: neoholographic

Thanks to age and experience I've slowly come around to the idea that 'psi' might be a real thing. Not like I'm 100% or have any missionary zeal about it; it's a personal belief and not much more.

One of the reasons why psi studies don't gain traction is because they never seem to bring more than a 'wow' moment. Sure enough, there's a jargon present and the terms aren't always reflected by a consensus. For example, QE is often bandied around and the users of the term don't appear to have a grasp of what it is. It's accrued the convenience of 'portals' in the way people cite QE to appear to explain things they can't actually explain.

Statement: Aliens, ghosts and demons all use portals. What exactly is a portal? Statement: Telepathy and precognition occur via quantum entanglement. So what is QE and how does it facilitate communication across time and space?

It's a given that the collective 'we' don't have all the answers to space and time. Where's all this dark matter etc? At the same time, physicists have done a fine job predicting and measuring particles and effects alongside Quantum Theory. As far as I'm aware, psi researchers haven't offered a testable hypothesis that predicts which particles transfer information from one consciousness to another.

It's not a case of lack of evidence; the evidence is plentiful. What we lack is an adequate way to measure the evidence.

Science is a business, no longer science.

originally posted by: Phage

a reply to: Woodcarver

You, you, you evidencist you!

Psi seems to fit into the category of gods. Not really testable.

When an experiment is shown to be statistically equal to chance it's because the "vibes" got messed up by the experiment. How very quantum like. The thing is there is math behind quantum phenomena which predicts exactly that.

Where's the psi math?

What?

This makes no sense. Why do I need Psi math when I have methods to conduct research in these areas that include math that's also used as SCIENCE in other disciplines, as the article pointed out?

Cardeña also notes that, despite its current, controversial reputation, the field of psi research has a long history of introducing methods later integrated into psychology (e.g. the first use of randomization, along with systematic use of masking procedures; the first comprehensive use of meta-analysis; study preregistration; pioneering contributions to the psychology of hallucinations, eyewitness reports, and dissociative and hypnotic phenomena). And some of psychology’s most respected names, historically, have also shared an interest in parapsychology, including William James, Hans Berger (inventor of the EEG), Sigmund Freud, and former American Psychological Association (APA) president Gardner Murphy.

www.dailygrail.com...

Finding the effect size, p-value or meta analysis of the data isn't math? Using statistics like we do in all sorts of areas of Science isn't math?

You can't refute any of the evidence that's been presented so you make a vacuous statement that doesn't apply.

Why do I need a Schrodinger equation to show the effect size in a study about Twin Telepathy? Again, that makes no sense. It's just like I don't need a Schrodinger equation to show the effect size of Aspirin's prevention of a second heart attack. I just need more trials and an accumulation of data.

This isn't Burger King and you can't have it your way because of a blind belief. We use these same methods in other disciplines and it's called SCIENTIFIC EVIDENCE. Some of these methods originated with Psi research.

For instance, say I'm doing research on Twin Telepathy and I want to see if there's a connection between sets of twins that others may not have. I get 10 sets of identical twins, fraternal twins, siblings and strangers and I run a test with 10 cards, with the two members of each pairing in different rooms. One member of the pair chooses a card among the 10 cards and their counterpart tries to guess which card they chose.

At the end of the trial, you look at the data and there's a higher effect size above chance for the identical twins than for the other groups. You say that's interesting.

Five years later, there are 2,000 trials of the experiment conducted worldwide. You do a meta analysis and you see that these tests show a stronger deviation from the null hypothesis than the first trials.

This is evidence of an effect. The next thing you do is try and find the mechanism. You don't just bury your head in the sand because you don't like what the evidence is showing.

You may end up doing another thousand trials and find that the effect isn't there. This happens.

Aspirin Does Not Prevent Heart Attacks in Women, Study Finds

www.nytimes.com...

This is Science whether you like it or not. I don't need a Schrodinger wave function to show evidence of a Psi effect or the effect of Aspirin when preventing heart attacks.
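A minimal sketch of how the card-guessing design described above could be scored, assuming each guess is an independent trial with a 1 in 10 chance of a hit. The hit counts are made up for illustration and are not results from any study, and `binomial_tail` is just a name chosen here.

```python
from math import comb

CHANCE = 1 / 10  # 10 cards, so a blind guess hits 1 time in 10

def binomial_tail(hits: int, trials: int, chance: float = CHANCE) -> float:
    """Exact one-sided p-value: P(at least `hits` hits in `trials` guesses)."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(hits, trials + 1)
    )

# Hypothetical hit counts out of 100 guesses per group (illustration only).
example_hits = {
    "identical twins": 18,
    "fraternal twins": 12,
    "siblings": 11,
    "strangers": 9,
}

for group, hits in example_hits.items():
    print(f"{group}: {hits}/100 hits, p = {binomial_tail(hits, 100):.4f}")
```

A small p-value for one group in one run is only the "that's interesting" stage. The argument above rests on pooling many such runs.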

a reply to: CreationBro

What can I say...I bleed high strangeness, as many ATS'rs do.

Don't let SailorJerry find out. He'll think you have a mental issue and bug you about it. *eyeroll*

originally posted by: Woodcarver

a reply to: neoholographic

It says that people who don’t believe are prejudiced bigots.

The paper begins by noting the reason for presenting an overview and discussion of the topic: “Most psychologists could reasonably be described as uninformed skeptics — a minority could reasonably be described as prejudiced bigots — where the paranormal is concerned”. Indeed, it quotes one cognitive scientist as stating that the acceptance of psi phenomena would “send all of science as we know it crashing to the ground”.

But we don’t believe because the “evidence” is not there

But the evidence is out there if you care to look. What we are lacking is an explanation of how it works.

a reply to: surfer_soul

That's why science says "ick". Science likes to measure things. If you get my meaning.

You can't blame them for that. It's what they do. Can't measure God. Nope. Can't measure psi. Nope.

Continue doing your praying. Continue with your psi stuff.

Why do you require validation from those you disdain?

edit on 6/30/2018 by Phage because: (no reason given)

a reply to: neoholographic

And they offer tarot readings.

People don’t accept this because the methods for testing are absolutely ridiculous. Making wheels spin, making balls drop to one side or the other, making random number generators do this or that. Claiming one in a trillion odds that this is legit. There are all kinds of ways to beat those systems, or to at least manipulate the odds in their favor. This is about as unscientific a way to test anything as there is. Now, I know you are convinced, but that does little to help their cause. If they need money so bad, why don’t they win the lottery more often? Why don’t they use their powers in Vegas, or any gambling establishment?

They are seeking funding. They need money to continue their scam and you are just the kind of person they are targeting.

Also, don’t forget to claim your free tarot reading

edit on 30-6-2018 by Woodcarver because: (no reason given)

originally posted by: Phage

a reply to: surfer_soul

That's why science says "ick". Science likes to measure things. If you get my meaning.

You can't blame them for that. It's what they do. Can't measure God. Nope. Can't measure psi. Nope.

Continue doing your praying. Continue with your psi stuff.

Why do you require validation from those you disdain?

Wouldn't the experiments that produce data be a measure?

The issue is more one of interpretation of what the data signifies.

We can measure things that have a possible explanation in God or psychic abilities. We can exclude alternate explanations through experimental design. But we still have to make interpretation of the results. That point, the interpretation, is where science is often inconsistent.

Science's roots in naturalism give it a confirmation bias when interpreting observations and experimental results.

Science will accept quite flimsy evidence for naturalistic explanations (for example, that a big bang singularity arose from quantum™ fluctuation) but will reject heavily evidenced but non falsifiable paradigms such as the anthropic principle/s.

edit on 30/6/2018 by chr0naut because: (no reason given)

a reply to: Phage

originally posted by: Phage

a reply to: surfer_soul

That's why science says "ick". Science likes to measure things. If you get my meaning.

You can't blame them for that. It's what they do. Can't measure God. Nope. Can't measure psi. Nope.

Continue doing your praying. Continue with your psi stuff.

Why do you require validation from those you disdain?

You can't be serious.

Psi effects are measured.

We use these methods to MEASURE the effect size of things in Science all of the time.

This would be like saying the effect of Aspirin on preventing heart attacks hasn't been measured. That's simply Asinine. Psi effects have been measured using well established methods and some of those methods originated with Psi research.

Here are some simple examples.

Pictorial cigarette pack warnings: a meta-analysis of experimental studies

We included studies that used an experimental protocol to test cigarette pack warnings and reported data on both pictorial and text-only conditions. 37 studies with data on 48 independent samples (N=33 613) met criteria.

Pictorial warnings were more effective than text-only warnings for 12 of 17 effectiveness outcomes (all p < 0.05). Relative to text-only warnings, pictorial warnings (1) attracted and held attention better; (2) garnered stronger cognitive and emotional reactions; (3) elicited more negative pack attitudes and negative smoking attitudes and (4) more effectively increased intentions to not start smoking and to quit smoking. Participants also perceived pictorial warnings as being more effective than text-only warnings across all 8 perceived effectiveness outcomes.

tobaccocontrol.bmj.com...

Here's another one.

Bayesian Meta-Analysis of Multiple Continuous Treatments: An Application to Antipsychotic Drugs

Modeling dose-response relationships of drugs is essential to understanding their effect on patient outcomes under realistic circumstances. While intention-to-treat analyses of clinical trials provide the effect of assignment to a particular drug and dose, they do not capture observed exposure after factoring in non-adherence and dropout. We develop Bayesian methods to flexibly model dose-response relationships of binary outcomes with continuous treatment, allowing for treatment effect heterogeneity and a non-linear response surface. We use a hierarchical framework for meta-analysis with the explicit goal of combining information from multiple trials while accounting for heterogeneity. In an application, we examine the risk of excessive weight gain for patients with schizophrenia treated with the second generation antipsychotics paliperidone, risperidone, or olanzapine in 14 clinical trials. Averaging over the sample population, we found that olanzapine contributed to a 15.6% (95% CrI: 6.7, 27.1) excess risk of weight gain at a 500mg cumulative dose. Paliperidone conferred a 3.2% (95% CrI: 1.5, 5.2) and risperidone a 14.9% (95% CrI: 0.0, 38.7) excess risk at 500mg olanzapine equivalent cumulative doses. Blacks had an additional 6.8% (95% CrI: 1.0, 12.4) risk of weight gain over non-blacks at 1000mg olanzapine equivalent cumulative doses of paliperidone.

arxiv.org...

The point is, these methods are used in many different ways and this is SCIENCE. These studies are published in Journals across many different disciplines in Science.

Without things like p-values, effect sizes and meta analysis, we couldn't MEASURE how effective a new drug will be across the population. Here's an example of meta analysis in Psi and it's no different than any study used to find the overall effect size of a group of drugs.

If there's no MEASURED EFFECT then the drug will not get approved.

Feeling the future: A meta-analysis of 90 experiments on the anomalous anticipation of random future events

Abstract

In 2011, one of the authors (DJB) published a report of nine experiments in the Journal of Personality and Social Psychology purporting to demonstrate that an individual’s cognitive and affective responses can be influenced by randomly selected stimulus events that do not occur until after his or her responses have already been made and recorded, a generalized variant of the phenomenon traditionally denoted by the term precognition. To encourage replications, all materials needed to conduct them were made available on request. We here report a meta-analysis of 90 experiments from 33 laboratories in 14 countries which yielded an overall effect greater than 6 sigma, z = 6.40, p = 1.2 × 10⁻¹⁰ with an effect size (Hedges’ g) of 0.09. A Bayesian analysis yielded a Bayes Factor of 1.4 × 10⁹, greatly exceeding the criterion value of 100 for “decisive evidence” in support of the experimental hypothesis. When DJB’s original experiments are excluded, the combined effect size for replications by independent investigators is 0.06, z = 4.16, p = 1.1 × 10⁻⁵, and the BF value is 3,853, again exceeding the criterion for “decisive evidence.” The number of potentially unretrieved experiments required to reduce the overall effect size of the complete database to a trivial value of 0.01 is 544, and seven of eight additional statistical tests support the conclusion that the database is not significantly compromised by either selection bias or by “p-hacking”—the selective suppression of findings or analyses that failed to yield statistical significance. P-curve analysis, a recently introduced statistical technique, estimates the true effect size of our database to be 0.20, virtually identical to the effect size of DJB’s original experiments (0.22) and the closely related “presentiment” experiments (0.21). We discuss the controversial status of precognition and other anomalous effects collectively known as psi.

f1000research.com...
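
For anyone who wants a feel for what z scores like the ones in the abstract mean, here is a minimal Python sketch that converts a z score into a one-tailed tail probability under a standard normal curve. The function name one_tailed_p is my own, not from the paper, and the plain normal tail is an assumption: the abstract doesn't spell out the authors' exact procedure, so the printed values show the order of magnitude and need not match the reported p values digit for digit.

```python
import math


def one_tailed_p(z: float) -> float:
    """Upper-tail probability P(Z >= z) for a standard normal Z.

    Hypothetical helper for illustration only; it assumes a plain
    normal tail, which may differ from the method used in the paper.
    """
    # erfc is the complementary error function: P(Z >= z) = erfc(z / sqrt(2)) / 2
    return 0.5 * math.erfc(z / math.sqrt(2.0))


# 1.96 is the familiar conventional threshold; 4.16 and 6.40 are the
# z scores quoted in the abstract.
for z in (1.96, 4.16, 6.40):
    print(f"z = {z:.2f} -> one-tailed p ~ {one_tailed_p(z):.2e}")
```

The point is how fast the tail shrinks: going from z around 2 to z above 6 takes the probability from a few percent down to roughly one in ten billion.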

This is science whether you like it or not. The methods have been used for years, and some of them were created by psi researchers. So the objection isn't to the science, it's to the subject matter.

These are researchers doing scientific research, and pseudoskeptics accept these same methods as science except when they're used for psi. Give me a break!

edit on 30-6-2018 by neoholographic because: (no reason given)

originally posted by: Phage

a reply to: surfer_soul

That's why science says "ick". Science likes to measure things. If you get my meaning.

You can't blame them for that. It's what they do. Can't measure God. Nope. Can't measure psi. Nope.

Continue doing your praying. Continue with your psi stuff.

Why do you require validation from those you disdain?

What makes you think I require validation or that I disdain anyone?

Science might yet discover how the psi stuff works. It’s not science I object to, but those who are so sceptical of everything that doesn’t match their world view that they are unprepared to even look at what data there is that might shift their paradigm.

Einstein the patent clerk was mocked by the scientific establishment at first because he wasn’t part of it at the time. Attitudes like that don’t help progress.

originally posted by: neoholographic

Indeed, it quotes one cognitive scientist as stating that the acceptance of psi phenomena would “send all of science as we know it crashing to the ground”.

This is insanely stupid. It would do nothing of the sort. "Science" is not a singular noun. There is no reason "science" cannot incorporate new discoveries, just as it has in the past. Indeed, this must happen. Your signature says it all.
